This page has been recently added and will contain new modules that can be integrated into courses.


Modules

- 1: Contributors

- 2: List

- 3: Autogenerating Analytics Rest Services

- 4: DevOps

- 4.1: DevOps - Continuous Improvement

- 4.2: Infrastructure as Code (IaC)

- 4.3: Ansible

- 4.4: Puppet

- 4.5: Travis

- 4.6: DevOps with AWS

- 4.7: DevOps with Azure Monitor

- 5: Google Colab

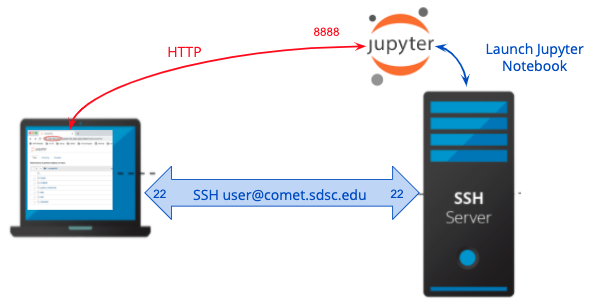

- 6: Modules from SDSC

- 7: AI-First Engineering Cybertraining Spring 2021 - Module

- 7.1: 2021

- 7.1.1: Introduction to AI-Driven Digital Transformation

- 7.1.2: AI-First Engineering Cybertraining Spring 2021

- 7.1.3: Introduction to AI in Health and Medicine

- 7.1.4: Mobility (Industry)

- 7.1.5: Space and Energy

- 7.1.6: AI In Banking

- 7.1.7: Cloud Computing

- 7.1.8: Transportation Systems

- 7.1.9: Commerce

- 7.1.10: Python Warm Up

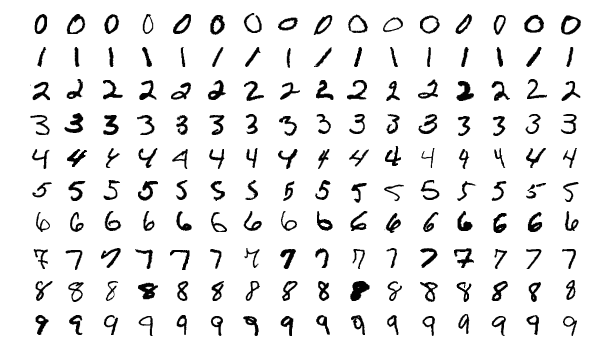

- 7.1.11: Distributed Training for MNIST

- 7.1.12: MLP + LSTM with MNIST on Google Colab

- 7.1.13: MNIST Classification on Google Colab

- 7.1.14: MNIST With PyTorch

- 7.1.15: MNIST-AutoEncoder Classification on Google Colab

- 7.1.16: MNIST-CNN Classification on Google Colab

- 7.1.17: MNIST-LSTM Classification on Google Colab

- 7.1.18: MNIST-MLP Classification on Google Colab

- 7.1.19: MNIST-RNN Classification on Google Colab

- 8: Big Data Applications

- 8.1: 2020

- 8.1.1: Introduction to AI-Driven Digital Transformation

- 8.1.2: BDAA Fall 2020 Course Lectures and Organization

- 8.1.3: Big Data Use Cases Survey

- 8.1.4: Physics

- 8.1.5: Introduction to AI in Health and Medicine

- 8.1.6: Mobility (Industry)

- 8.1.7: Sports

- 8.1.8: Space and Energy

- 8.1.9: AI In Banking

- 8.1.10: Cloud Computing

- 8.1.11: Transportation Systems

- 8.1.12: Commerce

- 8.1.13: Python Warm Up

- 8.1.14: MNIST Classification on Google Colab

- 8.2: 2019

- 8.2.1: Introduction

- 8.2.2: Introduction (Fall 2018)

- 8.2.3: Motivation

- 8.2.4: Motivation (cont.)

- 8.2.5: Cloud

- 8.2.6: Physics

- 8.2.7: Deep Learning

- 8.2.8: Sports

- 8.2.9: Deep Learning (Cont. I)

- 8.2.10: Deep Learning (Cont. II)

- 8.2.11: Introduction to Deep Learning (III)

- 8.2.12: Cloud Computing

- 8.2.13: Introduction to Cloud Computing

- 8.2.14: Assignments

- 8.2.14.1: Assignment 1

- 8.2.14.2: Assignment 2

- 8.2.14.3: Assignment 3

- 8.2.14.4: Assignment 4

- 8.2.14.5: Assignment 5

- 8.2.14.6: Assignment 6

- 8.2.14.7: Assignment 7

- 8.2.14.8: Assignment 8

- 8.2.15: Applications

- 8.2.15.1: Big Data Use Cases Survey

- 8.2.15.2: Cloud Computing

- 8.2.15.3: e-Commerce and LifeStyle

- 8.2.15.4: Health Informatics

- 8.2.15.5: Overview of Data Science

- 8.2.15.6: Physics

- 8.2.15.7: Plotviz

- 8.2.15.8: Practical K-Means, Map Reduce, and Page Rank for Big Data Applications and Analytics

- 8.2.15.9: Radar

- 8.2.15.10: Sensors

- 8.2.15.11: Sports

- 8.2.15.12: Statistics

- 8.2.15.13: Web Search and Text Mining

- 8.2.15.14: WebPlotViz

- 8.2.16: Technologies

- 9: MNIST Example

- 10: Sample

- 10.1: Module Sample

- 10.2: Alert Sample

- 10.3: Element Sample

- 10.4: Figure Sample

- 10.5: Mermaid Sample

1 - Contributors

A partial list of contributors.

List of contributors

| HID | Lastname | Firstname |

|---|---|---|

| fa18-423-02 | Liuwie | Kelvin |

| fa18-423-03 | Tamhankar | Omkar |

| fa18-423-05 | Hu | Yixing |

| fa18-423-06 | Mick | Chandler |

| fa18-423-07 | Gillum | Michael |

| fa18-423-08 | Zhao | Yuli |

| fa18-516-01 | Angelier | Mario |

| fa18-516-02 | Barshikar | Vineet |

| fa18-516-03 | Branam | Jonathan |

| fa18-516-04 | Demeulenaere | David |

| fa18-516-06 | Filliman | Paul |

| fa18-516-08 | Joshi | Varun |

| fa18-516-10 | Li | Rui |

| fa18-516-11 | Cheruvu | Murali |

| fa18-516-12 | Luo | Yu |

| fa18-516-14 | Manipon | Gerald |

| fa18-516-17 | Pope | Brad |

| fa18-516-18 | Rastogi | Richa |

| fa18-516-19 | Rutledge | De’Angelo |

| fa18-516-21 | Shanishchara | Mihir |

| fa18-516-22 | Sims | Ian |

| fa18-516-23 | Sriramulu | Anand |

| fa18-516-24 | Withana | Sachith |

| fa18-516-25 | Wu | Chun Sheng |

| fa18-516-26 | Andalibi | Vafa |

| fa18-516-29 | Singh | Shilpa |

| fa18-516-30 | Kamau | Alexander |

| fa18-516-31 | Spell | Jordan |

| fa18-523-52 | Heine | Anna |

| fa18-523-53 | Kakarala | Chaitanya |

| fa18-523-56 | Hinders | Daniel |

| fa18-523-57 | Rajendran | Divya |

| fa18-523-58 | Duvvuri | Venkata Pramod Kumar |

| fa18-523-59 | Bhutka | Jatinkumar |

| fa18-523-60 | Fetko | Izolda |

| fa18-523-61 | Stockwell | Jay |

| fa18-523-62 | Bahl | Manek |

| fa18-523-63 | Miller | Mark |

| fa18-523-64 | Tupe | Nishad |

| fa18-523-65 | Patil | Prajakta |

| fa18-523-66 | Sanjay | Ritu |

| fa18-523-67 | Sridhar | Sahithya |

| fa18-523-68 | AKKAS | Selahattin |

| fa18-523-69 | Rai | Sohan |

| fa18-523-70 | Dash | Sushmita |

| fa18-523-71 | Kota | Uma Bhargavi |

| fa18-523-72 | Bhoyar | Vishal |

| fa18-523-73 | Tong | Wang |

| fa18-523-74 | Ma | Yeyi |

| fa18-523-79 | Rapelli | Abhishek |

| fa18-523-80 | Beall | Evan |

| fa18-523-81 | Putti | Harika |

| fa18-523-82 | Madineni | Pavan Kumar |

| fa18-523-83 | Tran | Nhi |

| fa18-523-84 | Hilgenkamp | Adam |

| fa18-523-85 | Li | Bo |

| fa18-523-86 | Liu | Jeff |

| fa18-523-88 | Leite | John |

| fa19-516-140 | Abdelgader | Mohamed |

| fa19-516-141 | (Bala) | Balakrishna Katuru |

| fa19-516-142 | Martel | Tran |

| fa19-516-143 | Sanders | Sheri |

| fa19-516-144 | Holland | Andrew |

| fa19-516-145 | Kumar | Anurag |

| fa19-516-146 | Jones | Kenneth |

| fa19-516-147 | Upadhyay | Harsha |

| fa19-516-148 | Raizada | Sub |

| fa19-516-149 | Modi | Hely |

| fa19-516-150 | Kowshi | Akshay |

| fa19-516-151 | Liu | Qiwei |

| fa19-516-152 | Pagadala | Pratibha Madharapakkam |

| fa19-516-153 | Mirjankar | Anish |

| fa19-516-154 | Shah | Aneri |

| fa19-516-155 | Pimparkar | Ketan |

| fa19-516-156 | Nagarajan | Manikandan |

| fa19-516-157 | Wang | Chenxu |

| fa19-516-158 | Dayanand | Daivik |

| fa19-516-159 | Zebrowski | Austin |

| fa19-516-160 | Jain | Shreyans |

| fa19-516-161 | Nelson | Jim |

| fa19-516-162 | Katukota | Shivani |

| fa19-516-163 | Hoerr | John |

| fa19-516-164 | Mirjankar | Siddhesh |

| fa19-516-165 | Wang | Zhi |

| fa19-516-166 | Funk | Brian |

| fa19-516-167 | Screen | William |

| fa19-516-168 | Deopura | Deepak |

| fa19-516-169 | Pandit | Harshawardhan |

| fa19-516-170 | Wan | Yanting |

| fa19-516-171 | Kandimalla | Jagadeesh |

| fa19-516-172 | Shaik | Nayeemullah Baig |

| fa19-516-173 | Yadav | Brijesh |

| fa19-516-174 | Ancha | Sahithi |

| fa19-523-180 | Grant | Jonathon |

| fa19-523-181 | Falkenstein | Max |

| fa19-523-182 | Siddiqui | Zak |

| fa19-523-183 | Creech | Brent |

| fa19-523-184 | Floreak | Michael |

| fa19-523-186 | Park | Soowon |

| fa19-523-187 | Fang | Chris |

| fa19-523-188 | Katukota | Shivani |

| fa19-523-189 | Wang | Huizhou |

| fa19-523-190 | Konger | Skyler |

| fa19-523-191 | Tao | Yiyu |

| fa19-523-192 | Kim | Jihoon |

| fa19-523-193 | Sung | Lin-Fei |

| fa19-523-194 | Minton | Ashley |

| fa19-523-195 | Gan | Kang Jie |

| fa19-523-196 | Zhang | Xinzhuo |

| fa19-523-198 | Matthys | Dominic |

| fa19-523-199 | Gupta | Lakshya |

| fa19-523-200 | Chaudhari | Naimesh |

| fa19-523-201 | Bohlander | Ross |

| fa19-523-202 | Liu | Limeng |

| fa19-523-203 | Yoo | Jisang |

| fa19-523-204 | Dingman | Andrew |

| fa19-523-205 | Palani | Senthil |

| fa19-523-206 | Arivukadal | Lenin |

| fa19-523-207 | Chadderwala | Nihir |

| fa19-523-208 | Natarajan | Saravanan |

| fa19-523-209 | Kirgiz | Asya |

| fa19-523-210 | Han | Matthew |

| fa19-523-211 | Chiang | Yu-Hsi |

| fa19-523-212 | Clemons | Josiah |

| fa19-523-213 | Hu | Die |

| fa19-523-214 | Liu | Yihan |

| fa19-523-215 | Farris | Chris |

| fa19-523-216 | Kasem | Jamal |

| hid-sp18-201 | Ali | Sohile |

| hid-sp18-202 | Cantor | Gabrielle |

| hid-sp18-203 | Clarke | Jack |

| hid-sp18-204 | Gruenberg | Maxwell |

| hid-sp18-205 | Krzesniak | Jonathan |

| hid-sp18-206 | Mhatre | Krish Hemant |

| hid-sp18-207 | Phillips | Eli |

| hid-sp18-208 | Fanbo | Sun |

| hid-sp18-209 | Tugman | Anthony |

| hid-sp18-210 | Whelan | Aidan |

| hid-sp18-401 | Arra | Goutham |

| hid-sp18-402 | Athaley | Sushant |

| hid-sp18-403 | Axthelm | Alexander |

| hid-sp18-404 | Carmickle | Rick |

| hid-sp18-405 | Chen | Min |

| hid-sp18-406 | Dasegowda | Ramyashree |

| hid-sp18-407 | Keith | Hickman |

| hid-sp18-408 | Joshi | Manoj |

| hid-sp18-409 | Kadupitige | Kadupitiya |

| hid-sp18-410 | Kamatgi | Karan |

| hid-sp18-411 | Kaveripakam | Venkatesh Aditya |

| hid-sp18-412 | Kotabagi | Karan |

| hid-sp18-413 | Lavania | Anubhav |

| hid-sp18-414 | Joao | Leite |

| hid-sp18-415 | Mudvari | Janaki |

| hid-sp18-416 | Sabra | Ossen |

| hid-sp18-417 | Ray | Rashmi |

| hid-sp18-418 | Surya | Sekar |

| hid-sp18-419 | Sobolik | Bertholt |

| hid-sp18-420 | Swarnima | Sowani |

| hid-sp18-421 | Vijjigiri | Priyadarshini |

| hid-sp18-501 | Agunbiade | Tolu |

| hid-sp18-502 | Alshi | Ankita |

| hid-sp18-503 | Arnav | Arnav |

| hid-sp18-504 | Arshad | Moeen |

| hid-sp18-505 | Cate | Averill |

| hid-sp18-506 | Esteban | Orly |

| hid-sp18-507 | Giuliani | Stephen |

| hid-sp18-508 | Guo | Yue |

| hid-sp18-509 | Irey | Ryan |

| hid-sp18-510 | Kaul | Naveen |

| hid-sp18-511 | Khandelwal | Sandeep Kumar |

| hid-sp18-512 | Kikaya | Felix |

| hid-sp18-513 | Kugan | Uma |

| hid-sp18-514 | Lambadi | Ravinder |

| hid-sp18-515 | Lin | Qingyun |

| hid-sp18-516 | Pathan | Shagufta |

| hid-sp18-517 | Pitkar | Harshad |

| hid-sp18-518 | Robinson | Michael |

| hid-sp18-519 | Saurabh | Shukla |

| hid-sp18-520 | Sinha | Arijit |

| hid-sp18-521 | Steinbruegge | Scott |

| hid-sp18-522 | Swaroop | Saurabh |

| hid-sp18-523 | Tandon | Ritesh |

| hid-sp18-524 | Tian | Hao |

| hid-sp18-525 | Walker | Bruce |

| hid-sp18-526 | Whitson | Timothy |

| hid-sp18-601 | Ferrari | Juliano |

| hid-sp18-602 | Naredla | Keerthi |

| hid-sp18-701 | Unni | Sunanda Unni |

| hid-sp18-702 | Dubey | Lokesh |

| hid-sp18-703 | Rufael | Ribka |

| hid-sp18-704 | Meier | Zachary |

| hid-sp18-705 | Thompson | Timothy |

| hid-sp18-706 | Sylla | Hady |

| hid-sp18-707 | Smith | Michael |

| hid-sp18-708 | Wright | Darren |

| hid-sp18-709 | Castro | Andres |

| hid-sp18-710 | Kugan | Uma M |

| hid-sp18-711 | Kagita | Mani |

| sp19-222-100 | Saxberg | Jarod |

| sp19-222-101 | Bower | Eric |

| sp19-222-102 | Danehy | Ryan |

| sp19-222-89 | Fischer | Brandon |

| sp19-222-90 | Japundza | Ethan |

| sp19-222-91 | Zhang | Tyler |

| sp19-222-92 | Yeagley | Ben |

| sp19-222-93 | Schwantes | Brian |

| sp19-222-94 | Gotts | Andrew |

| sp19-222-96 | Olson | Mercedes |

| sp19-222-97 | Levy | Zach |

| sp19-222-98 | McDowell | Xandria |

| sp19-222-99 | Badillo | Jesus |

| sp19-516-121 | Bahramian | Hamidreza |

| sp19-516-122 | Duer | Anthony |

| sp19-516-123 | Challa | Mallik |

| sp19-516-124 | Garbe | Andrew |

| sp19-516-125 | Fine | Keli |

| sp19-516-126 | Peters | David |

| sp19-516-127 | Collins | Eric |

| sp19-516-128 | Rawat | Tarun |

| sp19-516-129 | Ludwig | Robert |

| sp19-516-130 | Rachepalli | Jeevan Reddy |

| sp19-516-131 | Huang | Jing |

| sp19-516-132 | Gupta | Himanshu |

| sp19-516-133 | Mannarswamy | Aravind |

| sp19-516-134 | Sivan | Manjunath |

| sp19-516-135 | Yue | Xiao |

| sp19-516-136 | Eggleton | Joaquin Avila |

| sp19-516-138 | Samanvitha | Pradhan |

| sp19-516-139 | Pullakhandam | Srimannarayana |

| sp19-616-111 | Vangalapat | Tharak |

| sp19-616-112 | Joshi | Shirish |

| sp20-516-220 | Goodman | Josh |

| sp20-516-222 | McCandless | Peter |

| sp20-516-223 | Dharmchand | Rahul |

| sp20-516-224 | Mishra | Divyanshu |

| sp20-516-227 | Gu | Xin |

| sp20-516-229 | Shaw | Prateek |

| sp20-516-230 | Thornton | Ashley |

| sp20-516-231 | Kegerreis | Brian |

| sp20-516-232 | Singam | Ashok |

| sp20-516-233 | Zhang | Holly |

| sp20-516-234 | Goldfarb | Andrew |

| sp20-516-235 | Ibadi | Yasir Al |

| sp20-516-236 | Achath | Seema |

| sp20-516-237 | Beckford | Jonathan |

| sp20-516-238 | Mishra | Ishan |

| sp20-516-239 | Lam | Sara |

| sp20-516-240 | Nicasio | Falconi |

| sp20-516-241 | Jaswal | Nitesh |

| sp20-516-243 | Drummond | David |

| sp20-516-245 | Baker | Joshua |

| sp20-516-246 | Fischer | Rhonda |

| sp20-516-247 | Gupta | Akshay |

| sp20-516-248 | Bookland | Hannah |

| sp20-516-250 | Palani | Senthil |

| sp20-516-251 | Jiang | Shihui |

| sp20-516-252 | Zhu | Jessica |

| sp20-516-253 | Arivukadal | Lenin |

| sp20-516-254 | Kagita | Mani |

| sp20-516-255 | Porwal | Prafull |

2 - List

This page contains the list of current modules.

Legend

- h - header missing the #

- m - too many #’s in titles

3 - Autogenerating Analytics Rest Services

On this page, we will deploy a Pipeline ANOVA SVM onto our openapi server, then train the model with data and use it to make predictions. All code needed for this is provided in the cloudmesh-openapi repository. The code is largely based on this sklearn example.

1. Overview

1.1 Prerequisite

It is assumed that the user has installed and is familiar with the following:

- python3 --version >= 3.8

- Linux command line

1.2 Effort

- 15 minutes (not including assignment)

1.3 List of Topics Covered

In this module, we focus on the following:

- Training ML models with stateless requests

- Generating RESTful APIs using cms openapi for existing python code

- Deploying openapi definitions onto a local server

- Interacting with newly created openapi services

1.4 Syntax of this Tutorial

We describe the syntax for terminal commands used in this tutorial using the following example:

(TESTENV) ~ $ echo "hello"

Here, we are in the python virtual environment (TESTENV) in the home directory ~. The $ symbol denotes the beginning of the terminal command (i.e., echo "hello"). When copying and pasting commands, do not include $ or anything before it.

2. Creating a virtual environment

It is best practice to install python packages that you do not expect to need regularly into a virtual environment. We also want to place all source code in a common directory called cm. Let us create both for this tutorial.

On your Linux or macOS machine, open a new terminal.

~ $ python3 -m venv ~/ENV3

The above will create a new python virtual environment. Activate it with the following.

~ $ source ~/ENV3/bin/activate

First, we verify that python and pip are the ones from the virtual environment, and then we update pip:

(ENV3) ~ $ which python

/Users/user/ENV3/bin/python

(ENV3) ~ $ which pip

/Users/user/ENV3/bin/pip

(ENV3) ~ $ pip install -U pip
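If you prefer to check from inside Python, the short sketch below tests both the version requirement from Section 1.1 and whether the interpreter runs inside a virtual environment. It relies on the standard-library behavior that sys.prefix differs from sys.base_prefix inside a venv. The function names and the file name check_env.py are our own and are not part of cloudmesh.

```python
import sys


def python_version_ok(minimum=(3, 8)):
    """Return True if the running interpreter meets the minimum version."""
    return sys.version_info[:2] >= minimum


def in_virtualenv():
    """Return True if running inside a venv created with `python3 -m venv`.

    Inside a venv, sys.prefix points at the environment (e.g. ~/ENV3),
    while sys.base_prefix still points at the base Python installation.
    """
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("Python >= 3.8:", python_version_ok())
    print("Inside a virtual environment:", in_virtualenv())
    print("Interpreter:", sys.executable)
```

If you save the sketch as check_env.py and run it with (ENV3) ~ $ python check_env.py, it should report True for both checks when ENV3 is active.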

Now we can use cloudmesh-installer to install the code in developer mode. This gives you access to the source code.

First, create a new directory for the cloudmesh code.

(ENV3) ~ $ mkdir ~/cm

(ENV3) ~ $ cd ~/cm

Next, we install cloudmesh-installer and use it to install cloudmesh openapi.


(ENV3) ~/cm $ pip install cloudmesh-installer

(ENV3) ~/cm $ cloudmesh-installer get openapi

Finally, this tutorial uses scikit-learn (sklearn). Install the needed packages as follows. The package on PyPI is named scikit-learn; the package name sklearn is deprecated.

(ENV3) ~/cm $ pip install scikit-learn pandas

3. The Python Code

Let’s take a look at the python code we would like to make a REST service from. First, let’s navigate to the local openapi repository that was installed with cloudmesh-installer.

(ENV3) ~/cm $ cd cloudmesh-openapi

(ENV3) ~/cm/cloudmesh-openapi $ pwd

/Users/user/cm/cloudmesh-openapi

Let us take a look at the PipelineAnovaSVM example code.

A Pipeline chains a sequence of transformations with a final estimator. Analysis of variance (ANOVA) is used for feature selection. A support vector machine (SVM) is then used as the actual learning model on the selected features.
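To see what such a pipeline does outside of cloudmesh, the sketch below builds one directly in scikit-learn on the iris data bundled with the library. It follows the general Pipeline ANOVA SVM pattern and is not copied from sklearn_svm.py. Keeping k=3 features and using a linear kernel are our own assumptions.

```python
from sklearn.datasets import load_iris
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.svm import SVC

# Load the bundled iris data: 150 samples, 4 features, 3 classes.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

# Step 1: the ANOVA F-test keeps the k features that best separate the classes.
# Step 2: a linear SVM is fitted on the selected features only.
anova_svm = make_pipeline(SelectKBest(f_classif, k=3), SVC(kernel="linear"))
anova_svm.fit(X_train, y_train)

# make_pipeline names each step after its lowercased class name.
selected = anova_svm.named_steps["selectkbest"].get_support()
accuracy = anova_svm.score(X_test, y_test)
print("features kept:", selected)
print("test accuracy:", round(accuracy, 3))
```

The selector is a pipeline step, so it is fitted only on the training split. As a result, feature selection never sees the test data, and the test accuracy is not inflated by it.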

Use your favorite editor to look at it (whether it be VS Code, vim, nano, etc.). We will use emacs:

(ENV3) ~/cm/cloudmesh-openapi $ emacs ./tests/Scikitlearn-experimental/sklearn_svm.py

The class within this file has two main methods to interact with. This excludes the file upload capability, which cms openapi adds when the API is generated (see Section 4).

    @classmethod
    def train(cls, filename: str) -> str:
        """
        Given the filename of an uploaded file, train a PipelineAnovaSVM
        model from the data. Assumption of data is the classifications
        are in the last column of the data.

        Returns the classification report of the test split
        """
        # some code...

    @classmethod
    def make_prediction(cls, model_name: str, params: str):
        """
        Make a prediction based on training configuration
        """
        # some code...

Note the parameters that each of these methods takes in. These parameters are expected as part of the stateless request for each method.
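Each request is stateless, so everything a call needs must arrive as a parameter. Anything that must survive between calls, here the trained model, has to be stored on the server. The sketch below is a hypothetical, simplified stand-in for the two methods and is not the code in sklearn_svm.py. It makes three assumptions:

- The input is a comma-separated file with the class label in the last column (the iris.data layout).
- The model is named after the file stem.
- The model is pickled into a temporary directory; MODEL_DIR is our own invention.

```python
import csv
import pickle
import tempfile
from pathlib import Path

from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.metrics import classification_report
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.svm import SVC

# Hypothetical location where trained models persist between requests.
MODEL_DIR = Path(tempfile.gettempdir()) / "pipeline_anova_svm_models"


def train(filename: str) -> str:
    """Train on a CSV whose last column is the class; return a report."""
    with open(filename, newline="") as f:
        rows = [row for row in csv.reader(f) if row]  # skip blank lines
    X = [[float(v) for v in row[:-1]] for row in rows]
    y = [row[-1] for row in rows]
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.25, stratify=y, random_state=0
    )
    model = make_pipeline(SelectKBest(f_classif, k=3), SVC(kernel="linear"))
    model.fit(X_train, y_train)

    # Persist the model under the data set's name, e.g. iris.data -> iris.
    MODEL_DIR.mkdir(parents=True, exist_ok=True)
    with open(MODEL_DIR / f"{Path(filename).stem}.pkl", "wb") as f:
        pickle.dump(model, f)
    return classification_report(y_test, model.predict(X_test))


def make_prediction(model_name: str, params: str) -> str:
    """Load a stored model and classify one comma-separated sample."""
    with open(MODEL_DIR / f"{model_name}.pkl", "rb") as f:
        model = pickle.load(f)
    features = [float(v) for v in params.split(",")]
    return str(model.predict([features])[0])
```

Here, train("iris.data") followed by make_prediction("iris", "5.1, 3.5, 1.4, 0.2") mirrors the two requests made in Section 7. For this sample, we would expect the result Iris-setosa.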

4. Generating the OpenAPI YAML file

Let us now use the python code from above to create the openapi YAML file that we will deploy onto our server. To correctly generate this file, use the following command:

(ENV3) ~/cm/cloudmesh-openapi $ cms openapi generate PipelineAnovaSVM \
--filename=./tests/Scikitlearn-experimental/sklearn_svm.py \
--import_class \
--enable_upload

Let us digest the options we have specified:

- --filename indicates the path to the python file in which our code is located.

- --import_class notifies cms openapi that the YAML file is generated from a class. The name of this class is specified as PipelineAnovaSVM.

- --enable_upload allows the user to upload files to be stored on the server for reference. This flag causes cms openapi to auto-generate a new python file with the upload method appended to the end of the file. For this example, you will notice a new file has been added in the same directory as sklearn_svm.py. The file is aptly called: sklearn_svm_upload-enabled.py

5. The OpenAPI YAML File (optional)

If Section 4 above was completed correctly, cms will have generated the corresponding openapi YAML file. Let us take a look at it.

(ENV3) ~/cm/cloudmesh-openapi $ emacs ./tests/Scikitlearn-experimental/sklearn_svm.yaml

This YAML file has a lot of information to digest. The basic structure is documented here. However, it is not necessary to understand this information to deploy RESTful APIs.

Do, however, take a look at paths: on line 9 of this file. This section lists the different endpoints of our API. Notice how these endpoints correspond to the methods in the python file we generated them from.

6. Starting the Server

Using the YAML file from Section 4, we can now start the server.

(ENV3) ~/cm/cloudmesh-openapi $ cms openapi server start ./tests/Scikitlearn-experimental/sklearn_svm.yaml

The server should now be active. Navigate to http://localhost:8080/cloudmesh/ui.

7. Interacting With the Endpoints

7.1 Uploading the Dataset

We now have a nice user interface to interact with our newly generated API. Let us upload the data set. We are going to use the iris data set in this example, which we have provided for you. Navigate to the /upload endpoint by clicking on it, then click Try it out.

We can now upload the file. Click on Choose File and select the data set located at ~/cm/cloudmesh-openapi/tests/Scikitlearn-experimental/iris.data, then hit Execute. We should get a 200 response code, telling us that everything went OK.

7.2 Training on the Dataset

The server now has our dataset. Let us now navigate to the /train endpoint by, again, clicking on it. Similarly, click Try it out. The parameter being asked for is the filename. The filename we are interested in is iris.data. Then click Execute. We should get another 200 response code with a classification report in the response body.

7.3 Making Predictions

We now have a model trained on the iris data set. Let us now use it to make predictions. The model expects four attribute values: sepal length, sepal width, petal length, and petal width. Let us use the values 5.1, 3.5, 1.4, 0.2 as our attributes. The expected classification is Iris-setosa.

Navigate to the /make_prediction endpoint as we have with the other endpoints. Again, let us Try it out. We need to provide the name of the model and the params (attribute values). Our model is aptly called iris (based on the name of the data set), and for params we enter 5.1, 3.5, 1.4, 0.2. Then click Execute.

As expected, we have a classification of Iris-setosa.
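The same calls can also be made without the browser UI. The sketch below uses only the Python standard library and rests on two assumptions we have not verified against the generated specification:

- The API is served under http://localhost:8080/cloudmesh, the prefix of the UI.
- /train and /make_prediction accept GET requests with query parameters named after the method arguments.

Check the paths: section of sklearn_svm.yaml for the actual HTTP methods and parameter names before relying on it.

```python
import json
import urllib.parse
import urllib.request

# Assumption: the API is served under the same prefix as the UI.
BASE_URL = "http://localhost:8080/cloudmesh"


def endpoint_url(endpoint: str, **params: str) -> str:
    """Build a GET URL such as .../make_prediction?model_name=iris&params=..."""
    query = urllib.parse.urlencode(params)  # commas and spaces get encoded
    return f"{BASE_URL}/{endpoint}?{query}"


def call(endpoint: str, **params: str):
    """Send a GET request; return parsed JSON if possible, else raw text."""
    with urllib.request.urlopen(endpoint_url(endpoint, **params)) as resp:
        body = resp.read().decode("utf-8")
    try:
        return json.loads(body)
    except json.JSONDecodeError:
        return body
```

With the server from Section 6 running, you could call call("train", filename="iris.data") and then call("make_prediction", model_name="iris", params="5.1, 3.5, 1.4, 0.2") to mirror the steps above. The upload step is left to the UI, since multipart file uploads are awkward with urllib alone.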

8. Clean Up (optional)

At this point, we have created and trained a model using cms openapi. After satisfactory use, we can shut down the server. Let us check what we have running.

(ENV3) ~/cm/cloudmesh-openapi $ cms openapi server ps

openapi server ps

INFO: Running Cloudmesh OpenAPI Servers

+-------------+-------+--------------------------------------------------+

| name | pid | spec |

+-------------+-------+--------------------------------------------------+

| sklearn_svm | 94428 | ./tests/Scikitlearn- |

| | | experimental/sklearn_svm.yaml |

+-------------+-------+--------------------------------------------------+

We can stop the server with the following command:

(ENV3) ~/cm/cloudmesh-openapi $ cms openapi server stop sklearn_svm

We can verify the server is shut down by running the ps command again.

(ENV3) ~/cm/cloudmesh-openapi $ cms openapi server ps

openapi server ps

INFO: Running Cloudmesh OpenAPI Servers

None

9. Uninstallation (Optional)

After running this tutorial, you may uninstall everything related to cloudmesh as follows:

First, deactivate the virtual environment.

(ENV3) ~/cm/cloudmesh-openapi $ deactivate

~/cm/cloudmesh-openapi $ cd ~

Then, we remove the ~/cm directory.

~ $ rm -r -f ~/cm

We also remove the cloudmesh hidden files:

~ $ rm -r -f ~/.cloudmesh

Lastly, we delete our virtual environment.

~ $ rm -r -f ~/ENV3

Cloudmesh is now successfully uninstalled.

10. Assignments

Many ML models follow the same basic process for training and testing:

- Upload Training Data

- Train the model

- Test the model

Using the PipelineAnovaSVM code as a template, write python code for a new model and deploy it as a RESTful API as we have done above. Train and test your model using the provided iris data set. There are plenty of examples that can be referenced here.

11. References

4 - DevOps

We present here a collection of information and tools related to DevOps.

4.1 - DevOps - Continuous Improvement

Deploying enterprise applications has always been challenging. Without consistent and reliable processes and practices, it would be impossible to track and measure the deployment artifacts. That means knowing which code files and configuration data have been deployed to which servers, and what level of unit and integration testing has been done across the various components of the enterprise applications. Deploying software to the cloud is much more complex. DevOps teams do not have extensive access to the infrastructure and are forced to follow the guidelines and tools provided by the cloud companies. In recent years, Continuous Integration (CI) and Continuous Deployment (CD) have become the DevOps mantra for delivering software reliably and consistently.

While the CI/CD process is as difficult as it gets, monitoring the deployed applications is emerging as a new challenge. This is especially true on infrastructure that is partly virtual, with VMs in combination with containers. Continuous Monitoring (CM) is a relatively new concept that is rapidly gaining popularity and becoming an integral part of overall DevOps functionality. Depending on where the software has been deployed, continuous monitoring can range from simply monitoring the behavior of the applications to end-to-end visibility across the infrastructure. At the complex end, it also covers heartbeat and health checks of the deployed applications, along with dynamic scaling based on their usage. Addressing this challenge requires building a robust monitoring pipeline. Continuous monitoring is much easier to control if it is considered as early as possible and built into the software during development. We can then track and analyze metrics much closer to the needs of the application. Aware of this need, cloud companies provide various DevOps tools to make CI/CD and continuous monitoring as easy as possible. While some of these tools and capabilities are provided by the cloud offerings, others must be planned and built into our software.

At a high level, we can think of a simple pipeline that achieves a consistent and scalable deployment process, the CI/CD and Continuous Monitoring pipeline:

- Step 1 - Continuous Development - Plan, Code, Build and Test:

  Planning, coding, and building the deployable artifacts (code, configuration, database, etc.) and putting them through the various types of tests across all dimensions, from technical to business and from internal to external, as automatically as possible. All these aspects come under Continuous Development.

- Step 2 - Continuous Improvement - Deploy, Operate and Monitor:

  Once the applications are deployed to production, we must look at how they are operated: bug and health checks, performance, and scalability. This goes along with various levels of monitoring, including the infrastructure itself. It also includes the cold-start delays caused by on-demand VM or container instantiation in the cloud offerings, which result from the dynamic scalability of the deployment and the selected hosting options. Making the necessary adjustments to improve the overall experience is essentially what is called Continuous Improvement.

4.2 - Infrastructure as Code (IaC)

Learning Objectives


- Introduction to IaC

- How IaC is related to DevOps

- How IaC differs from Configuration Management Tools, and how they are related

- Listing of IaC Tools

- Further Reading

Introduction to IaC

IaC (Infrastructure as Code) is the ability of code to generate, maintain, and destroy application infrastructure such as servers, storage, and networking without requiring manual changes. The state of the infrastructure is maintained in files.

Cloud architectures and containers have forced the use of IaC, as there are simply too many elements to manage at each layer. It is impractical to keep track of them with the traditional method of raising tickets and having someone do it for you. Scaling demands, elasticity during odd hours, and usage-based billing all require provisioning, managing, and destroying infrastructure much more dynamically.

According to the book “Amazon Web Services in Action” by Andreas and Michael Wittig [1], using a script or a declarative description has the following advantages:

- Consistent usage

- Dependencies are handled

- Replicable

- Customizable

- Testable

- Can figure out updated state

- Minimizes human failure

- Documentation for your infrastructure

Sometimes IaC tools are also called orchestration tools, but that label is less accurate and often misleading.

How IaC is related to DevOps

DevOps has the following key practices:

- Automated Infrastructure

- Automated Configuration Management, including Security

- Shared version control between Dev and Ops

- Continuous Build - Integrate - Test - Deploy

- Continuous Monitoring and Observability

The first practice, Automated Infrastructure, can be fulfilled by IaC tools. Keeping the code for IaC and configuration management in the same repository as the application code ensures adherence to the practice of shared version control.

Typically, the workflow of the DevOps team includes running Configuration Management tool scripts after running IaC tools, for configurations, security, connectivity, and initializations.

How IaC tools differ from Configuration Management Tools, and how they are related

There are four broad categories of such tools [2]:

- Ad hoc scripts: any shell, Python, Perl, or Lua scripts

- Configuration management tools: Chef, Puppet, Ansible, SaltStack

- Server templating tools: Docker, Packer, Vagrant

- Server provisioning tools: Terraform, Heat, CloudFormation, Cloud Deployment Manager, Azure Resource Manager

Configuration Management tools make use of scripts to achieve a state. IaC tools maintain state and metadata created in the past.

The big difference, however, is that the state achieved by running procedural code or scripts may differ from the state at the time the infrastructure was created. There are two reasons:

- Ordering of the scripts determines the state. If the order changes, state will differ. Also, issues like waiting time required for resources to be created, modified or destroyed have to be correctly dealt with.

- Version changes in procedural code are inevitable, and will lead to a different state.
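The waiting problem in the first point is something procedural scripts must handle explicitly, typically by polling until a resource reports that it is ready. The generic sketch below illustrates this. Here, is_ready is a hypothetical stand-in for whatever status check a real provider offers. The timeout and interval defaults are arbitrary choices.

```python
import time


def wait_until(is_ready, timeout=300.0, interval=5.0, sleep=time.sleep):
    """Poll is_ready() until it returns True or `timeout` seconds elapse.

    Returns the number of checks performed; raises TimeoutError on expiry.
    `sleep` is injectable so the loop can be exercised without waiting.
    """
    deadline = time.monotonic() + timeout
    checks = 0
    while True:
        checks += 1
        if is_ready():
            return checks
        if time.monotonic() >= deadline:
            raise TimeoutError(f"resource not ready after {checks} checks")
        sleep(interval)
```

A declarative tool performs this kind of waiting internally. A hand-written script has to get it right at every step where one resource depends on another.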

Chef and Ansible are more procedural, while Terraform, CloudFormation, SaltStack, Puppet and Heat are more declarative.

IaC or declarative tools do, however, suffer from inflexibility, since they lack the expressiveness of a scripting language.

Listing of IaC Tools

Cloud-specific IaC tools include:

- Amazon AWS - AWS CloudFormation

- Google Cloud - Cloud Deployment Manager

- Microsoft Azure - Azure Resource Manager

- OpenStack - Heat

Terraform is not cloud specific; it is multi-vendor and has good support for the major clouds. However, Terraform scripts are not portable across clouds.

Advantages of IaC

IaC solves the problem of environment drift, which used to lead to the infamous “but it works on my machine” kind of errors that are difficult to trace.

According to ???, IaC guarantees idempotence, meaning a known, predictable end state irrespective of the starting state. Idempotency is achieved either by automatically configuring an existing target or by discarding the existing target and recreating a fresh environment.
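The declarative, idempotent behavior can be sketched as a compare-and-converge step:

1. Compare the desired state with the recorded current state.
2. Derive a plan of actions.
3. Apply the plan.

This is a toy model in plain Python, not how any particular tool such as Terraform is implemented. Resources are just dictionaries, and changed resources are updated in place, which corresponds to the first of the two approaches above. Whatever the starting state, applying the plan yields the desired state, and planning again from there yields no actions.

```python
def plan(desired: dict, current: dict) -> list:
    """Compute the actions that move `current` to `desired`.

    Both map a resource name to its attributes, e.g. {"web": {"size": "small"}}.
    """
    actions = []
    for name, attrs in desired.items():
        if name not in current:
            actions.append(("create", name, attrs))
        elif current[name] != attrs:
            actions.append(("update", name, attrs))
    for name in current:
        if name not in desired:
            actions.append(("delete", name, None))
    return actions


def apply_plan(actions: list, current: dict) -> dict:
    """Return the new state after performing the planned actions."""
    state = dict(current)
    for action, name, attrs in actions:
        if action == "delete":
            del state[name]
        else:  # "create" or "update"
            state[name] = attrs
    return state
```

For example, starting from {"old": {"size": "small"}} with a desired state of {"web": {"size": "small"}}, the plan creates web and deletes old. A second plan against the result is empty.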

Further Reading

Please see books and resources like “Terraform: Up and Running” [2] for more real-world advice on IaC, structuring Terraform code, and good deployment practices.

A good resource for IaC is the book “Infrastructure as Code” [3].

References

[1] A. Wittig and M. Wittig, Amazon Web Services in Action, 1st ed. Manning Publications, 2015.

[2] Y. Brikman, Terraform: Up and Running, 1st ed. O’Reilly Media, 2017.

[3] K. Morris, Infrastructure as Code, 1st ed. O’Reilly Media, 2015.

4.3 - Ansible

Introduction to Ansible

Ansible is an open-source IT automation DevOps engine allowing you to manage and configure many compute resources in a scalable, consistent and reliable way.

Ansible automates the following tasks:

- Provisioning: It sets up the servers that you will use as part of your infrastructure.

- Configuration management: You can change the configuration of an application, OS, or device. You can implement security policies and other configuration tasks.

- Service management: You can start and stop services and install updates.

- Application deployment: You can conduct application deployments in an automated fashion that integrates with your DevOps strategies.

Prerequisite

We assume that you:

- can install an Ubuntu 18.04 virtual machine in VirtualBox,

- can install software packages via the apt-get tool on an Ubuntu virtual host,

- have already reserved a virtual cluster (with at least one virtual machine in it) on some cloud, or can use VMs installed in VirtualBox instead, and

- have SSH credentials and can log in to your virtual machines.

Setting up a playbook

Let us develop a sample from scratch, based on the paradigms that Ansible supports. We are going to use Ansible to install the Apache web server on our virtual machines.

First, we update the package index and install Ansible on our machine:

$ sudo apt-get update

$ sudo apt-get install ansible

Next, we prepare a working directory for our Ansible example:

$ mkdir ansible-apache

$ cd ansible-apache

To use Ansible, we will need a local configuration. When you execute Ansible within this folder, this local configuration file takes precedence over the system-level Ansible configuration, because Ansible uses only the first configuration file it finds. In general, it is beneficial to keep custom configurations local unless you are certain they should be applied system wide. Create a file named ansible.cfg in this folder (Ansible looks for exactly this file name in the current directory) and add the following:

[defaults]

inventory = hosts.txt

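This local configuration file tells Ansible that the target machines are listed in a file named hosts.txt. (Older Ansible releases used the key hostfile for this setting; current releases use inventory.) Next, we specify the hosts in that file.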
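To see which configuration file Ansible picks up, recall the documented search order: the file named in the ANSIBLE_CONFIG environment variable, then ansible.cfg in the current directory, then ~/.ansible.cfg, and finally /etc/ansible/ansible.cfg; the first file found is used and the others are ignored. The Python sketch below mimics that lookup and reads the inventory setting from the [defaults] section. It is an illustration only, not Ansible's own code; the function names are hypothetical, and it accepts both the current inventory key and the legacy hostfile key. On a real system, `ansible --version` reports the config file in use.

```python
import configparser
from pathlib import Path
from typing import Mapping, Optional


def find_ansible_config(cwd: Path, env: Mapping[str, str], home: Path) -> Optional[Path]:
    """Return the first existing config file, following Ansible's documented search order."""
    candidates = []
    if env.get("ANSIBLE_CONFIG"):
        candidates.append(Path(env["ANSIBLE_CONFIG"]))       # explicit override
    candidates.append(cwd / "ansible.cfg")                   # project-local file
    candidates.append(home / ".ansible.cfg")                 # per-user file
    candidates.append(Path("/etc/ansible/ansible.cfg"))      # system-wide file
    for path in candidates:
        if path.is_file():
            return path  # the first match wins; files are not merged
    return None


def inventory_from_config(cfg_path: Path) -> Optional[str]:
    """Read the inventory file name from [defaults] ('inventory' key, or legacy 'hostfile')."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read(cfg_path)
    return (parser.get("defaults", "inventory", fallback=None)
            or parser.get("defaults", "hostfile", fallback=None))

# Usage, from your project folder:
# import os
# cfg = find_ansible_config(Path.cwd(), os.environ, Path.home())
# print(cfg, inventory_from_config(cfg) if cfg else None)
```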

As part of our prerequisites, you should have SSH login access to all VMs listed in this file. Now create the file hosts.txt with the following content:

[apache]

<server_ip> ansible_ssh_user=<server_username>

The name apache in the brackets defines a server group name. We use this name to refer to all servers in this group. As we intend to install and run Apache on these servers, the name is quite appropriate. Fill in the IP addresses of the virtual machines you launched in VirtualBox, and start these VMs.
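The inventory above is plain INI-style text: a [group] header followed by one host per line, optionally with key=value host variables. As a rough illustration of that structure, here is a minimal Python parser. It is a simplified, hypothetical helper, not Ansible's inventory plugin: it ignores features such as [group:vars], [group:children] and host ranges, and the IP addresses in the example are placeholders. To see how Ansible itself interprets your file, run `ansible-inventory -i hosts.txt --list`.

```python
from typing import Dict, List, Tuple

Host = Tuple[str, Dict[str, str]]


def parse_inventory(text: str) -> Dict[str, List[Host]]:
    """Parse '[group]' headers and 'host key=value ...' lines into {group: [(host, vars)]}."""
    groups: Dict[str, List[Host]] = {}
    current = "ungrouped"                      # hosts listed before any header
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue                           # skip blank lines and comments
        if line.startswith("[") and line.endswith("]"):
            current = line[1:-1].strip()
            groups.setdefault(current, [])
            continue
        host, *pairs = line.split()
        host_vars: Dict[str, str] = {}
        for pair in pairs:
            key, sep, value = pair.partition("=")
            if not sep:
                raise ValueError(f"expected key=value after host {host!r}, got {pair!r}")
            host_vars[key] = value
        groups.setdefault(current, []).append((host, host_vars))
    return groups


example = """
[apache]
192.168.56.10 ansible_ssh_user=ubuntu
192.168.56.11 ansible_ssh_user=ubuntu
"""
print(parse_inventory(example)["apache"][0])
# ('192.168.56.10', {'ansible_ssh_user': 'ubuntu'})
```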

To deploy the service, we need to create a playbook. A playbook tells Ansible what to do; it uses YAML syntax. Create a file with a descriptive name, e.g. apache.yml, as follows:

---
- hosts: apache      # apache is the group name we just defined
  become: yes        # this operation needs privileged access
  tasks:
    - name: install apache2    # text description
      apt: name=apache2 update_cache=yes state=latest

This block defines the target VMs and the operations (tasks) to apply to them. We use the apt module to specify the software package that needs to be installed. Note that apt works only on Debian-based systems such as Ubuntu. If you want a playbook that works across different operating systems, Ansible's generic package module selects the package manager of the target host for you, although package names may still differ between distributions.

Ansible relies on various kinds of modules to fulfil tasks on the remote servers. These modules are developed for particular tasks and take related arguments. For instance, when we use the apt module, we need to tell it which package we intend to install; that is why we provide a value for the name= argument. The - name attribute at the start of the task is just a description that is printed when the task is executed.
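The one-line form `apt: name=apache2 update_cache=yes state=latest` is shorthand for passing the module a set of named arguments; it is equivalent to writing them as a YAML mapping under `apt:` (name: apache2, update_cache: yes, state: latest). The small Python sketch below splits such a string into a dictionary to make that mapping explicit. It is a simplified, hypothetical helper: Ansible's own argument parser handles more cases, such as free-form commands and Jinja2 expressions.

```python
import shlex
from typing import Dict


def parse_module_args(args: str) -> Dict[str, str]:
    """Split Ansible-style 'key=value' shorthand into a dict of module arguments."""
    result: Dict[str, str] = {}
    for token in shlex.split(args):        # shlex keeps quoted values with spaces together
        key, sep, value = token.partition("=")
        if not sep:
            raise ValueError(f"expected key=value, got {token!r}")
        result[key] = value
    return result


print(parse_module_args("name=apache2 update_cache=yes state=latest"))
# {'name': 'apache2', 'update_cache': 'yes', 'state': 'latest'}
```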

Run the playbook

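In the same folder, execute:

$ ansible-playbook apache.yml --ask-become-pass

(Older Ansible releases also accepted --ask-sudo-pass, which has been deprecated in favor of --ask-become-pass.)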

After a successful run, open a browser and enter your server's IP address. You should see the Apache2 Ubuntu Default Page with the text ‘It works!’. Make sure the security policy on your cloud opens port 80 to let HTTP traffic through.
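If you prefer to check the result from a script rather than a browser, the following Python sketch fetches the page and looks for the ‘It works!’ text of the Apache default page. The function name is hypothetical and the sketch uses only the standard library; it returns False when the request fails, for example because port 80 is still blocked.

```python
import urllib.request


def shows_apache_default_page(url: str, timeout: float = 5.0) -> bool:
    """Return True if url answers with HTTP 200 and a body containing 'It works!'."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            status = response.status
            body = response.read().decode("utf-8", errors="replace")
    except OSError:  # URLError (including HTTPError), refused connections and timeouts
        return False
    return status == 200 and "It works!" in body

# Usage (replace <server_ip> with your server's IP address):
# print(shows_apache_default_page("http://<server_ip>/"))
```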

Ansible playbooks can have a more complex structure and syntax. Go explore! This example is based on:

We present more advanced Ansible features next.

Ansible Roles

Next, we install the R package onto our cloud VMs. R is a statistical programming language commonly used in many scientific and statistical computing projects, possibly also the one you chose for this class. With this example we illustrate the concept of Ansible roles, install source code from GitHub, and make use of variables. These are key features you will find useful in your project deployments.

We take a top-down approach in this example. We start from a playbook that is already good to go. You can execute this playbook (do not do it yet; always read the entire section first) to get R installed on your remote hosts. We then extend this concise playbook with features that do the same tasks in different ways. Although these alternatives are not necessary here, they help you grasp the power of Ansible and make your life easier when you need them in real projects.

Let us now create the following playbook with the name example.yml:

---
- hosts: R_hosts
  become: yes
  tasks:
    - name: install the R package
      apt: name=r-base update_cache=yes state=latest

The hosts are defined in the file hosts.txt, which our local ansible.cfg points to as before. Its content is:

[R_hosts]

<cloud_server_ip> ansible_ssh_user=<cloud_server_username>

This playbook already gets the installation job done. Next, we extend it with a feature called roles.

Roles are an important concept often used in large Ansible projects. You divide a series of tasks into different groups, and each group corresponds to a certain role within the project.

For example, if your project is to deploy a website, you may need to install the back-end database, the web server that responds to HTTP requests, and the web application itself. These are three different roles, and each should carry out its own installation and configuration tasks.

Even though we only need to install the R package in this example, we can still do it by defining a role ‘r’. Let us modify our example.yml to be:

---
- hosts: R_hosts
  roles:
    - r

Now we create the following directory structure in the top-level project directory:

$ mkdir -p roles/r/tasks

$ touch roles/r/tasks/main.yml

Next, we edit the main.yml file and include the following content:

---
- name: install the R package
  apt: name=r-base update_cache=yes state=latest
  become: yes

You probably already get the point. We take the ‘tasks’ section out of the earlier example.yml and reorganize it into roles. Each role specified in example.yml has its own directory under roles/, and the tasks performed by that role are listed in the file ‘tasks/main.yml’, as before.
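The same layout can also be created from a script. The Python sketch below is a hypothetical helper (not part of Ansible) that writes example.yml and roles/r/tasks/main.yml with the content shown above, which can be handy when you set up many similar roles. Like `mkdir -p`, it creates missing parent directories; note that it overwrites existing files.

```python
from pathlib import Path

PLAYBOOK = """---
- hosts: R_hosts
  roles:
    - r
"""

ROLE_TASKS = """---
- name: install the R package
  apt: name=r-base update_cache=yes state=latest
  become: yes
"""


def scaffold_r_role(project: Path) -> Path:
    """Write example.yml and roles/r/tasks/main.yml under project; return the tasks file path."""
    tasks_file = project / "roles" / "r" / "tasks" / "main.yml"
    tasks_file.parent.mkdir(parents=True, exist_ok=True)   # same effect as mkdir -p
    tasks_file.write_text(ROLE_TASKS)
    (project / "example.yml").write_text(PLAYBOOK)
    return tasks_file

# Usage, from your top-level project directory:
# scaffold_r_role(Path("."))
```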

Using Variables

We demonstrate this feature by installing software from its source code on GitHub. Although R can be installed through the OS package manager (apt-get etc.), the software used in your projects may not be. Many research projects are available only via Git. Here we show you how to install packages from their Git repositories. Instead of using the ‘apt’ module, we pretend that Ubuntu does not provide this package and that you have to get it from Git. The source code of R can be found at https://github.com/wch/r-source.git. We are going to clone it to a remote VM’s hard drive, build the package, and install the binary there.

To do so, we need a few new Ansible modules. You may remember from the last example that Ansible modules help us perform different tasks based on the arguments we pass to them. It comes as no surprise that Ansible has a ‘git’ module to take care of Git-related work, and a ‘command’ module to run commands. Let us modify roles/r/tasks/main.yml to be:

---
- name: get R package source
  git:
    repo: https://github.com/wch/r-source.git
    dest: /tmp/R

- name: build and install R
  become: yes
  command: "{{ item }}"
  args:
    chdir: /tmp/R        # run each command inside the cloned source tree
  with_items:
    - ./configure
    - make
    - make install

The role r now carries out two tasks. The first clones the R source code into /tmp/R; the second runs a series of commands to build and install the package.

Note that the commands executed by the second task may not be available on a fresh VM image. But the point of this example is to show an alternative way to install packages, so we conveniently assume the conditions are all met.

Next, we move the values used in these tasks into variables defined in a separate file.

So far we have typed several string constants directly into our Ansible scripts. In general, it is good practice to give these values names and refer to them by name. This way, your complex Ansible project becomes less error prone. Create a file in the project directory (next to example.yml) and name it vars.yml:

---

repository: https://github.com/wch/r-source.git

tmp: /tmp/R

Accordingly, we will update our example.yml:

---
- hosts: R_hosts
  vars_files:
    - vars.yml
  roles:
    - r

As shown, the vars_files entry tells Ansible that the file vars.yml supplies variable values. We refer to a variable by putting its name in double curly braces, as in roles/r/tasks/main.yml:

---
- name: get R package source
  git:
    repo: "{{ repository }}"
    dest: "{{ tmp }}"

- name: build and install R
  become: yes
  command: "{{ item }}"
  args:
    chdir: "{{ tmp }}"
  with_items:
    - ./configure
    - make
    - make install
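Ansible evaluates `{{ ... }}` expressions with the Jinja2 template engine. To illustrate only the basic idea (look up each name and substitute its value), here is a deliberately tiny Python stand-in that reads a flat vars.yml like the one above and substitutes simple `{{ name }}` placeholders. It is not how Ansible is implemented: it supports no filters, expressions or nested data, and the function names are hypothetical. Like Ansible's default behavior, it fails on an undefined variable instead of silently inserting an empty string.

```python
import re
from typing import Dict

PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def load_flat_vars(text: str) -> Dict[str, str]:
    """Read flat 'key: value' lines such as vars.yml above (no nesting, lists or quoting)."""
    variables: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line == "---" or line.startswith("#"):
            continue
        key, _, value = line.partition(":")   # split at the first colon only
        variables[key.strip()] = value.strip()
    return variables


def render(template: str, variables: Dict[str, str]) -> str:
    """Replace {{ name }} placeholders; an undefined name raises KeyError."""
    return PLACEHOLDER.sub(lambda m: variables[m.group(1)], template)


VARS_YML = """---
repository: https://github.com/wch/r-source.git
tmp: /tmp/R
"""
print(render('repo: "{{ repository }}"', load_flat_vars(VARS_YML)))
# repo: "https://github.com/wch/r-source.git"
```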

Now, just edit the hosts.txt file with your target VMs' IP addresses and

execute the playbook.

You should now be able to extend the Ansible playbook for your needs. Configuration management tools like Ansible are important for mastering cloud environments.

Ansible Galaxy

Ansible Galaxy is a marketplace where developers can share Ansible roles for completing their system administration tasks. Roles exchanged in the Ansible Galaxy community need to follow common conventions so that all participants know what to expect. We illustrate the details in this section.

It is good to follow the Ansible Galaxy standard during your development as much as possible.

Ansible Galaxy helloworld

Let us start with the simplest case: we build an Ansible Galaxy project that installs the Emacs software package, using your localhost as the target host. It is a helloworld project only meant to get us familiar with the structure of Ansible Galaxy projects.

First you need to create a directory. Let us call it mongodb:

$ mkdir mongodb

Inside it, create the files README.md, playbook.yml and inventory, and a subdirectory roles/. playbook.yml is your project playbook. It should perform the Emacs installation task by executing the corresponding role you will develop in the folder ‘roles/’. The only difference from before is that this time we construct the role with the help of ansible-galaxy.

Now, let ansible-galaxy initialize the directory structure for you:

$ cd roles

$ ansible-galaxy init <to-be-created-role-name>

The naming convention is to join your name and the role name with a dot. @fig:ansible shows what the result looks like.

{#fig:ansible}


Let us fill in the information for our project. There are several main.yml files in different folders; we explain their purposes below.

defaults and vars:

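These folders hold variable key-value pairs for your playbook scripts. defaults/ holds default values with the lowest precedence, which users of the role can easily override, while vars/ holds values with higher precedence. We leave them empty in this example.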

files:

This folder holds files that need to be copied to the target hosts, such as data files or configuration files. We leave it empty too.

templates:

Similar to files/, templates/ holds template files, which Ansible fills in with variable values before copying them to the target hosts. Keep it empty for a simple Emacs installation.

handlers:

This folder holds handlers: tasks that run only when another task notifies them, typically to start or restart a service on the target hosts, for example after its configuration has changed.

tasks:

tasks/main.yml contains the actual tasks of the role. Here we install Emacs with the generic package module:

---
- name: install Emacs on Ubuntu 16.04
  become: yes
  package: name=emacs state=present

meta:

Provide the metadata needed to ship our Ansible Galaxy project:

---
galaxy_info:
  author: <your name>
  description: emacs installation on Ubuntu 16.04
  license:
    - MIT
  min_ansible_version: 2.0
  platforms:
    - name: Ubuntu
      versions:
        - xenial
  galaxy_tags:
    - development
dependencies: []

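Next, let us test it out. Your Ansible Galaxy role is ready now. To test it as a user, go to your project directory and edit the two files inventory and playbook.yml that you created earlier: inventory should list localhost as the target, and playbook.yml should apply your role. (ansible-galaxy also generated a similar pair of files, inventory and test.yml, in the tests folder of the role.) Then run:

$ ansible-playbook -i inventory playbook.yml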
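For a localhost test like this, the inventory only needs to name localhost and tell Ansible to run the tasks locally instead of over SSH, which is what the ansible_connection=local host variable does. The Python sketch below writes such an inventory and a matching playbook.yml. The helper and the role name yourname.emacs are hypothetical placeholders; substitute the name of the role you created with ansible-galaxy init.

```python
from pathlib import Path


def write_local_test(project: Path, role_name: str) -> None:
    """Write an inventory targeting localhost and a playbook.yml that applies role_name."""
    (project / "inventory").write_text(
        "[local]\n"
        "localhost ansible_connection=local\n"   # run tasks locally, no SSH needed
    )
    (project / "playbook.yml").write_text(
        "---\n"
        "- hosts: local\n"
        "  roles:\n"
        f"    - {role_name}\n"
    )

# Usage, from the project directory:
# write_local_test(Path("."), "yourname.emacs")
# then run: ansible-playbook -i inventory playbook.yml
```

Because the Emacs task uses become: yes, add --ask-become-pass to the command if sudo on your machine asks for a password.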

After running this playbook, you should have Emacs installed on localhost.

A Complete Ansible Galaxy Project

We are going to use ansible-galaxy to set up a sample project. This sample project will:

- use a cloud cluster with multiple VMs

- deploy Apache Spark on this cluster

- install a particular HPC application

- prepare raw data for this cluster to process

- run the experiment and collect results

Ansible: Writing a Playbook for MongoDB

Ansible playbooks are automation scripts written in the YAML data format. Instead of using manual commands to set up multiple remote machines, you can use Ansible playbooks to configure entire systems. YAML syntax is easy to read and expresses the data structures of Ansible functions. You simply write some tasks, for example installing software, configuring default settings, and starting the software, in an Ansible playbook. With a few examples in this section, you will understand how it works and how to write your own playbooks.

There are also several examples of Ansible playbooks on the official site. They cover everything from basic usage of Ansible playbooks to advanced usage such as applying patches and updates with different roles and groups.

We are going to write a basic Ansible playbook. Keep in mind that Ansible is the main program, while a playbook is a description of the tasks you want Ansible to carry out. You may have several playbooks in one Ansible project.

First playbook for MongoDB Installation

As a first example, we are going to write a playbook that installs the MongoDB server. It includes the following tasks:

- Import the public key used by the package management system

- Create a list file for MongoDB

- Reload local package database

- Install the MongoDB packages

- Start MongoDB

The material presented here is based on the manual installation of MongoDB from the official site:

We also assume that we install MongoDB on Ubuntu 15.10.

Enabling Root SSH Access

Some setups of managed nodes may not allow you to log in as root. As this may be problematic later, let us create a playbook to resolve this. Create an enable-root-access.yaml file with the following contents:

---
- hosts: ansible-test
  remote_user: ubuntu
  tasks:
    - name: Enable root login
      shell: sudo cp ~/.ssh/authorized_keys /root/.ssh/

Explanation:

- hosts specifies the name of a group of machines in the inventory

- remote_user specifies the username on the managed nodes to log in as

- tasks is a list of tasks to accomplish, each having a name (a description) and a module to execute. In this case we use the shell module.

We can run this playbook like so:

$ ansible-playbook -i inventory.txt -c ssh enable-root-access.yaml

PLAY [ansible-test] ***********************************************************

GATHERING FACTS ***************************************************************

ok: [10.23.2.105]

ok: [10.23.2.104]

TASK: [Enable root login] *****************************************************

changed: [10.23.2.104]

changed: [10.23.2.105]

PLAY RECAP ********************************************************************

10.23.2.104 : ok=2 changed=1 unreachable=0 failed=0

10.23.2.105 : ok=2 changed=1 unreachable=0 failed=0
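When you run playbooks from scripts or a CI job, it can be useful to check the PLAY RECAP lines programmatically. The Python sketch below extracts the per-host counters from output like the one above. It is a hypothetical helper that relies on the human-readable output format, which can vary between Ansible versions (newer versions print extra counters such as skipped and rescued, which the sketch also accepts); for robust automation, Ansible's machine-readable output callbacks, such as the json stdout callback, are a better fit.

```python
import re
from typing import Dict

RECAP_LINE = re.compile(r"^(?P<host>\S+)\s*:\s*(?P<stats>(?:\w+=\d+\s*)+)$")


def parse_recap(output: str) -> Dict[str, Dict[str, int]]:
    """Return {host: {counter: value}} from the PLAY RECAP section of ansible-playbook output."""
    recap: Dict[str, Dict[str, int]] = {}
    in_recap = False
    for raw in output.splitlines():
        line = raw.strip()
        if line.startswith("PLAY RECAP"):
            in_recap = True            # counters only appear after this header
            continue
        match = RECAP_LINE.match(line) if in_recap else None
        if match:
            pairs = (item.split("=") for item in match.group("stats").split())
            recap[match.group("host")] = {key: int(value) for key, value in pairs}
    return recap
```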

Hosts and Users

The first step is choosing the hosts on which to install MongoDB and a user account to run the commands (tasks). We start with the following lines in the example file mongodb.yaml:

---
- hosts: ansible-test
  remote_user: root
  become: yes

In a previous section, we set up two machines with the group name ansible-test. We use both machines for the MongoDB installation. We also use the root account to complete the Ansible tasks.

- Indentation is important in YAML format. Do not ignore the leading spaces on each line.

Tasks

A list of tasks contains commands or configurations to be executed on remote machines in sequential order. Each task comes with a name and a module to run your command or configuration. You provide a description of your task in the name section and choose a module for your task. There are several modules that you can use. For example, the shell module runs a command through the shell on the remote machine; because Ansible cannot tell what the command changed, it reports the task as changed every time it runs. You may use the apt or yum module, which are packaging modules, to install software. You can find an entire list of modules here: http://docs.ansible.com/list_of_all_modules.html

Module apt_key: add repository keys

We need to import the MongoDB public GPG key. This is the first task in our playbook:

tasks:
  - name: Import the public key used by the package management system
    apt_key: keyserver=hkp://keyserver.ubuntu.com:80 id=7F0CEB10 state=present

Module apt_repository: add repositories

Next, add the MongoDB repository to apt:

- name: Add MongoDB repository
  apt_repository: repo='deb http://downloads-distro.mongodb.org/repo/ubuntu-upstart dist 10gen' state=present

Module apt: install packages

We use the apt module to install the mongodb-org package. A notify action is added to start mongod after the completion of this task; the notified handler runs only if the task reports a change. Use the update_cache=yes option to reload the local package database:

- name: install mongodb
  apt: pkg=mongodb-org state=latest update_cache=yes
  notify:
    - start mongodb

Module service: manage services

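We use handlers here to start or restart services. Handlers are similar to tasks, but they run only when notified by a task that reported a change, and only once, after the tasks of the play, no matter how many tasks notified them: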

handlers:
  - name: start mongodb
    service: name=mongod state=started
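The notify/handler interplay can be summarized with a small simulation. The Python code below is a toy model written for this explanation, not Ansible code; Task and run_play are hypothetical names, and "install tools" in the usage example is a made-up second task. It follows the behavior described above: only tasks that report a change notify a handler, and each notified handler runs once, after the tasks, in the order the handlers are defined. Consequently, when a playbook is rerun and nothing changes, its handlers are not triggered again.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class Task:
    name: str
    action: Callable[[], bool]                 # returns True if the task changed something
    notify: List[str] = field(default_factory=list)


def run_play(tasks: List[Task], handlers: Dict[str, Callable[[], None]]) -> List[str]:
    """Toy model of a play: run tasks in order, then each notified handler once."""
    log: List[str] = []
    notified: Set[str] = set()
    for task in tasks:
        changed = task.action()
        log.append(f"{task.name}: {'changed' if changed else 'ok'}")
        if changed:                            # unchanged tasks do not notify
            notified.update(task.notify)
    for name, handler in handlers.items():     # handlers run in the order they are defined
        if name in notified:
            handler()
            log.append(f"handler {name}: ran")
    return log


started: List[str] = []
print(run_play(
    [Task("install mongodb", lambda: True, ["start mongodb"]),
     Task("install tools", lambda: True, ["start mongodb"])],
    {"start mongodb": lambda: started.append("mongod")},
))
# ['install mongodb: changed', 'install tools: changed', 'handler start mongodb: ran']
```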

The Full Playbook

Our first playbook looks like this:

---
- hosts: ansible-test
  remote_user: root
  become: yes
  tasks:
    - name: Import the public key used by the package management system
      apt_key: keyserver=hkp://keyserver.ubuntu.com:80 id=7F0CEB10 state=present
    - name: Add MongoDB repository
      apt_repository: repo='deb http://downloads-distro.mongodb.org/repo/ubuntu-upstart dist 10gen' state=present
    - name: install mongodb
      apt: pkg=mongodb-org state=latest update_cache=yes
      notify:
        - start mongodb
  handlers:
    - name: start mongodb
      service: name=mongod state=started

Running a Playbook

We use the ansible-playbook command to run our playbook:

$ ansible-playbook -i inventory.txt -c ssh mongodb.yaml

PLAY [ansible-test] ***********************************************************

GATHERING FACTS ***************************************************************

ok: [10.23.2.104]

ok: [10.23.2.105]

TASK: [Import the public key used by the package management system] ***********

changed: [10.23.2.104]

changed: [10.23.2.105]

TASK: [Add MongoDB repository] ************************************************

changed: [10.23.2.104]

changed: [10.23.2.105]

TASK: [install mongodb] *******************************************************

changed: [10.23.2.104]

changed: [10.23.2.105]

NOTIFIED: [start mongodb] *****************************************************

ok: [10.23.2.105]

ok: [10.23.2.104]

PLAY RECAP ********************************************************************

10.23.2.104 : ok=5 changed=3 unreachable=0 failed=0

10.23.2.105 : ok=5 changed=3 unreachable=0 failed=0

If you rerun the playbook, you should see that nothing changed:

$ ansible-playbook -i inventory.txt -c ssh mongodb.yaml

PLAY [ansible-test] ***********************************************************

GATHERING FACTS ***************************************************************

ok: [10.23.2.105]

ok: [10.23.2.104]

TASK: [Import the public key used by the package management system] ***********

ok: [10.23.2.104]

ok: [10.23.2.105]

TASK: [Add MongoDB repository] ************************************************

ok: [10.23.2.104]

ok: [10.23.2.105]

TASK: [install mongodb] *******************************************************

ok: [10.23.2.105]

ok: [10.23.2.104]

PLAY RECAP ********************************************************************

10.23.2.104 : ok=4 changed=0 unreachable=0 failed=0

10.23.2.105 : ok=4 changed=0 unreachable=0 failed=0
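The second run reports changed=0 because Ansible modules are idempotent: they first check whether the desired state already holds and only act when it does not. The Python sketch below shows that pattern for one simple case, making sure a line (here the MongoDB repository line) is present in a file. It is a hypothetical helper that illustrates the idea; it is not the implementation of apt_repository or of any other Ansible module.

```python
from pathlib import Path

REPO_LINE = "deb http://downloads-distro.mongodb.org/repo/ubuntu-upstart dist 10gen"


def ensure_line(path: Path, line: str) -> bool:
    """Make sure line is present in path; return True only if the file had to change."""
    text = path.read_text() if path.exists() else ""
    if line in text.splitlines():
        return False                            # desired state already reached: "ok"
    separator = "" if text == "" or text.endswith("\n") else "\n"
    path.write_text(text + separator + line + "\n")
    return True                                 # file modified: "changed"
```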

Sanity Check: Test MongoDB

Let us try to run ‘mongo’ to enter the MongoDB shell:

$ ssh ubuntu@$IP

$ mongo

MongoDB shell version: 2.6.9

connecting to: test

Welcome to the MongoDB shell.

For interactive help, type "help".

For more comprehensive documentation, see

http://docs.mongodb.org/

Questions? Try the support group

http://groups.google.com/group/mongodb-user

>

Terms

- Module: an Ansible library to run or manage services, packages, files, or commands.

- Handler: a task that runs only when notified by another task.

- Task: an Ansible job to run a command, check files, or update configurations.

- Playbook: a list of tasks for Ansible nodes, written in YAML format.

- YAML: a human-readable generic data serialization format.

Reference

The main tutorial from Ansible is here: http://docs.ansible.com/playbooks_intro.html

You can also find an index of the ansible modules here: http://docs.ansible.com/modules_by_category.html

Exercise

We have shown a couple of examples of using Ansible tools. Before you apply them in your final project, practice them in this exercise:

- set up the project structure similar to the Ansible Galaxy example

- install MongoDB from the package manager (apt in this class)

- configure your MongoDB installation to start the service automatically

- use default port and let it serve local client connections only

4.4 - Puppet

Overview

Configuration management is an important task of the IT department in any organization. It is the process of managing infrastructure changes in a structured and systematic way. Manually rolling back infrastructure to a previous version of software is cumbersome, time consuming and error prone. Puppet is a configuration management tool that simplifies the complex tasks of deploying new software, applying software updates, and rolling back software packages in large clusters. Puppet does this through Infrastructure as Code (IaC): code describing the infrastructure is written in one central location and is pushed to nodes in all environments (Dev, Test, Production) using the Puppet tool. Configuration management tools follow one of two approaches for managing infrastructure: configuration push and configuration pull. In push configuration, infrastructure as code is pushed from a centralized server to the nodes, whereas in pull configuration the nodes pull infrastructure as code from the central server, as shown in fig. 1.

![Figure 1: Infrastructure As Code [1]](../images/IAC.jpg)

Puppet uses push and pull configuration in a centralized manner, as shown in fig. 2.

![Figure 2: push-pull-config Image [1]](../images/push-pull-configuration.jpg)

Another popular infrastructure tool is Ansible. It does not have master and client nodes; any node in Ansible can act as the executor. Any node that has the inventory list and SSH credentials can play the master role and connect to the other nodes, as opposed to the Puppet architecture, where server and agent software need to be set up and installed. Configuring Ansible nodes is simple: the managed nodes only need SSH access and a Python interpreter (the minimum Python version depends on the Ansible release; early releases required Python 2.5 or greater). Ansible uses a push architecture for configuration.

Master slave architecture

Puppet uses a master-slave architecture, as shown in fig. 3. The Puppet server is called the master node, and client nodes are called Puppet agents. Agents poll the server at a regular interval (every 30 minutes by default) and pull updated configuration from the master. The Puppet master can be made highly available: it supports a multi-master architecture, so if one master goes down, a backup master takes over serving the infrastructure.

Workflow

- Nodes (Puppet agents) send information about themselves, called facts (for example IP address, hardware details, network configuration), to the master.

- The master node compiles a catalog from its manifests and the agent's facts; the catalog contains the configuration that needs to be implemented on the agent node.

- The master sends the catalog to the Puppet agent nodes, which apply the configuration.

- Client nodes send an updated report back to the master, and the master updates its inventory.

- All exchanges between master and agent are secured through SSL encryption (see fig. 3).

![Figure 3: Master and Slave Architecture [1]](../images/master-slave.jpg)

fig. 4 shows the flow between master and slave.

![Figure 4: Master Slave Workflow 1 [1]](../images/master-slave1.jpg)

fig. 5 shows the SSL workflow between master and slave.

![Figure 5: Master Slave SSL Workflow [1]](../images/master-slave-connection.jpg)

Puppet comes in two forms: open source Puppet and Puppet Enterprise. In this tutorial we showcase the installation steps of both forms.

Install Opensource Puppet on Ubuntu

We demonstrate the installation of Puppet on Ubuntu.

Prerequisite: at least 4 GB RAM and an Ubuntu box (standalone or VM).

First, we need to make sure that the Puppet master and agent are able to communicate with each other. The agent should be able to connect to the master by name.

Configure the Puppet server name and map it to its IP address:

$ sudo nano /etc/hosts

The contents of /etc/hosts should include a line like:

<ip_address> my-puppet-master

my-puppet-master is the name of the Puppet master to which the Puppet agent will try to connect.

Press <ctrl> + O to save and <ctrl> + X to exit.

Next, we install Puppet on the Ubuntu server. We execute the following commands to pull packages from the official Puppet Labs repository:

$ curl -O https://apt.puppetlabs.com/puppetlabs-release-pc1-xenial.deb

$ sudo dpkg -i puppetlabs-release-pc1-xenial.deb

$ sudo apt-get update

Install the Puppet server:

$ sudo apt-get install puppetserver

The default installation of Puppet server is configured to use 2 GB of RAM. However, we can customize this by opening the puppetserver configuration file:

$ sudo nano /etc/default/puppetserver

This opens the file in an editor. Look for the JAVA_ARGS line and change the values of the -Xms and -Xmx parameters to 3g if we wish to configure Puppet server to use 3 GB of RAM. Note that the default value of these parameters is 2g.

JAVA_ARGS="-Xms3g -Xmx3g -XX:MaxPermSize=256m"

Press <ctrl> + O to save and <ctrl> + X to exit.
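If you manage several Puppet servers, editing this line by hand gets repetitive. The Python sketch below performs the same edit on the text of /etc/default/puppetserver: it rewrites the -Xms and -Xmx values on the JAVA_ARGS line and leaves everything else untouched. The function name is hypothetical, and it assumes the JAVA_ARGS line looks like the one shown above. Writing the real file requires root privileges, and Puppet server only picks up the change after a restart (sudo systemctl restart puppetserver).

```python
import re

SIZE = re.compile(r"^[0-9]+[kKmMgG]?$")


def set_heap_size(defaults_text: str, size: str) -> str:
    """Set -Xms and -Xmx on the JAVA_ARGS line of /etc/default/puppetserver text to size."""
    if not SIZE.match(size):
        raise ValueError(f"unexpected heap size {size!r}, expected e.g. '3g'")
    lines = []
    for line in defaults_text.split("\n"):
        if line.startswith("JAVA_ARGS="):
            # match only the size token so the closing quote of the line is preserved
            line = re.sub(r"-Xms[0-9]+[kKmMgG]?", "-Xms" + size, line)
            line = re.sub(r"-Xmx[0-9]+[kKmMgG]?", "-Xmx" + size, line)
        lines.append(line)
    return "\n".join(lines)
```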

By default, Puppet server uses port 8140 to communicate with agents. We need to make sure that the firewall allows communication on this port:

$ sudo ufw allow 8140

Next, we start the Puppet server:

$ sudo systemctl start puppetserver

Verify that the server has started:

$ sudo systemctl status puppetserver

We should see “active (running)” if the server has started successfully:

$ sudo systemctl status puppetserver

● puppetserver.service - puppetserver Service

Loaded: loaded (/lib/systemd/system/puppetserver.service; disabled; vendor pr

Active: active (running) since Sun 2019-01-27 00:12:38 EST; 2min 29s ago

Process: 3262 ExecStart=/opt/puppetlabs/server/apps/puppetserver/bin/puppetser

Main PID: 3269 (java)

CGroup: /system.slice/puppetserver.service

└─3269 /usr/bin/java -Xms3g -Xmx3g -XX:MaxPermSize=256m -Djava.securi

Jan 27 00:11:34 ritesh-ubuntu1 systemd[1]: Starting puppetserver Service...

Jan 27 00:11:34 ritesh-ubuntu1 puppetserver[3262]: OpenJDK 64-Bit Server VM warn

Jan 27 00:12:38 ritesh-ubuntu1 systemd[1]: Started puppetserver Service.


Configure the Puppet server to start at boot time:

$ sudo systemctl enable puppetserver
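Once the server is running, it can help to confirm from the agent machine that port 8140 on the master is actually reachable through the firewall. The Python sketch below simply tries to open a TCP connection; the function name is hypothetical, and a successful connection only shows that something is listening on the port, not that Puppet's SSL handshake will succeed.

```python
import socket


def port_open(host: str, port: int = 8140, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, name not resolvable, or timed out
        return False

# Usage, on the agent machine (uses the name configured in /etc/hosts):
# print(port_open("my-puppet-master"))
```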

Next, we install the Puppet agent:

$ sudo apt-get install puppet-agent

Start the Puppet agent:

$ sudo systemctl start puppet

Configure the Puppet agent to start at boot time:

$ sudo systemctl enable puppet

Next, we need to change the Puppet agent configuration file so that the agent can connect to and communicate with the Puppet master:

$ sudo nano /etc/puppetlabs/puppet/puppet.conf

The configuration file opens in an editor. Add the following sections to the file:

[main]

certname = <puppet-agent>

server = <my-puppet-server>

[agent]

server = <my-puppet-server>

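Note: replace <my-puppet-server> with the name of the Puppet master that we set up in the /etc/hosts file while installing the Puppet server (my-puppet-master in our example), and replace <puppet-agent> with the certname, which is the name of the agent's certificate.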
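puppet.conf uses an INI-like format, so the same settings can also be applied from a script. The Python sketch below uses configparser to add or update certname and server in [main] and server in [agent], keeping the other settings. It is a hypothetical helper with two caveats: configparser drops comments when it writes the text back, and it rejects files that repeat a setting within a section, so review the result before replacing the real /etc/puppetlabs/puppet/puppet.conf.

```python
import configparser
import io


def update_puppet_conf(existing: str, certname: str, server: str) -> str:
    """Return puppet.conf text with certname/server set in [main] and server set in [agent]."""
    conf = configparser.ConfigParser(interpolation=None)
    conf.read_string(existing)
    for section in ("main", "agent"):
        if not conf.has_section(section):
            conf.add_section(section)
    conf.set("main", "certname", certname)
    conf.set("main", "server", server)
    conf.set("agent", "server", server)
    out = io.StringIO()
    conf.write(out)
    return out.getvalue()
```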

The Puppet agent sends a certificate signing request to the Puppet server when it connects for the first time. After the request is signed, the Puppet server trusts and identifies the agent for management.

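Execute the following command on the Puppet master to see all incoming certificate signing requests (on Puppet 6 and later, the puppet cert subcommand was replaced by puppetserver ca, for example puppetserver ca list):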

$ sudo /opt/puppetlabs/bin/puppet cert list

We will see something like:

$ sudo /opt/puppetlabs/bin/puppet cert list

"puppet-agent" (SHA256) 7B:C1:FA:73:7A:35:00:93:AF:9F:42:05:77:9B:

05:09:2F:EA:15:A7:5C:C9:D7:2F:D7:4F:37:A8:6E:3C:FF:6B

Note that puppet-agent is the name that we configured for certname in the puppet.conf file.

After validating that the request comes from a valid and trusted agent, we sign the request:

$ sudo /opt/puppetlabs/bin/puppet cert sign puppet-agent

We will see a message saying that the certificate was signed if successful:

$ sudo /opt/puppetlabs/bin/puppet cert sign puppet-agent

Signing Certificate Request for:

"puppet-agent" (SHA256) 7B:C1:FA:73:7A:35:00:93:AF:9F:42:05:77:9B:05:09:2F:

EA:15:A7:5C:C9:D7:2F:D7:4F:37:A8:6E:3C:FF:6B

Notice: Signed certificate request for puppet-agent

Notice: Removing file Puppet::SSL::CertificateRequest puppet-agent

at '/etc/puppetlabs/puppet/ssl/ca/requests/puppet-agent.pem'

Next, we verify the installation and make sure that the Puppet server is able to push configuration to the agent. Puppet uses domain-specific language (DSL) code written in manifest (.pp) files.

Create the default manifest file site.pp:

$ sudo nano /etc/puppetlabs/code/environments/production/manifests/site.pp

This opens the file in edit mode. Make the following changes to this file:

file { '/tmp/it_works.txt':   # resource type file and filename
  ensure  => present,         # make sure it exists
  mode    => '0644',          # file permissions
  content => "It works!\n",   # file contents
}

Here the domain-specific language is used to create the file it_works.txt inside the /tmp directory on the agent node. The ensure attribute makes sure that the file is present; Puppet recreates it if it is removed. The mode attribute sets the file permissions: 0644 gives the owner read and write access and everyone else read-only access. The content attribute defines the content of the file [hid-sp18-523-open].
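Conceptually, Puppet's file resource compares the desired state with the actual state and changes only what differs; the log of the test run, for example, reports the old and new content as MD5 checksums. The Python sketch below imitates that convergence for the three attributes used in site.pp. It is a hypothetical toy for illustration, not how Puppet is implemented. One detail you can check against the log: d41d8cd98f00b204e9800998ecf8427e is the MD5 checksum of empty content, so in that run the file existed but was empty.

```python
import hashlib
import os
from pathlib import Path
from typing import Optional, Tuple


def ensure_file(path: Path, content: str, mode: int = 0o644) -> Tuple[bool, Optional[str], str]:
    """Converge path to content and mode; return (changed, old_md5, new_md5)."""
    desired = content.encode("utf-8")
    new_md5 = hashlib.md5(desired).hexdigest()
    old_md5 = hashlib.md5(path.read_bytes()).hexdigest() if path.exists() else None
    changed = old_md5 != new_md5
    if changed:                                    # ensure => present + content
        path.write_bytes(desired)
    if (path.stat().st_mode & 0o777) != mode:      # mode => '0644'
        os.chmod(path, mode)
        changed = True
    return changed, old_md5, new_md5
```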

Next, we test the installation on a single node:

$ sudo /opt/puppetlabs/bin/puppet agent --test

A successful verification displays:

Info: Using configured environment 'production'

Info: Retrieving pluginfacts

Info: Retrieving plugin

Info: Caching catalog for puppet-agent

Info: Applying configuration version '1548305548'

Notice: /Stage[main]/Main/File[/tmp/it_works.txt]/content:

--- /tmp/it_works.txt 2019-01-27 02:32:49.810181594 +0000

+++ /tmp/puppet-file20190124-9628-1vy51gg 2019-01-27 02:52:28.717734377 +0000

@@ -0,0 +1 @@

+it works!

Info: Computing checksum on file /tmp/it_works.txt

Info: /Stage[main]/Main/File[/tmp/it_works.txt]: Filebucketed /tmp/it_works.txt

to puppet with sum d41d8cd98f00b204e9800998ecf8427e

Notice: /Stage[main]/Main/File[/tmp/it_works.txt]/content: content

changed '{md5}d41d8cd98f00b204e9800998ecf8427e' to '{md5}0375aad9b9f3905d3c545b500e871aca'

Info: Creating state file /opt/puppetlabs/puppet/cache/state/state.yaml

Notice: Applied catalog in 0.13 seconds

Installation of Puppet Enterprise

First, download the ubuntu-<version and arch>.tar.gz tarball and its GPG signature file to the Ubuntu VM.

Second, we import the Puppet public key:

$ wget -O - https://downloads.puppetlabs.com/puppet-gpg-signing-key.pub | gpg --import

We will see output like:

--2019-02-03 14:02:54-- https://downloads.puppetlabs.com/puppet-gpg-signing-key.pub

Resolving downloads.puppetlabs.com

(downloads.puppetlabs.com)... 2600:9000:201a:b800:10:d91b:7380:93a1

, 2600:9000:201a:800:10:d91b:7380:93a1, 2600:9000:201a:be00:10:d91b:7380:93a1, ...

Connecting to downloads.puppetlabs.com (downloads.puppetlabs.com)

|2600:9000:201a:b800:10:d91b:7380:93a1|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 3139 (3.1K) [binary/octet-stream]

Saving to: ‘STDOUT’

- 100%[===================>] 3.07K --.-KB/s in 0s

2019-02-03 14:02:54 (618 MB/s) - written to stdout [3139/3139]

gpg: key 7F438280EF8D349F: "Puppet, Inc. Release Key

(Puppet, Inc. Release Key) <release@puppet.com>" not changed

gpg: Total number processed: 1

gpg: unchanged: 1

Third, we print the fingerprint of the key:

$ gpg --fingerprint 0x7F438280EF8D349F

We will see successful output like:

pub rsa4096 2016-08-18 [SC] [expires: 2021-08-17]

6F6B 1550 9CF8 E59E 6E46 9F32 7F43 8280 EF8D 349F

uid [ unknown] Puppet, Inc. Release Key

(Puppet, Inc. Release Key) <release@puppet.com>

sub rsa4096 2016-08-18 [E] [expires: 2021-08-17]
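A quick consistency check: for an OpenPGP version 4 key, the 40-hex-digit fingerprint ends with the 16-hex-digit long key ID, so the fingerprint printed above should end in 7F438280EF8D349F, the ID passed to gpg --fingerprint. The Python sketch below (a hypothetical helper) performs that comparison and rejects truncated input. It only checks internal consistency; the real trust decision is whether the full fingerprint matches the one Puppet publishes through a channel you trust.

```python
import string


def long_key_id(fingerprint: str) -> str:
    """Return the 64-bit (16 hex digit) key ID at the end of a v4 OpenPGP fingerprint."""
    digits = "".join(fingerprint.split()).upper()
    if len(digits) != 40 or any(c not in string.hexdigits for c in digits):
        raise ValueError("expected a 40-hex-digit OpenPGP v4 fingerprint")
    return digits[-16:]


print(long_key_id("6F6B 1550 9CF8 E59E 6E46 9F32 7F43 8280 EF8D 349F"))
# 7F438280EF8D349F
```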

Fourth, we verify the release signature of the downloaded package:

$ gpg --verify puppet-enterprise-VERSION-PLATFORM.tar.gz.asc

Successful output looks like:

gpg: assuming signed data in 'puppet-enterprise-2019.0.2-ubuntu-18.04-amd64.tar.gz'

gpg: Signature made Fri 25 Jan 2019 02:03:23 PM EST

gpg: using RSA key 7F438280EF8D349F

gpg: Good signature from "Puppet, Inc. Release Key

(Puppet, Inc. Release Key) <release@puppet.com>" [unknown]

gpg: WARNING: This key is not certified with a trusted signature!

gpg: There is no indication that the signature belongs to the owner.

Primary key fingerprint: 6F6B 1550 9CF8 E59E 6E46 9F32 7F43 8280 EF8D 349

Next, we need to unpack the installation tarball. Store its path in the $TARBALL variable, which we use during the installation:

$ export TARBALL=<path of tarball file>

Then, we extract the tarball:

$ tar -xf $TARBALL

Next, we run the installer from the extracted installer directory:

$ sudo ./puppet-enterprise-installer

This asks us to choose an installation method: express, text-mode, or graphical (web-based) installation.

~/pe/puppet-enterprise-2019.0.2-ubuntu-18.04-amd64$ sudo ./puppet-enterprise-installer

=============================================================

Puppet Enterprise Installer

=============================================================

## Installer analytics are enabled by default.

## To disable, set the DISABLE_ANALYTICS environment variable and rerun

this script.

For example, "sudo DISABLE_ANALYTICS=1 ./puppet-enterprise-installer".

## If puppet_enterprise::send_analytics_data is set to false in your

existing pe.conf, this is not necessary and analytics will be disabled.

Puppet Enterprise offers three different methods of installation.

[1] Express Installation (Recommended)

This method will install PE and provide you with a link at the end

of the installation to reset your PE console admin password

Make sure to click on the link and reset your password before proceeding

to use PE

[2] Text-mode Install

This method will open your EDITOR (vi) with a PE config file (pe.conf)

for you to edit before you proceed with installation.

The pe.conf file is a HOCON formatted file that declares parameters

and values needed to install and configure PE.

We recommend that you review it carefully before proceeding.

[3] Graphical-mode Install

This method will install and configure a temporary webserver to walk

you through the various configuration options.

NOTE: This method requires you to be able to access port 3000 on this

machine from your desktop web browser.

=============================================================

How to proceed? [1]:

-------------------------------------------------------------------

Enter 3 to choose the web-based graphical-mode installation.

When it succeeds, we see output like the following:

## We're preparing the Web Installer...

2019-02-02T20:01:39.677-05:00 Running command:

mkdir -p /opt/puppetlabs/puppet/share/installer/installer

2019-02-02T20:01:39.685-05:00 Running command:

cp -pR /home/ritesh/pe/puppet-enterprise-2019.0.2-ubuntu-18.04-amd64/*

/opt/puppetlabs/puppet/share/installer/installer/

## Go to https://<localhost>:3000 in your browser to continue installation.

The graphical installer's temporary web server listens on port 3000, so make sure the firewall allows traffic on that port:

$ sudo ufw allow 3000
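To confirm that the temporary web installer is actually reachable, one can check that something is listening on port 3000. The sketch below parses the output of `ss -ltn` (from iproute2) and assumes its default column layout, in which the fourth column is the local address and port; `port_listening` is a hypothetical helper name.

```shell
#!/bin/sh
# port_listening PORT: read `ss -ltn` output on stdin and succeed if some
# TCP socket is listening on PORT (its local address ends in ":PORT").
port_listening() {
  awk -v suffix=":$1" '
    NR > 1 {                      # skip the header line
      addr = $4                   # e.g. 0.0.0.0:3000 or [::]:3000
      if (length(addr) >= length(suffix) &&
          substr(addr, length(addr) - length(suffix) + 1) == suffix) found = 1
    }
    END { exit (found ? 0 : 1) }
  '
}

# Typical use on the PE server while the web installer is running:
#   ss -ltn | port_listening 3000 && echo "web installer is listening on 3000"
#   sudo ufw status          # the rule added above should mention 3000
```

Matching the whole ":PORT" suffix, rather than searching for "3000" anywhere, keeps a listener on port 30000 from being mistaken for the installer.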

Next, open https://localhost:3000 in a browser to complete the installation.

Click the Get started button.

Choose Install on this server.

Enter <mypserver> as the DNS alias. This is the name of our Puppet server; it can also be set in the configuration file.

Enter the console admin password.

Click Continue.

We then see the Confirm the plan screen with the following information:

The Puppet master component

Hostname

ritesh-ubuntu-pe

DNS aliases

<mypserver>

Click Continue and check the installer validation screen.

Click the Deploy Now button.

Puppet Enterprise is then installed, and the following message is displayed on screen:

Puppet agent ran successfully

Log in to the console with the admin password set earlier, and click the Nodes link to manage nodes.

Installing Puppet Enterprise as a text-mode monolithic installation

$ sudo ./puppet-enterprise-installer

Enter 2 at the "How to proceed?" prompt for a text-mode monolithic installation.

If the installation succeeds, the following message is displayed:

2019-02-02T22:08:12.662-05:00 - [Notice]: Applied catalog in 339.28 seconds

2019-02-02T22:08:13.856-05:00 - [Notice]:

Sent analytics: pe_installer - install_finish - succeeded

* /opt/puppetlabs/puppet/bin/puppet infrastructure configure

--detailed-exitcodes --environmentpath /opt/puppetlabs/server/data/environments

--environment enterprise --no-noop --install=2019.0.2 --install-method='repair'

* returned: 2

## Puppet Enterprise configuration complete!

Documentation: https://puppet.com/docs/pe/2019.0/pe_user_guide.html

Release notes: https://puppet.com/docs/pe/2019.0/pe_release_notes.html

If this is a monolithic configuration, run 'puppet agent -t' to complete the

setup of this system.

If this is a split configuration, install or upgrade the remaining PE components,

and then run puppet agent -t on the Puppet master, PuppetDB, and PE console,

in that order.

~/pe/puppet-enterprise-2019.0.2-ubuntu-18.04-amd64

2019-02-02T22:08:14.805-05:00 Running command: /opt/puppetlabs/puppet/bin/puppet

agent --enable

~/pe/puppet-enterprise-2019.0.2-ubuntu-18.04-amd64$

This is called a monolithic installation because all components of Puppet Enterprise, such as the Puppet master, PuppetDB, and the console, are installed on a single node. This installation type is easy to set up, and troubleshooting errors and upgrading the infrastructure are simple. It can easily support an infrastructure of up to 20,000 managed nodes, and compile master nodes can be added as the network grows. It is the recommended installation type for small to mid-size organizations [2].

During a text-mode installation, the pe.conf configuration file is opened in an editor so that we can configure its values. This file contains the parameters and values for installing, upgrading, and configuring Puppet.

Some important parameters that can be specified in the pe.conf file are:

- console_admin_password
- puppet_enterprise::console_host
- puppet_enterprise::puppetdb_host
- puppet_enterprise::puppetdb_database_name
- puppet_enterprise::puppetdb_database_user
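Before continuing a text-mode installation, it can help to check which of the parameters above actually appear in pe.conf. The sketch below is a plain text search rather than a HOCON parser. It assumes keys are written in double quotes (HOCON does not allow `:` in unquoted keys, so keys containing `::` must be quoted), and `missing_pe_params` is a hypothetical helper name. It only reports which keys are absent; it does not say which ones a given installation requires.

```shell
#!/bin/sh
# missing_pe_params FILE KEY...: print each KEY that does not appear as a
# double-quoted key in FILE. Plain text search, not a HOCON parser, so a
# key that appears only inside a comment still counts as present.
missing_pe_params() {
  conf=$1
  shift
  for key in "$@"; do
    grep -qF "\"$key\"" "$conf" || echo "$key"
  done
}

# Typical use (the pe.conf path is a placeholder):
#   missing_pe_params pe.conf console_admin_password \
#     puppet_enterprise::console_host puppet_enterprise::puppetdb_host
```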

Lastly, once the installation is complete, we run the Puppet agent:

$ puppet agent -t

A text-mode split installation is used for large networks. Compared to a monolithic installation, a split installation can manage large infrastructures of more than 20,000 nodes. In this type of installation, the components of Puppet Enterprise (master, PuppetDB, and console) are installed on separate nodes. It is recommended for organizations with large infrastructure needs [3].

In this type of installation, the components must be installed in a specific order: first the master, then PuppetDB, and finally the console.
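The installer output earlier in this section asks, for a split configuration, that `puppet agent -t` be run on the Puppet master, PuppetDB, and the PE console, in that order. A minimal sketch of doing this from an admin machine is shown below. It assumes SSH access and sudo rights on each node; the host names are placeholders, `RUNNER` is a hypothetical hook that defaults to `ssh`, and the full path `/opt/puppetlabs/bin/puppet` is used on the assumption that sudo's PATH may not include it. Note that `-t` (`--test`) turns on detailed exit codes, so an exit status of 2 (changes applied) counts as success; the same convention explains `returned: 2` in the installer log, whose command passes `--detailed-exitcodes`.

```shell
#!/bin/sh
# agent_run_ok CODE: `puppet agent -t` implies --detailed-exitcodes, where
# 0 = succeeded with no changes, 2 = succeeded with changes, and
# 1, 4 or 6 indicate a failed run or failed resources.
agent_run_ok() {
  [ "$1" -eq 0 ] || [ "$1" -eq 2 ]
}

# run_agents_in_order HOST...: run the agent on each host in the order
# given and stop at the first failure. RUNNER (hypothetical) defaults to ssh.
run_agents_in_order() {
  for host in "$@"; do
    rc=0
    ${RUNNER:-ssh} "$host" sudo /opt/puppetlabs/bin/puppet agent -t || rc=$?
    if ! agent_run_ok "$rc"; then
      echo "puppet agent failed on $host (exit code $rc)" >&2
      return 1
    fi
    echo "puppet agent finished on $host (exit code $rc)"
  done
}

# Split installation: master first, then PuppetDB, then the console, e.g.
#   run_agents_in_order master.example.com puppetdb.example.com console.example.com
```

Stopping at the first failure preserves the required order: the console is never configured against a PuppetDB node whose agent run did not succeed.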

Puppet Enterprise master and agent settings can be configured in the puppet.conf file. Most configuration settings of Puppet Enterprise components, such as the master, the agent, and security certificates, are specified in this file.

Configuration section of an agent node:

[main]

certname = your-domain-name.com

server = puppetserver

environment = testing

runinterval = 4h

Configuration sections of the master node:

[main]

certname = your-domain-name.com

server = puppetserver

environment = testing

runinterval = 4h

strict_variables = true

[master]

dns_alt_names = puppetserver,puppet,your-domain-name.com

reports = puppetdb

storeconfigs_backend = puppetdb

storeconfigs = true

environment_timeout = unlimited

The main components of a Puppet configuration file are comment lines, setting lines, and setting variables. Comment lines start with a hash character (#). A setting line consists of the name of a setting, followed by an equals sign and the setting's value. A setting's value generally consists of a single word, although multiple words can be specified in rare cases [4].
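To make this structure concrete, the sketch below reads one setting from one section of an INI-style puppet.conf with awk. It skips comment lines and trims spaces around names and values, but it is only an illustration: it does not implement Puppet's fallback from other sections to [main] or the interpolation of setting variables, and `conf_get` is a hypothetical name. On a node with Puppet installed, `puppet config print <setting> --section <section>` is the reliable way to see the effective value.

```shell
#!/bin/sh
# conf_get FILE SECTION SETTING: print the value of SETTING within
# [SECTION] of an INI-style puppet.conf, or nothing if it is not set there.
conf_get() {
  awk -v want_section="$2" -v want_key="$3" '
    /^[ \t]*#/ { next }                 # comment line
    /^[ \t]*\[/ {                        # section header such as [main]
      section = $0
      gsub(/[ \t]/, "", section)
      section = substr(section, 2, length(section) - 2)
      next
    }
    section == want_section && index($0, "=") > 0 {   # setting line
      eq = index($0, "=")
      key = substr($0, 1, eq - 1)
      val = substr($0, eq + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", key)
      gsub(/^[ \t]+|[ \t]+$/, "", val)
      if (key == want_key) { print val; exit }
    }
  ' "$1"
}

# Example (path is a placeholder):
#   conf_get /etc/puppetlabs/puppet/puppet.conf main server
```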

References

[1] Edureka, “Puppet tutorial – devops tool for configuration management.” Web Page, May-2017 [Online]. Available: https://www.edureka.co/blog/videos/puppet-tutorial/

[2] Puppet, “Text mode installation: Monolithic.” Web Page, Nov-2017 [Online]. Available: https://puppet.com/docs/pe/2017.1/install_text_mode_mono.html

[3] Puppet, “Text mode installation: Split.” Web Page, Nov-2017 [Online]. Available: https://puppet.com/docs/pe/2017.1/install_text_mode_split.html

[4] Puppet, “Config files: The main config files.” Web Page, Apr-2014 [Online]. Available: https://puppet.com/docs/puppet/5.3/config_file_main.html

4.5 - Travis

Travis CI is a continuous integration tool that is often used as part of DevOps development. It is a hosted service that enables users to test their projects on GitHub.

Once Travis is activated for a GitHub project, the developers can place a .travis.yml file in the project root. On every check-in, Travis interprets this configuration file and executes the commands it specifies.

In fact, this book also has a Travis file, located at

Please inspect it, as we will illustrate some of its concepts. Unfortunately, Travis does not use an up-to-date operating system such as Ubuntu 18.04, so its build images contain outdated libraries. Although we could use containers, we have chosen to update the operating system as needed instead.

This is done in the install phase, which in our case installs a newer version of pandoc as well as some additional libraries that we use.

In the env section, we specify where our executables can be found by setting the PATH variable.

The last portion of our example file specifies the script that is executed after the install phase has completed. As our project contains convenient and sophisticated makefiles, the script is very simple: it executes the appropriate make command in the corresponding directories.
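Since the book's own file is not reproduced here, the following is a hypothetical .travis.yml that only mirrors the three parts just described: an env entry that extends PATH, an install phase that updates the build image, and a script phase that calls make. The helper script and directory names are placeholders, not the book's actual configuration.

```yaml
# Hypothetical .travis.yml sketch; names marked as placeholders are not
# taken from the book's real file.
language: minimal

env:
  global:
    # Let later phases find executables installed under $HOME/bin.
    - PATH=$HOME/bin:$PATH

install:
  # Update the build image as needed, e.g. install a newer pandoc.
  - sudo apt-get update
  - ./bin/install-tools.sh     # placeholder helper script

script:
  # The makefiles do the real work; the script only calls make.
  - make -C book               # placeholder directory
```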

Exercises

E.travis.1:

Develop an alternative Travis file that uses a preconfigured Ubuntu 18.04 container.

E.travis.2:

Develop a Travis file that checks our book on multiple operating systems, such as macOS and Ubuntu 18.04.

Resources

4.6 - DevOps with AWS