Reports

- 1: Investigating the Classification of Breast Cancer Subtypes using KMeans

- 2: Project: Detection of Autism Spectrum Disorder with a Facial Image using Artificial Intelligence

- 3: Project: Analyzing the Advantages and Disadvantages of Artificial Intelligence for Breast Cancer Detection in Women

- 4: Increasing Cervical Cancer Risk Analysis

- 5: Cyber Attacks Detection Using AI Algorithms

- 6: Report: Dentronics: Classifying Dental Implant Systems by using Automated Deep Learning

- 7:

- 8: Report: Aquatic Animals Classification Using AI

- 9: Project: Hand Tracking with AI

- 10: Review: Handwriting Recognition Using AI

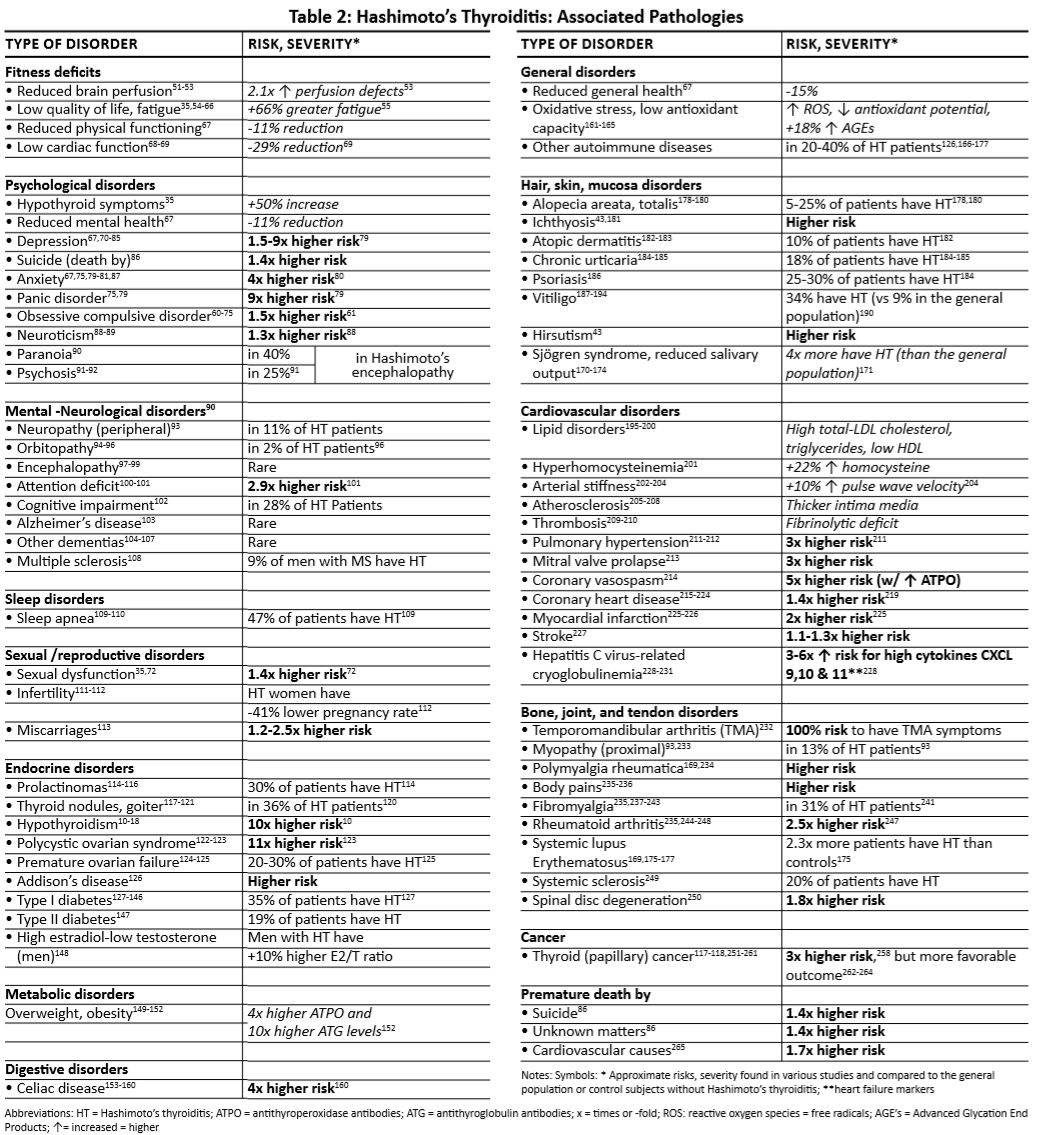

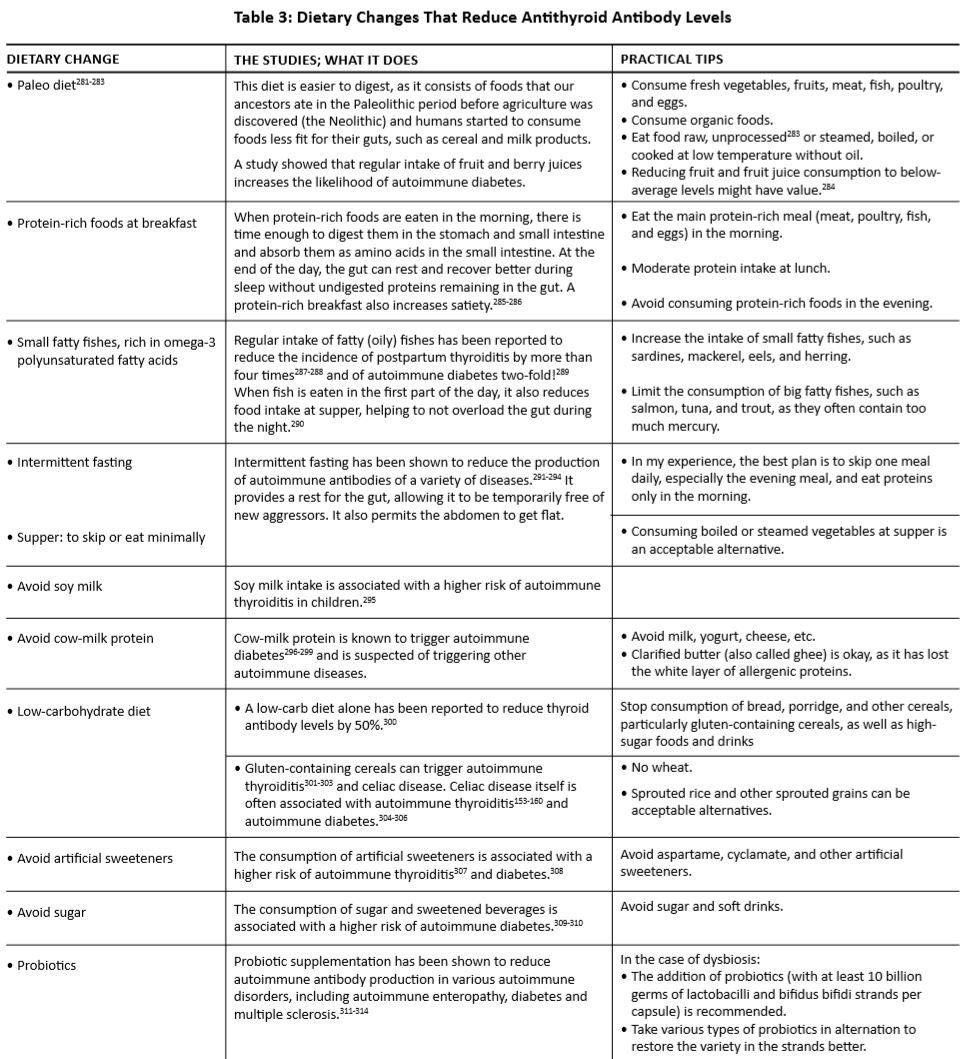

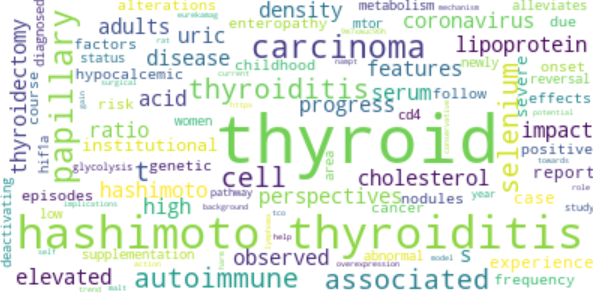

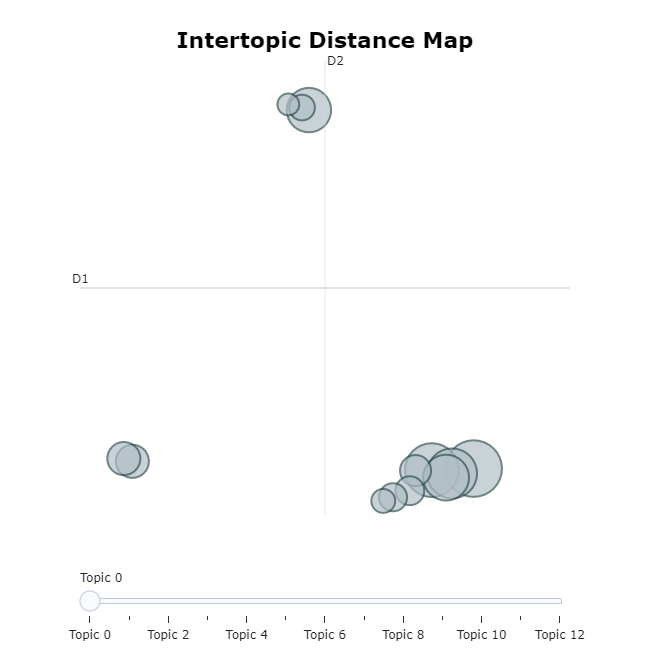

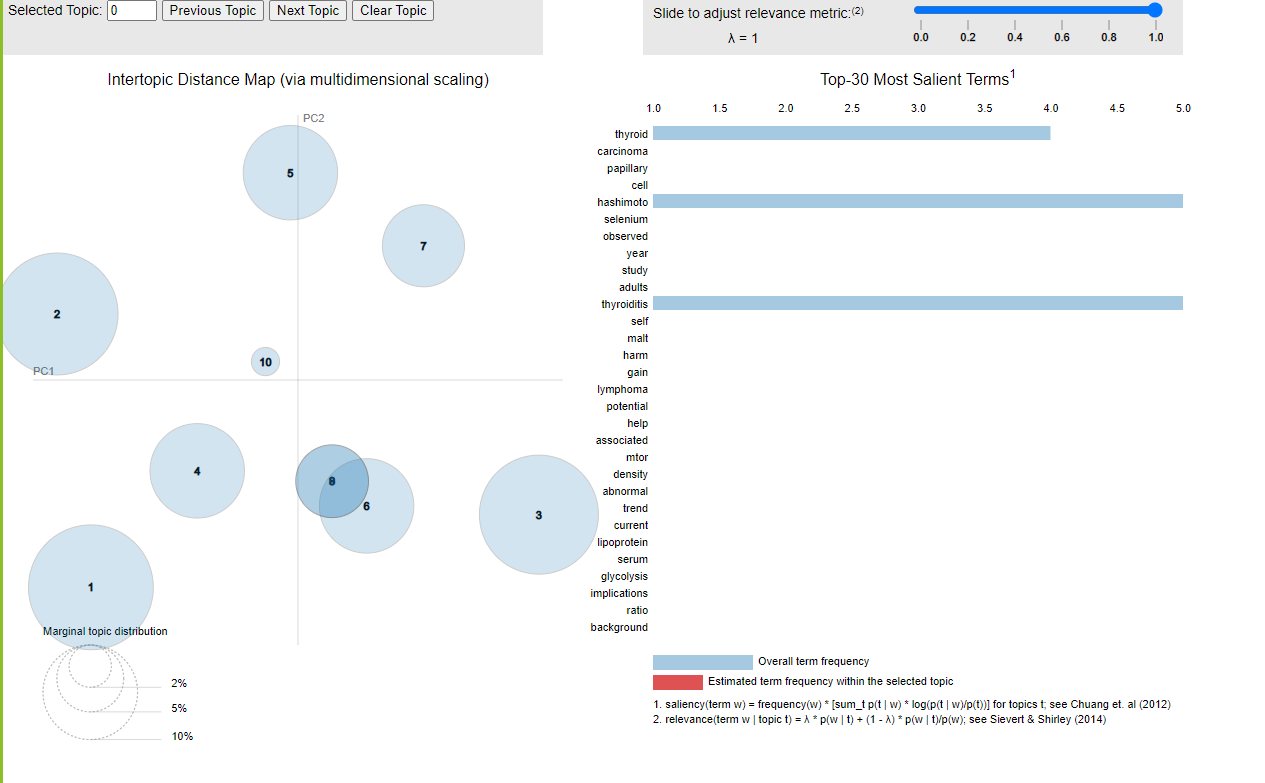

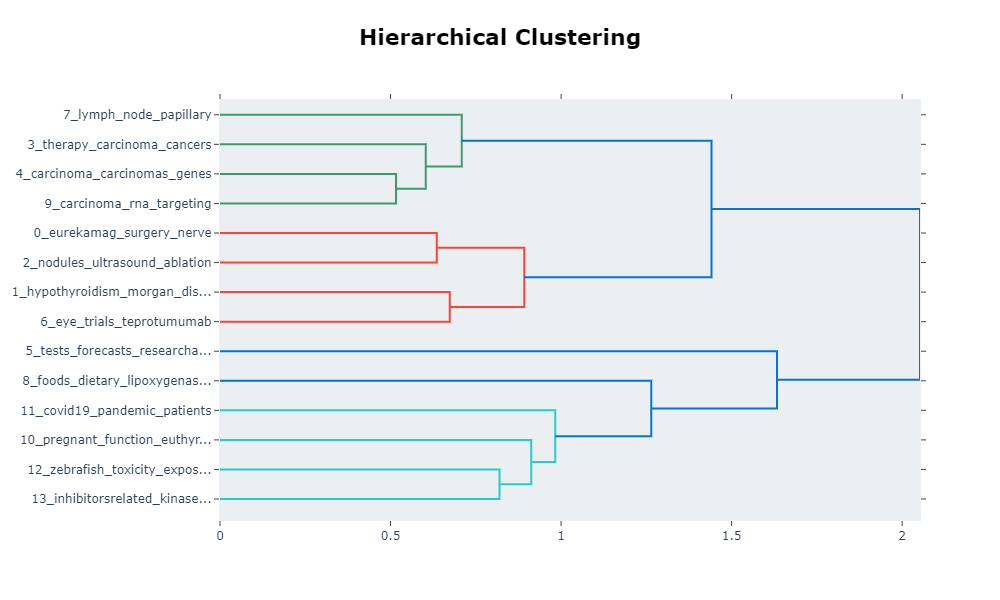

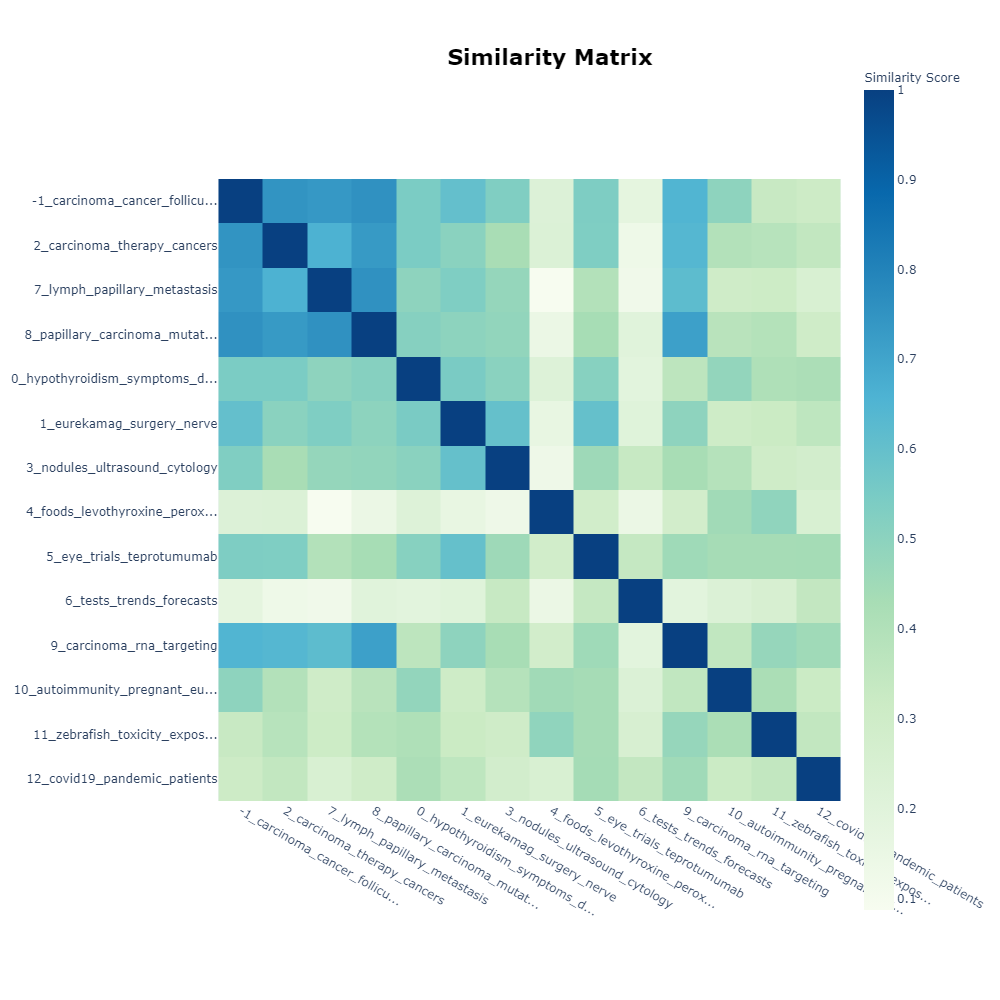

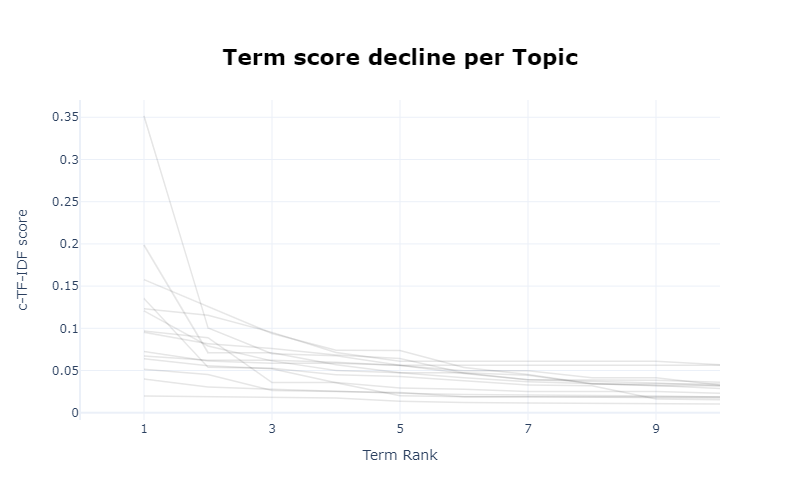

- 11: Project: Analyzing Hashimoto disease causes, symptoms and cases improvements using Topic Modeling

- 12: Project: Classification of Hyperspectral Images

- 13: Project: Detecting Multiple Sclerosis Symptoms using AI

- 14: Report: AI in Orthodontics

- 15: Time Series Analysis of Blockchain-Based Cryptocurrency Price Changes

- 16: Analysis of Covid-19 Vaccination Rates in Different Races

- 17: Aquatic Toxicity Analysis with the aid of Autonomous Surface Vehicle (ASV)

- 18: How Big Data has Affected Statistics in Baseball

- 19: Predictive Model For Pitches Thrown By Major League Baseball Pitchers

- 20: Big Data Analytics in the National Basketball Association

- 21: Big Data in E-Commerce

- 22: Big Data Analytics in Brazilian E-Commerce

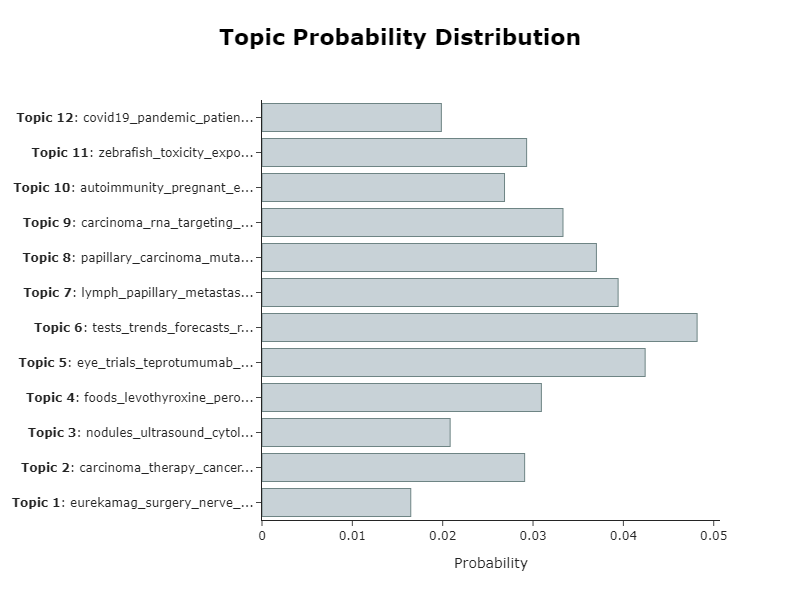

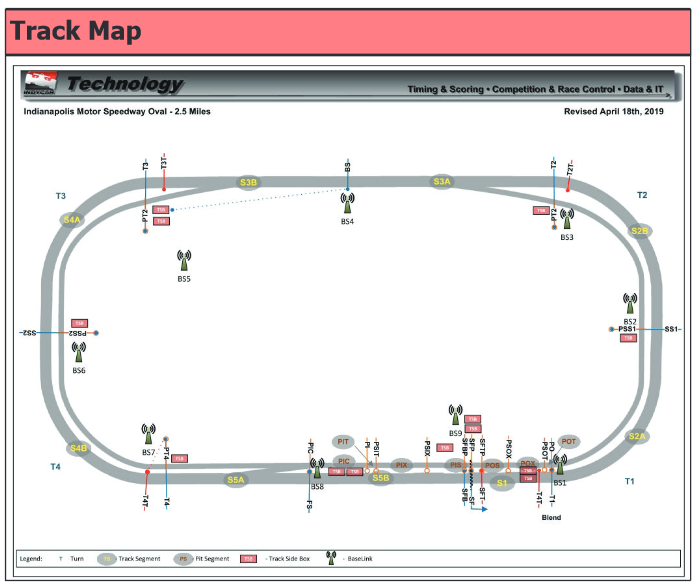

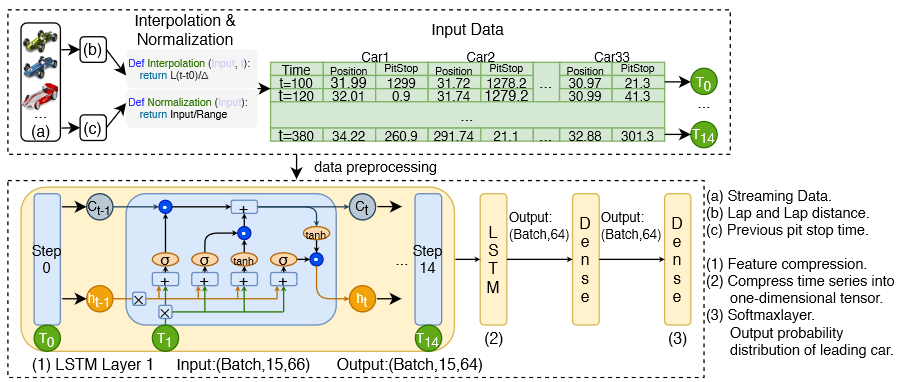

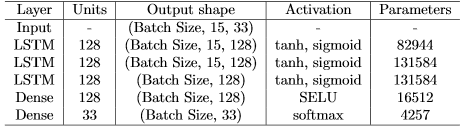

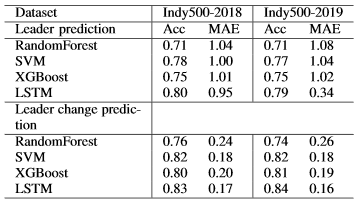

- 23: Rank Forecasting in Car Racing

- 24: Change of Internet Capabilities Throughout the World

- 25: Project: Chat Bots in Customer Service

- 26: COVID-19 Analysis

- 27: Analyzing the Relationship of Cryptocurrencies with Foreign Exchange Rates and Global Stock Market Indices

- 28: Project: Deep Learning in Drug Discovery

- 29: Big Data Application in E-commerce

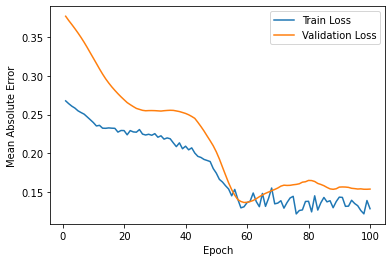

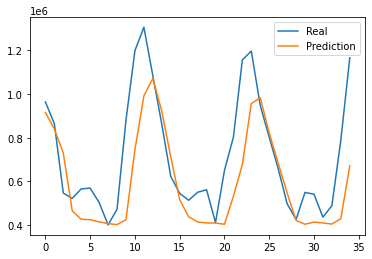

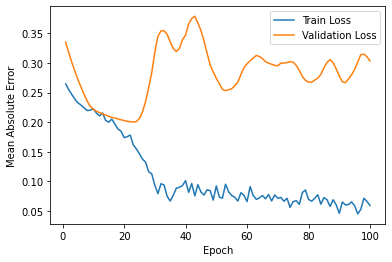

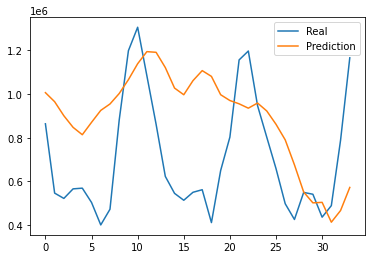

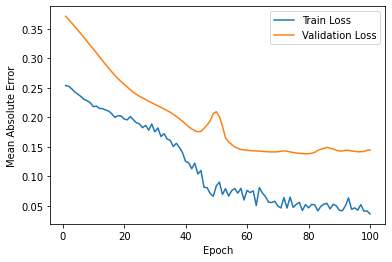

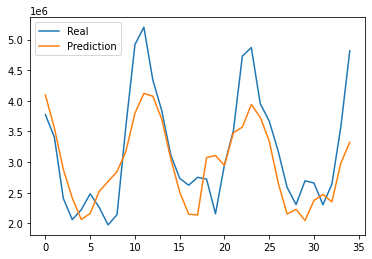

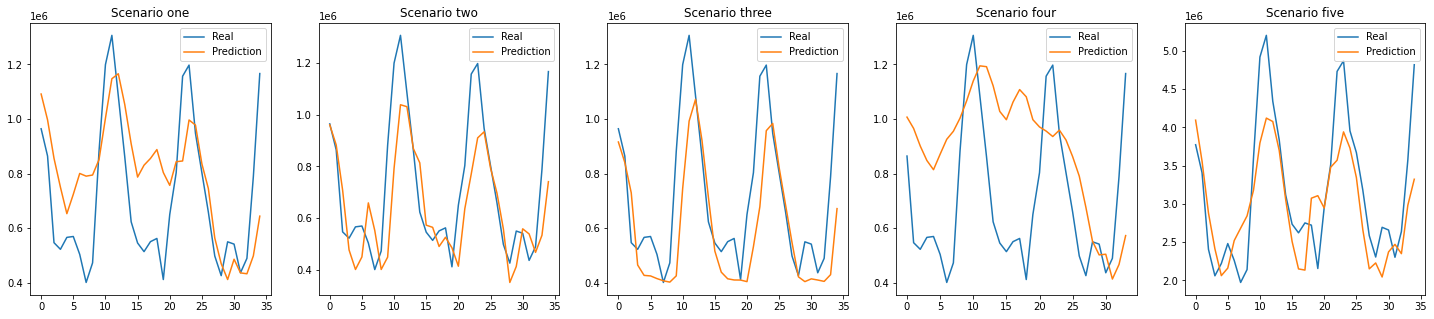

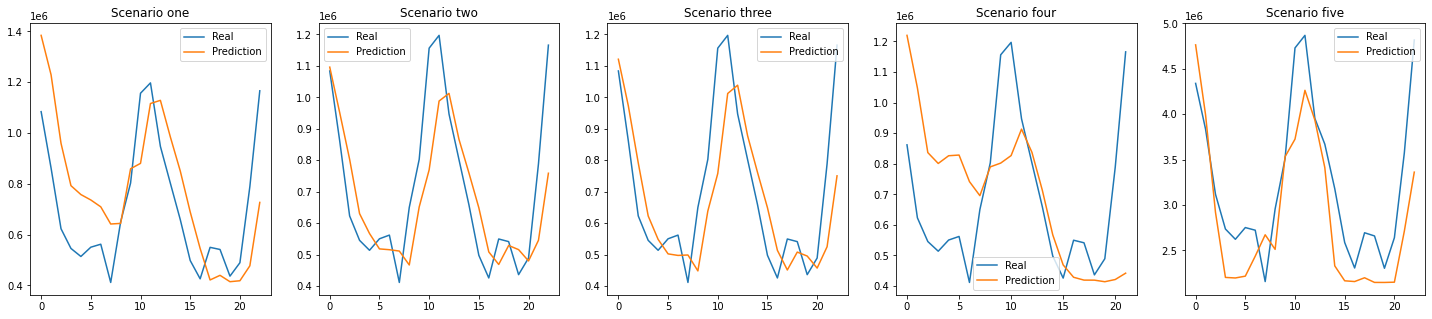

- 30: Residential Power Usage Prediction

- 31: Big Data Applications in the Gaming Industry

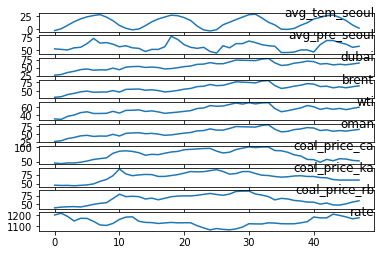

- 32: Project: Forecasting Natural Gas Demand/Supply

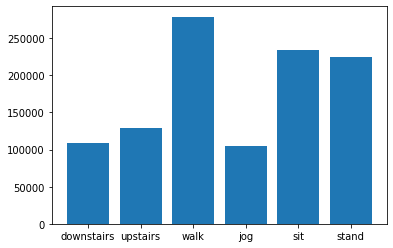

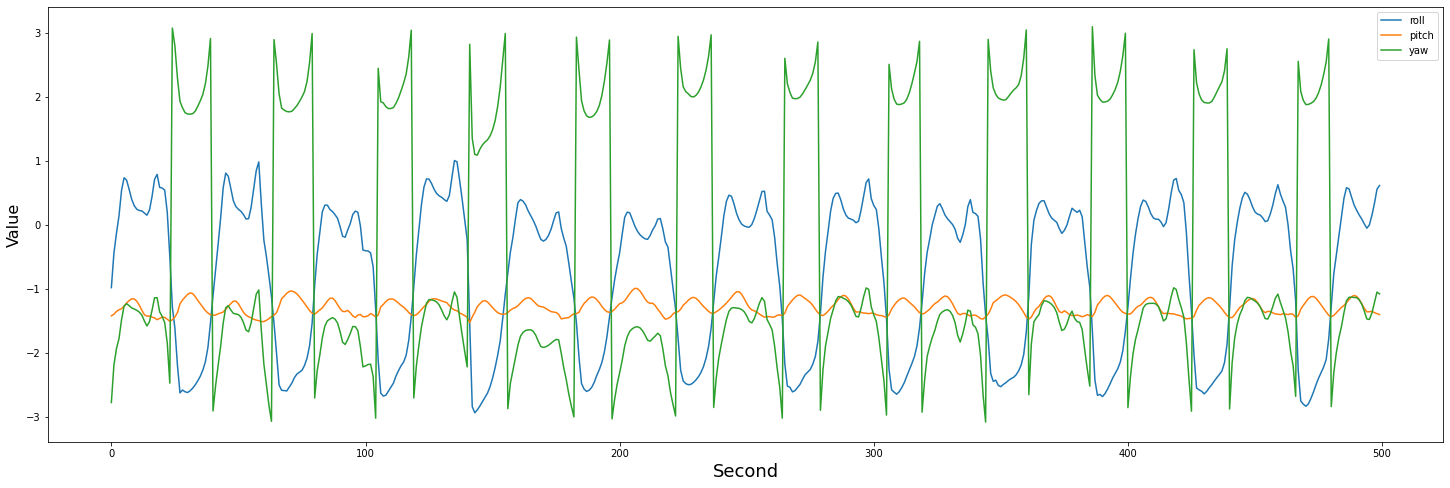

- 33: Big Data on Gesture Recognition and Machine Learning

- 34: Big Data in the Healthcare Industry

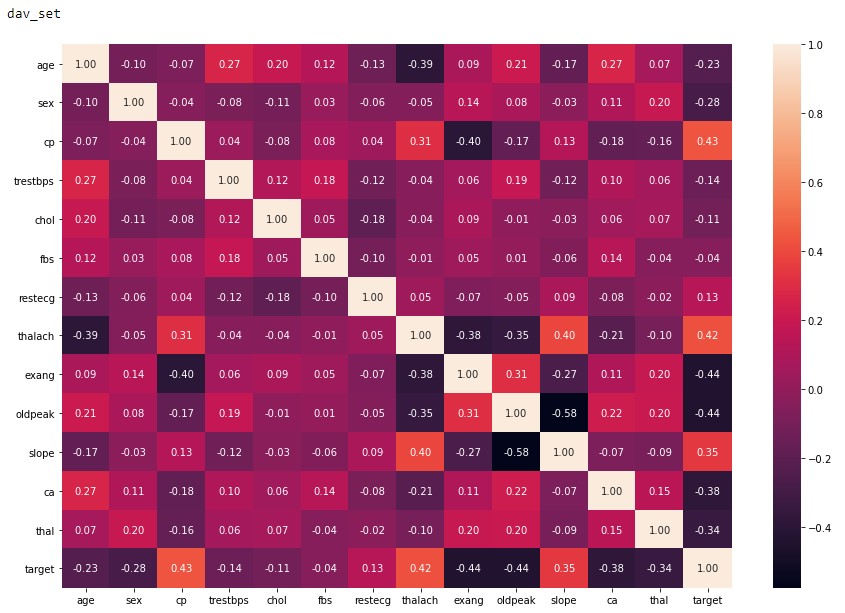

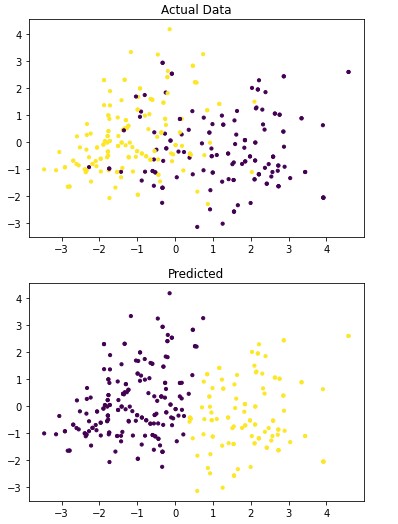

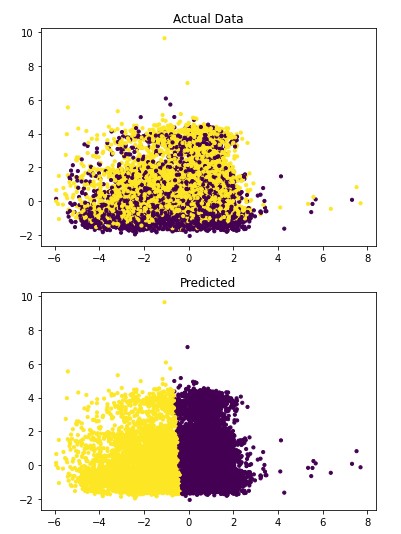

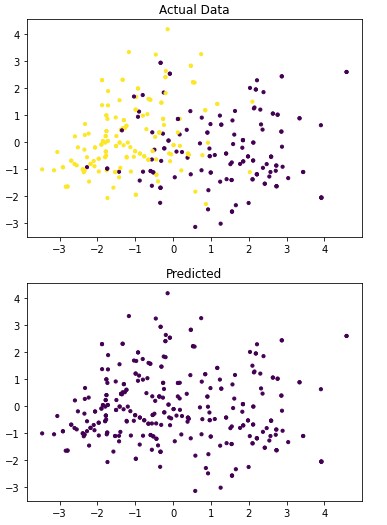

- 35: Analysis of Various Machine Learning Classification Techniques in Detecting Heart Disease

- 36: Predicting Hotel Reservation Cancellation Rates

- 37: Analysis of Future of Buffalo Breeds and Milk Production Growth in India

- 38: Music Mood Classification

- 39: Does Modern Day Music Lack Uniqueness Compared to Music before the 21st Century

- 40: NBA Performance and Injury

- 41: NFL Regular Season Skilled Position Player Performance as a Predictor of Playoff Appearance Overtime

- 42: Project: Training A Vehicle Using Camera Feed from Vehicle Simulation

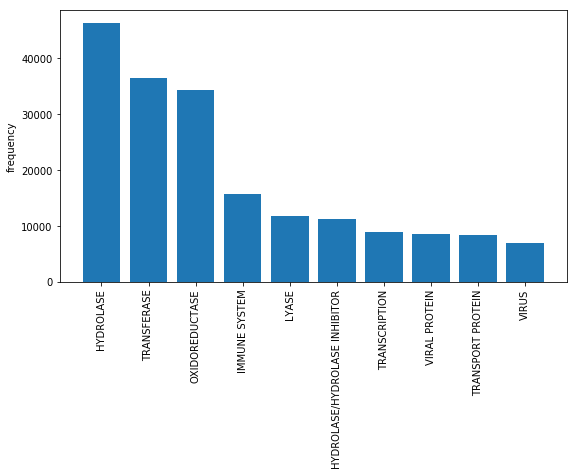

- 43: Project: Structural Protein Sequences Classification

- 44: How Big Data Can Eliminate Racial Bias and Structural Discrimination

- 45: Online Store Customer Revenue Prediction

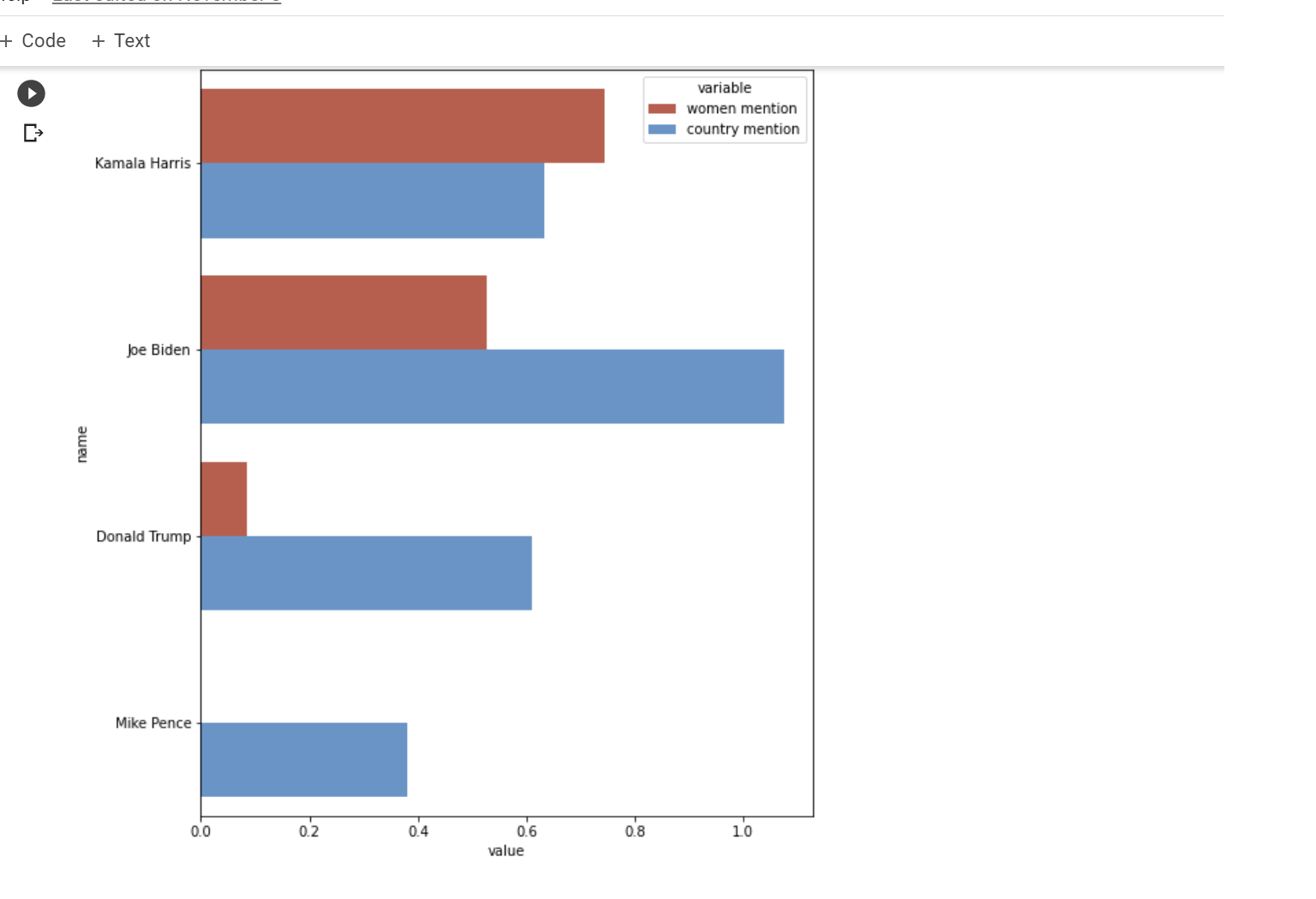

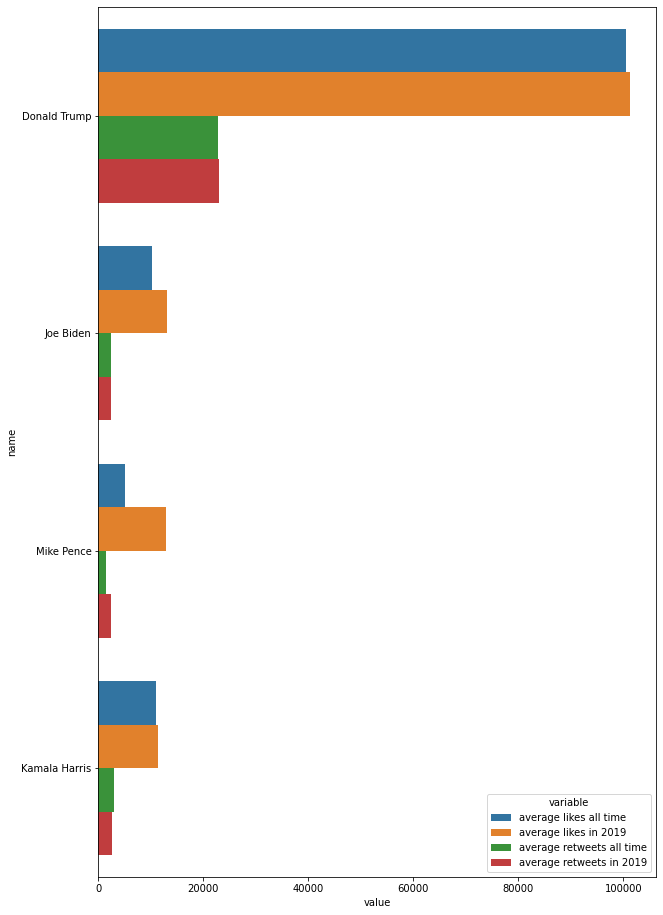

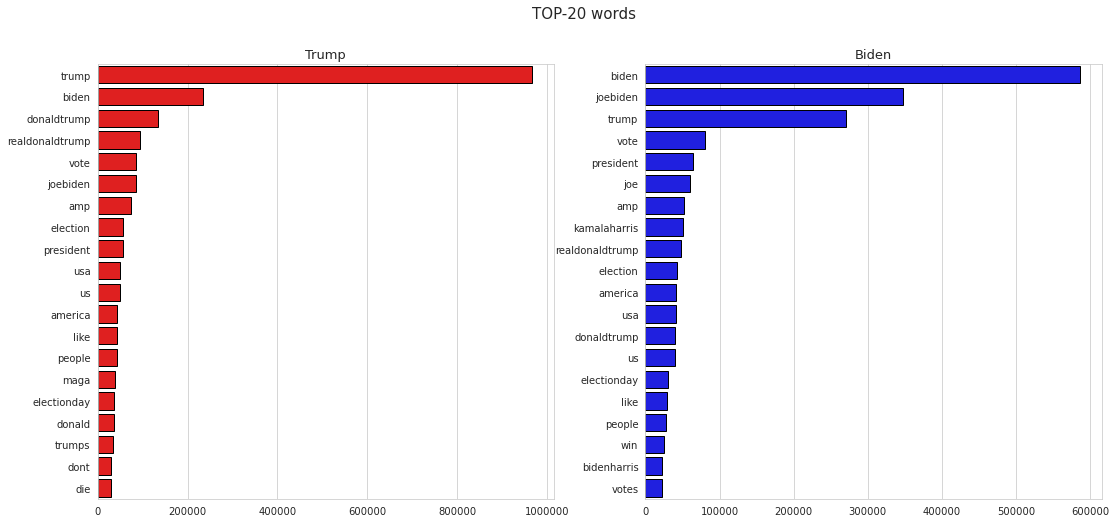

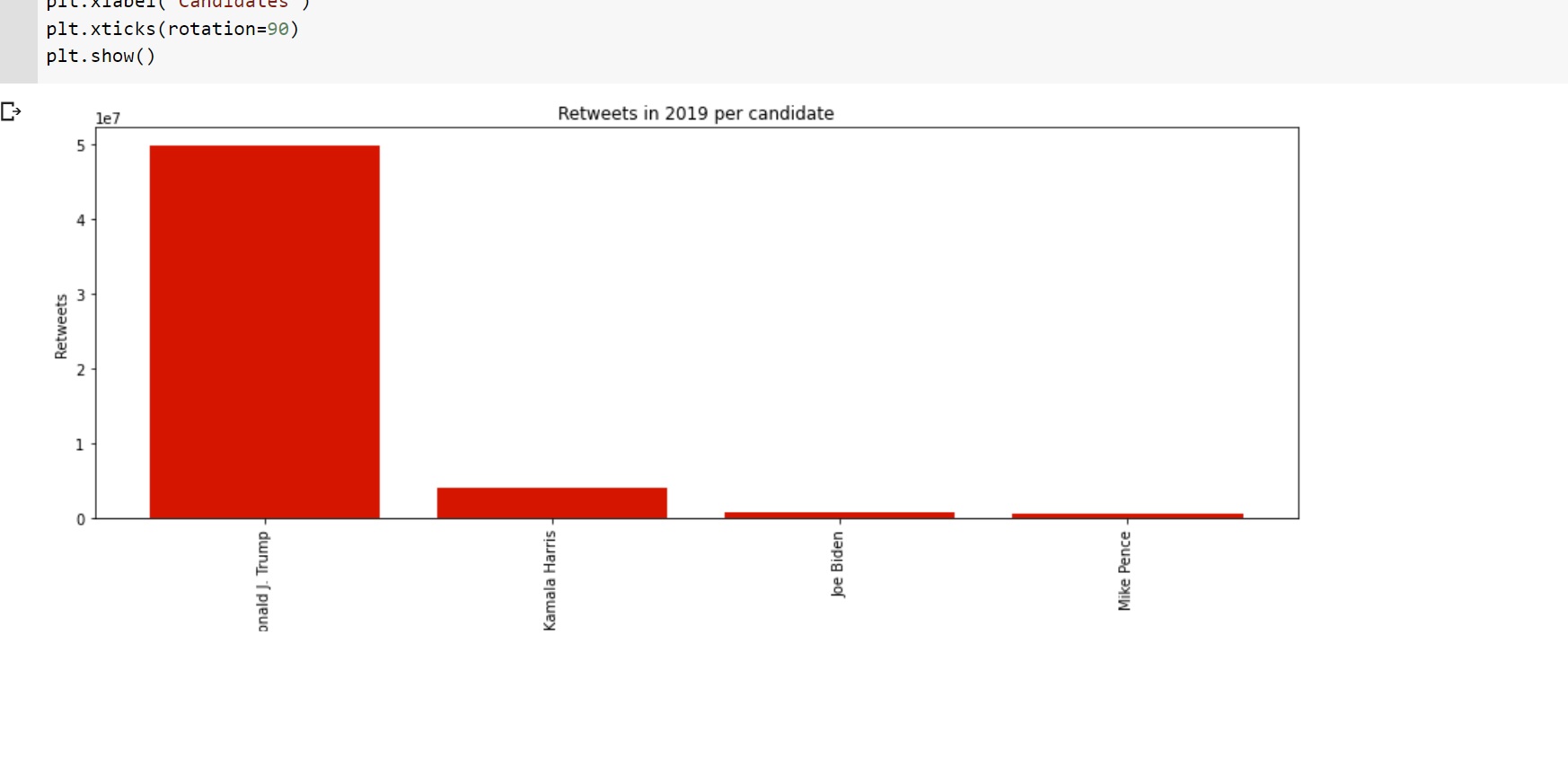

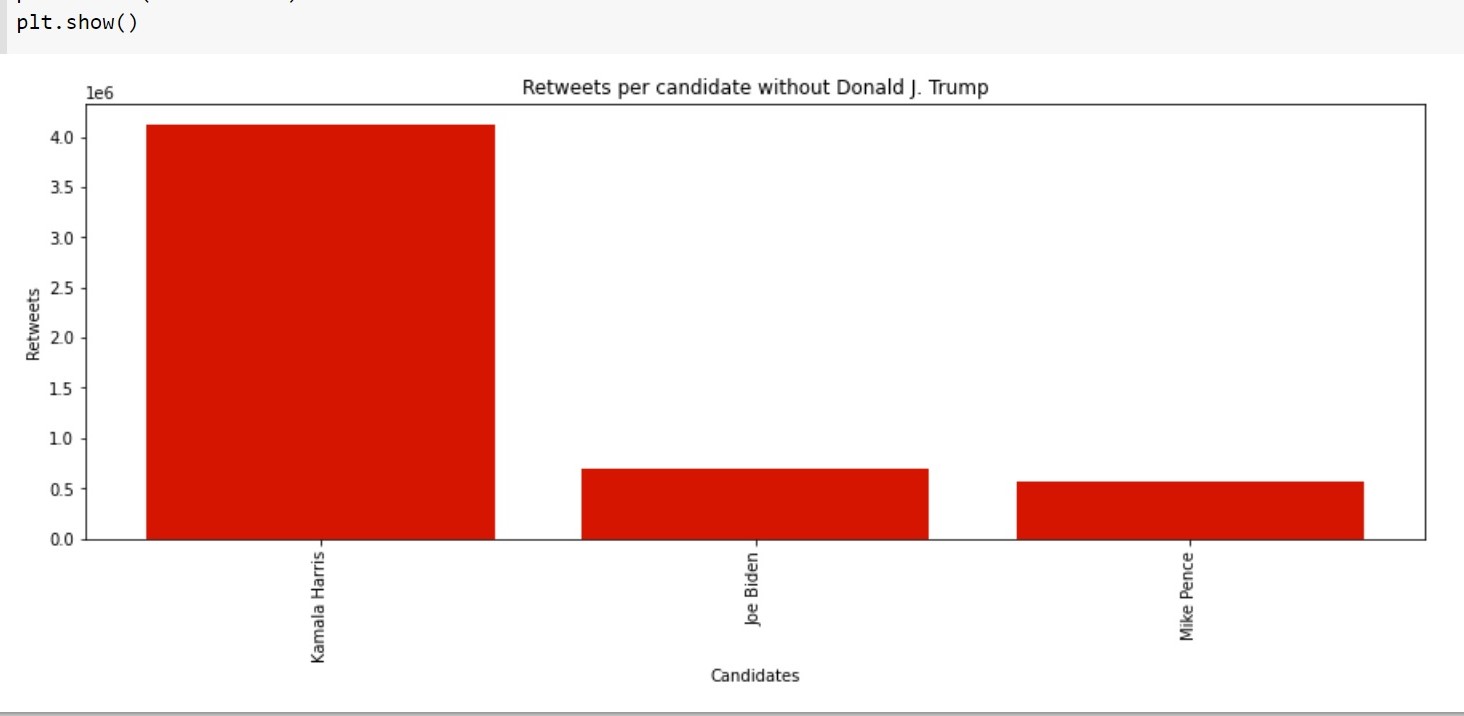

- 46: Sentiment Analysis and Visualization using a US-election dataset for the 2020 Election

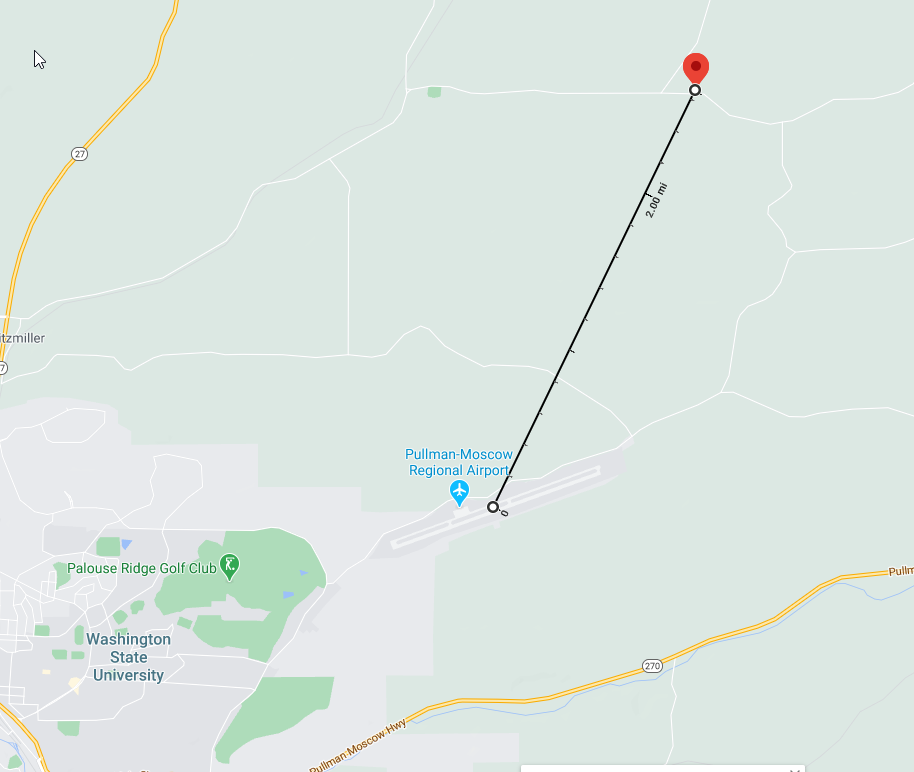

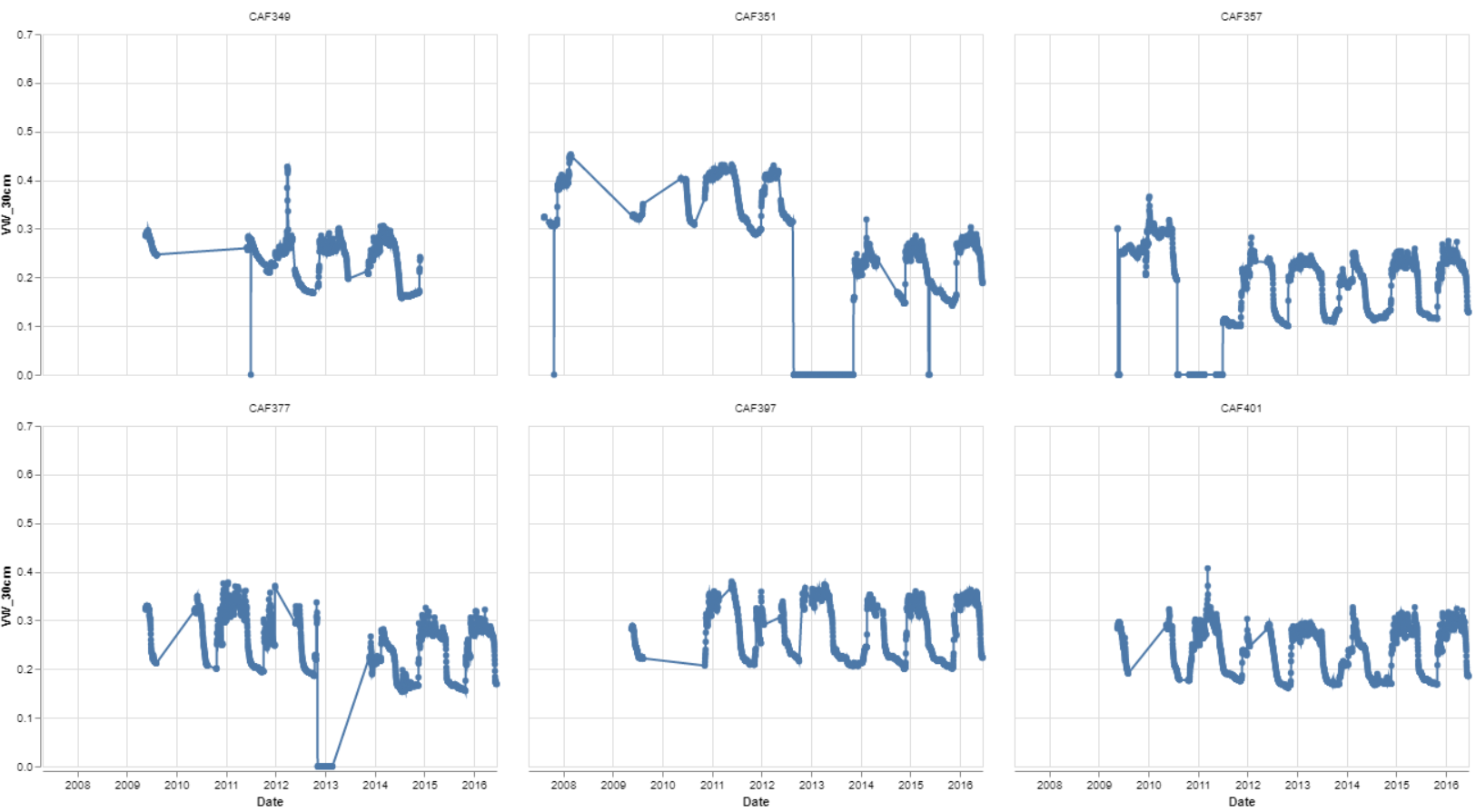

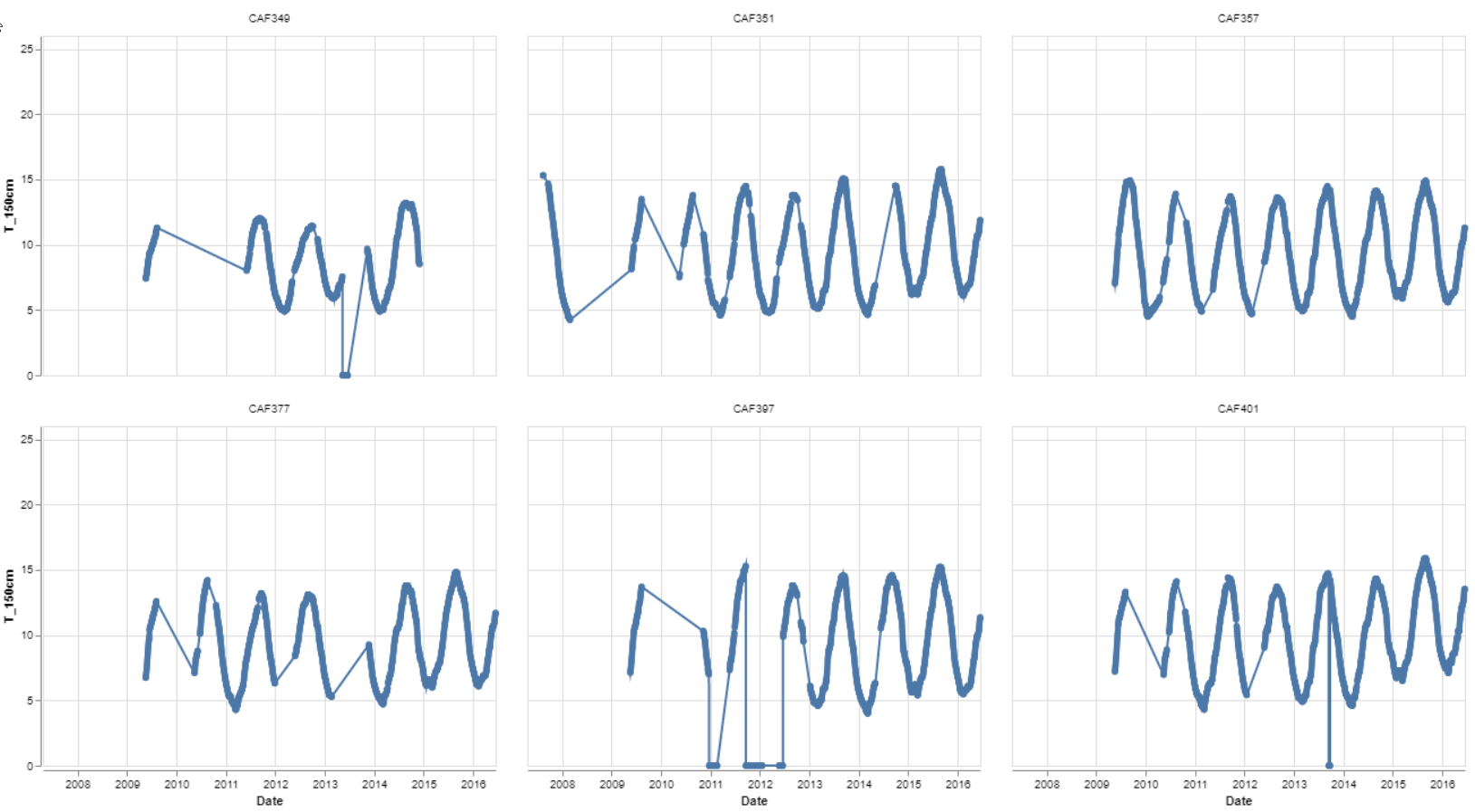

- 47: Estimating Soil Moisture Content Using Weather Data

- 48: Big Data in Sports Game Predictions and How It is Used in Sports Gambling

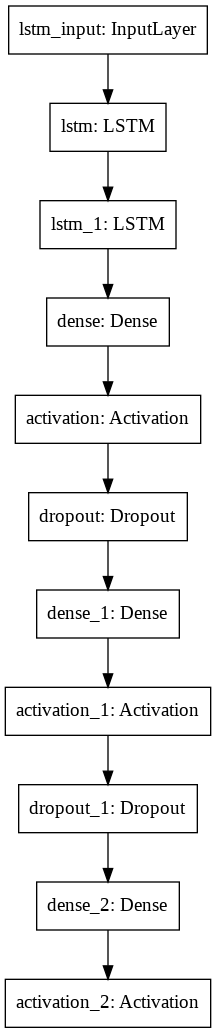

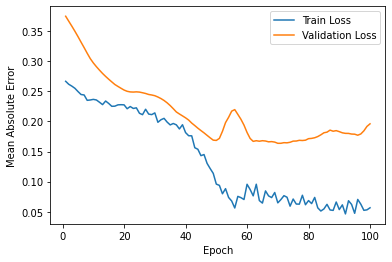

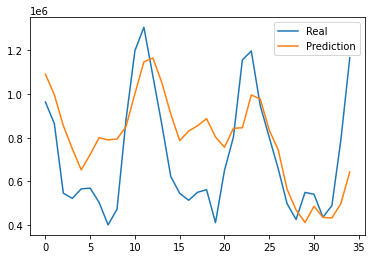

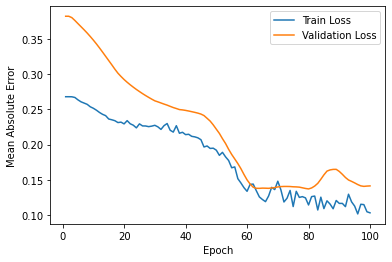

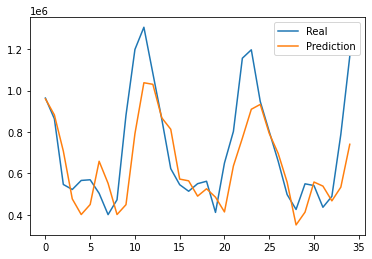

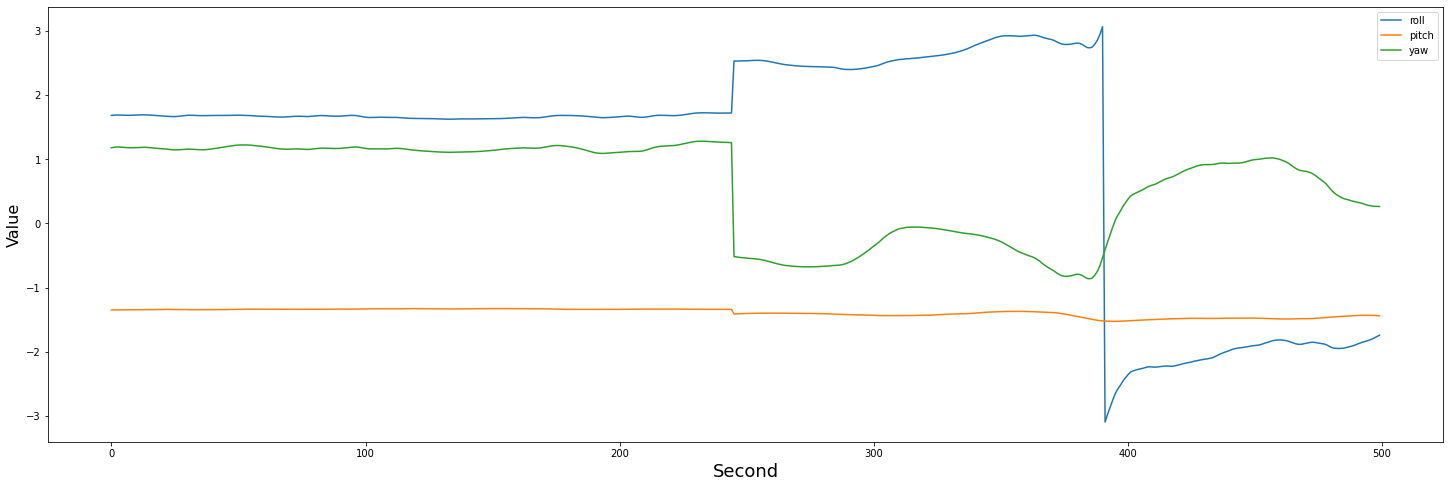

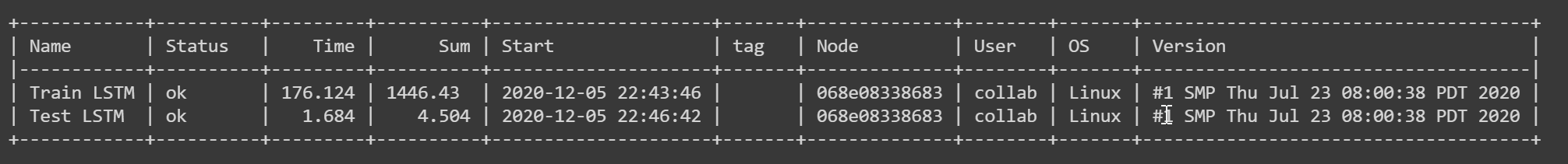

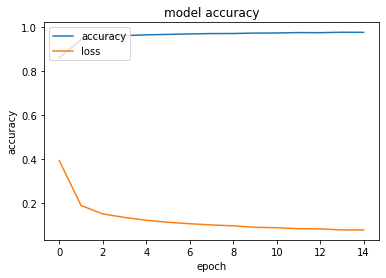

- 49: Analyzing LSTM Performance on Predicting the Stock Market for Multiple Time Steps

- 50: Stock Price Reactions to Earnings Announcements

- 51: Project: Stock level prediction

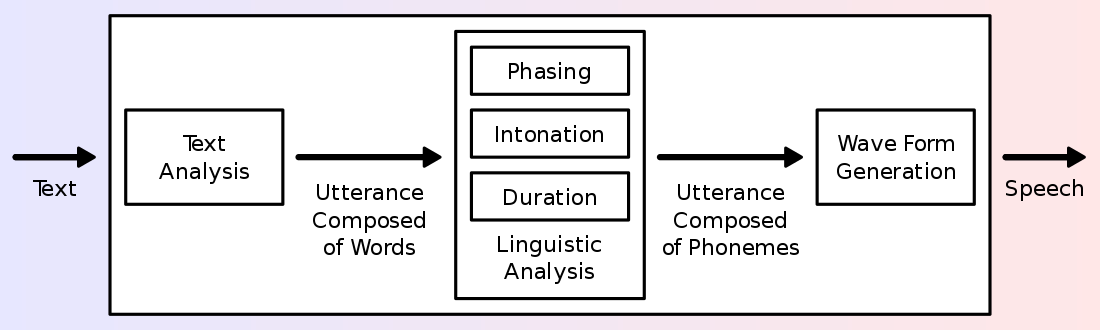

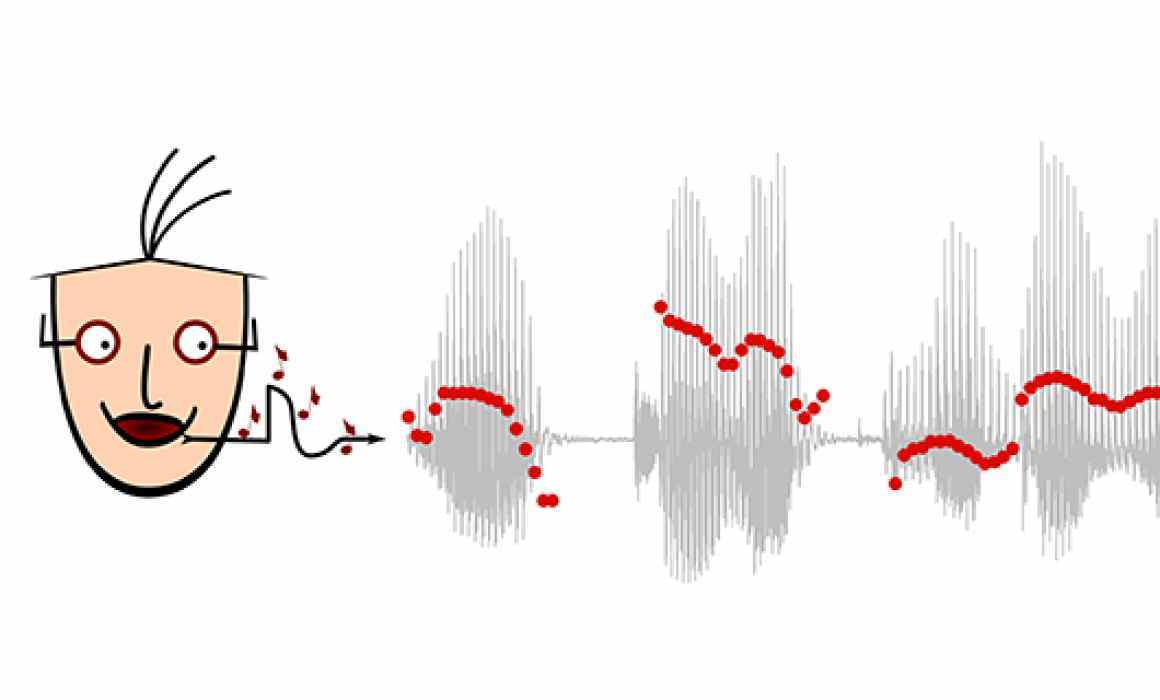

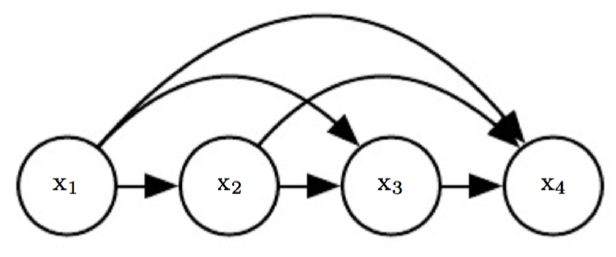

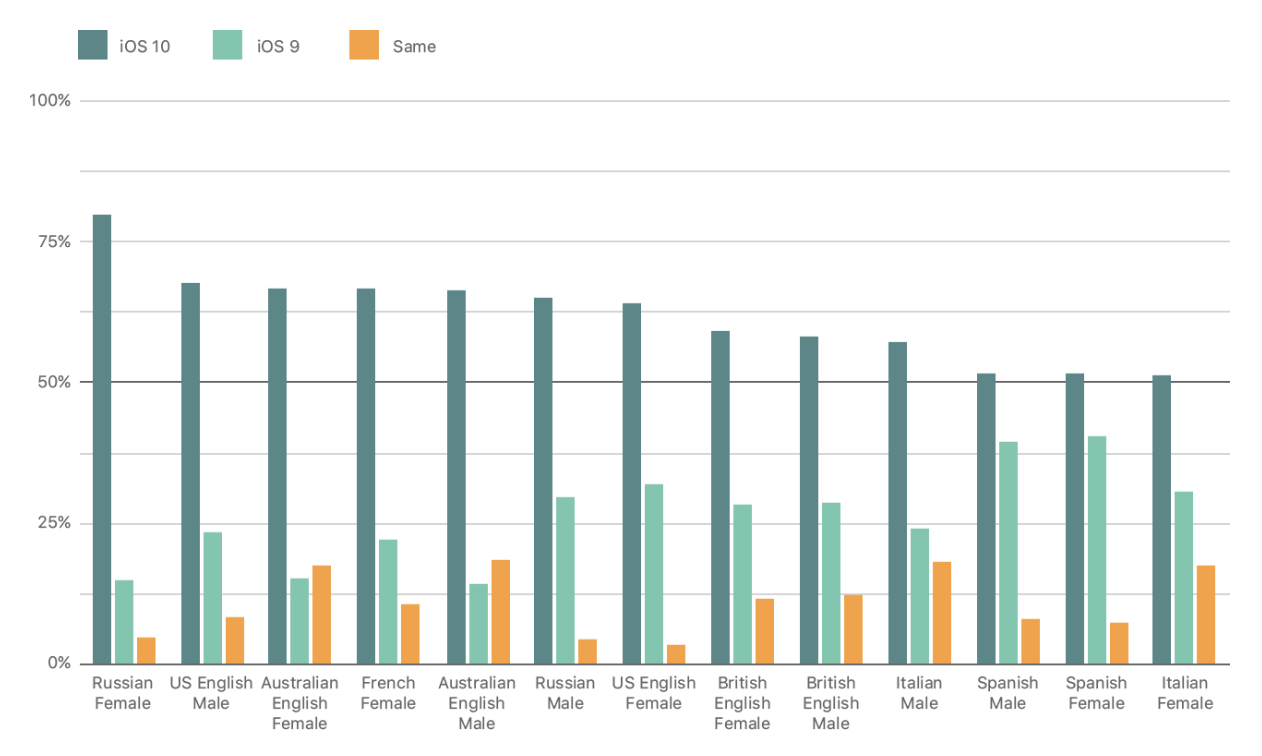

- 52: Review of Text-to-Voice Synthesis Technologies

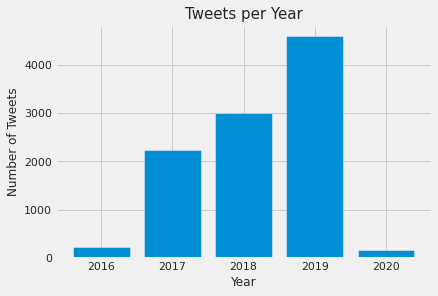

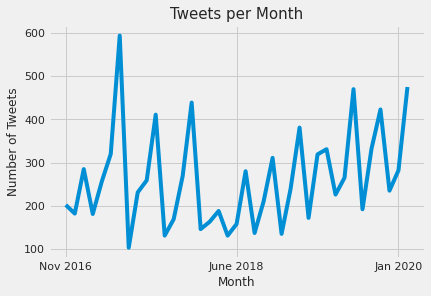

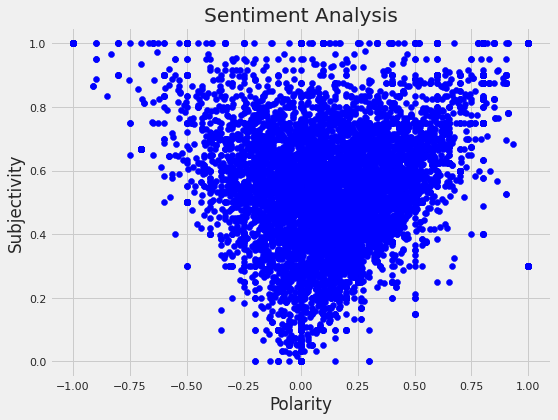

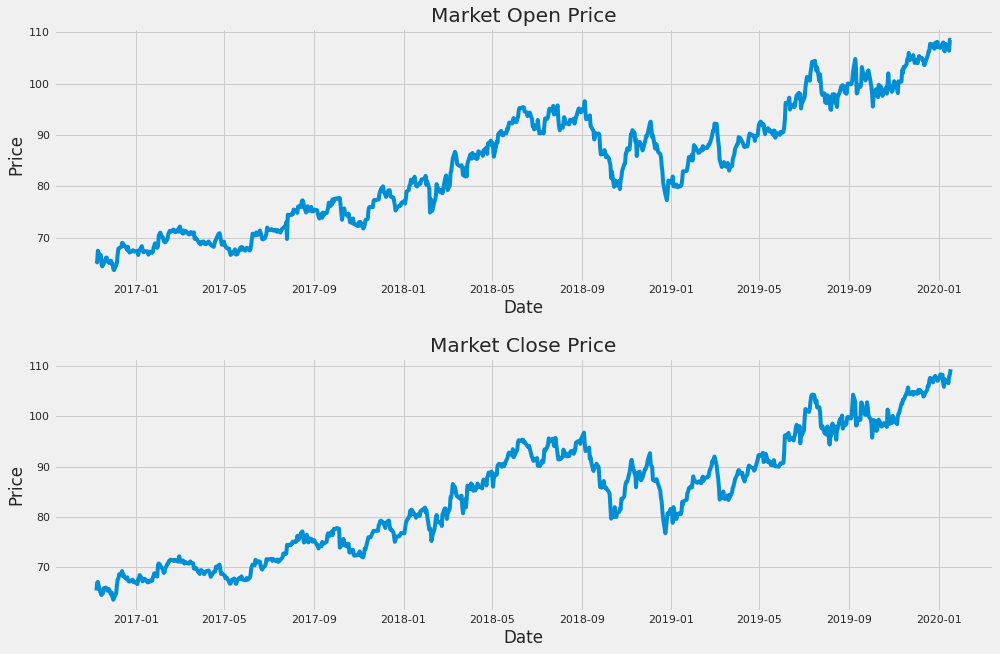

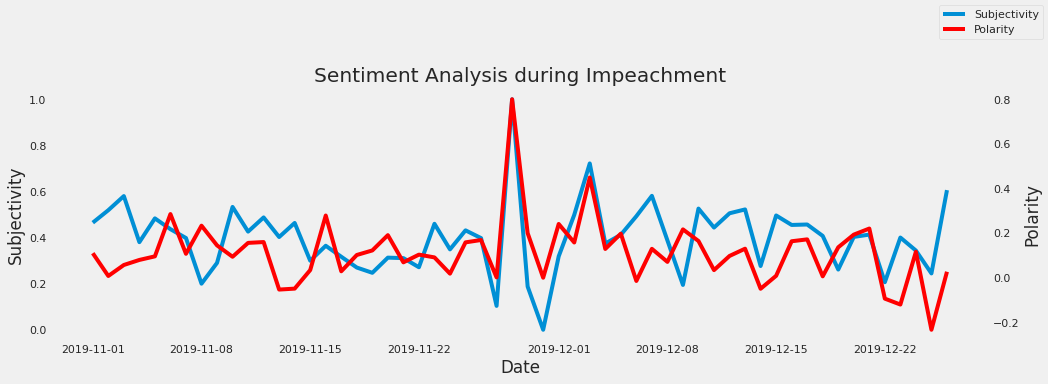

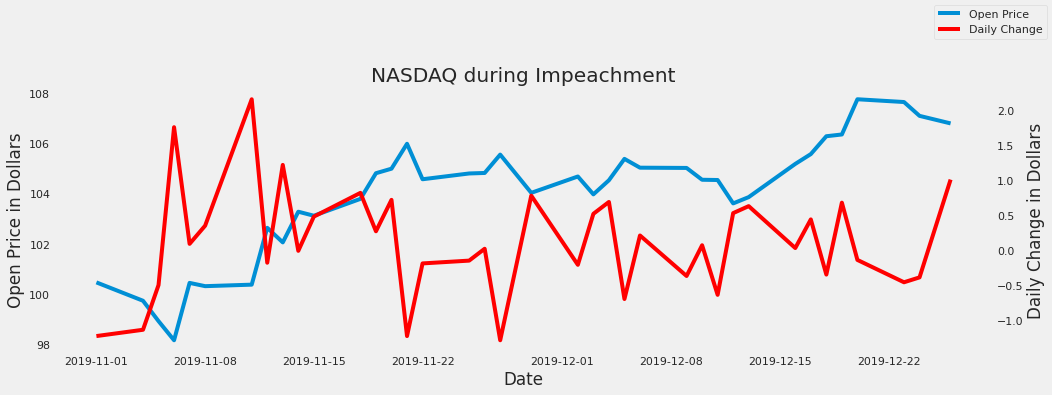

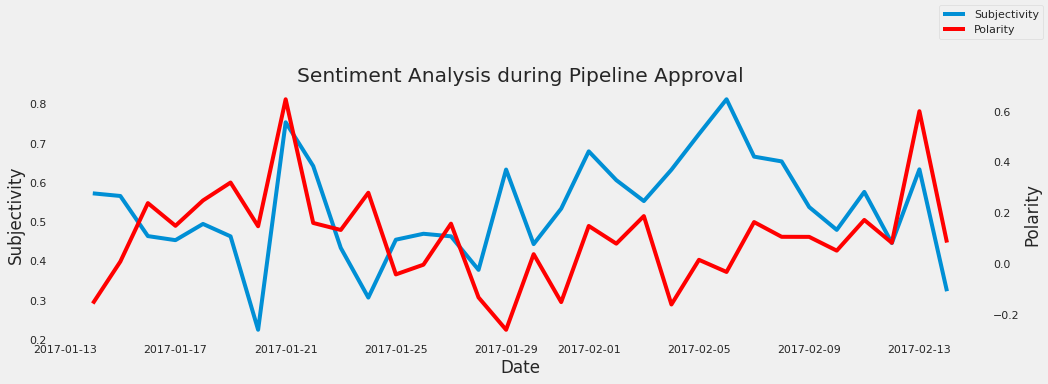

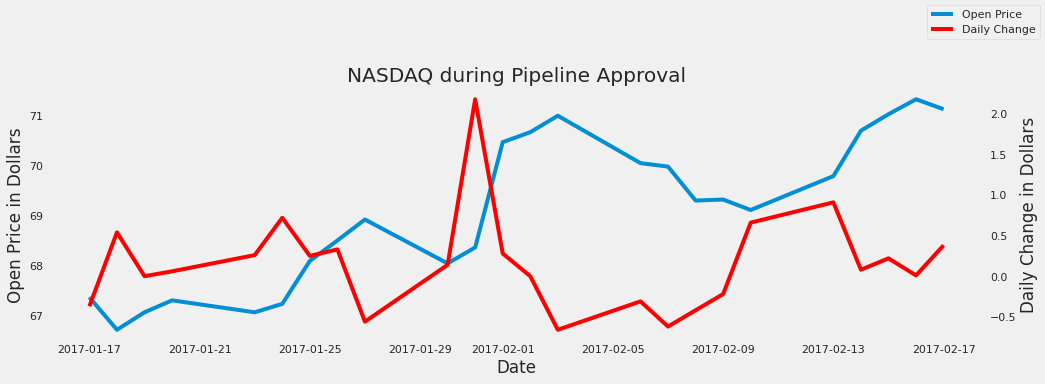

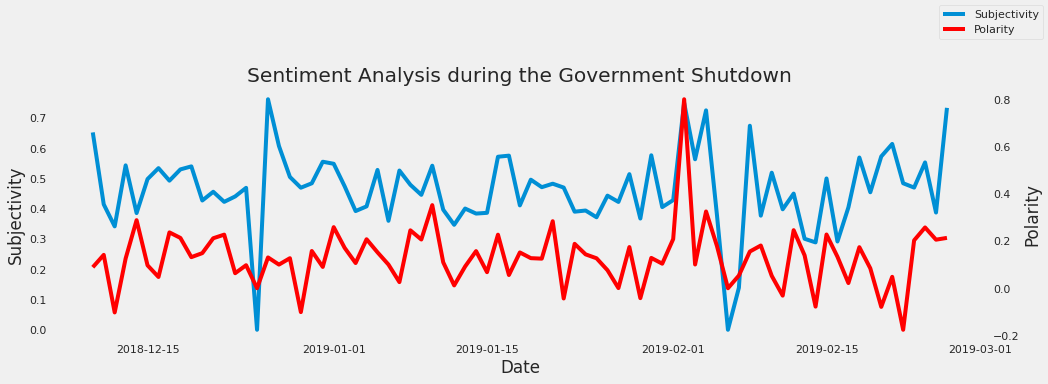

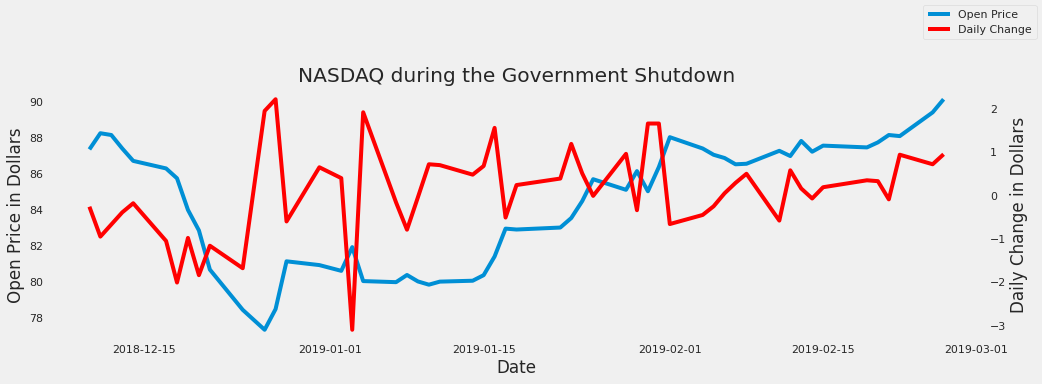

- 53: Analysis of Financial Markets based on President Trump's Tweets

- 54: Trending Youtube Videos Analysis

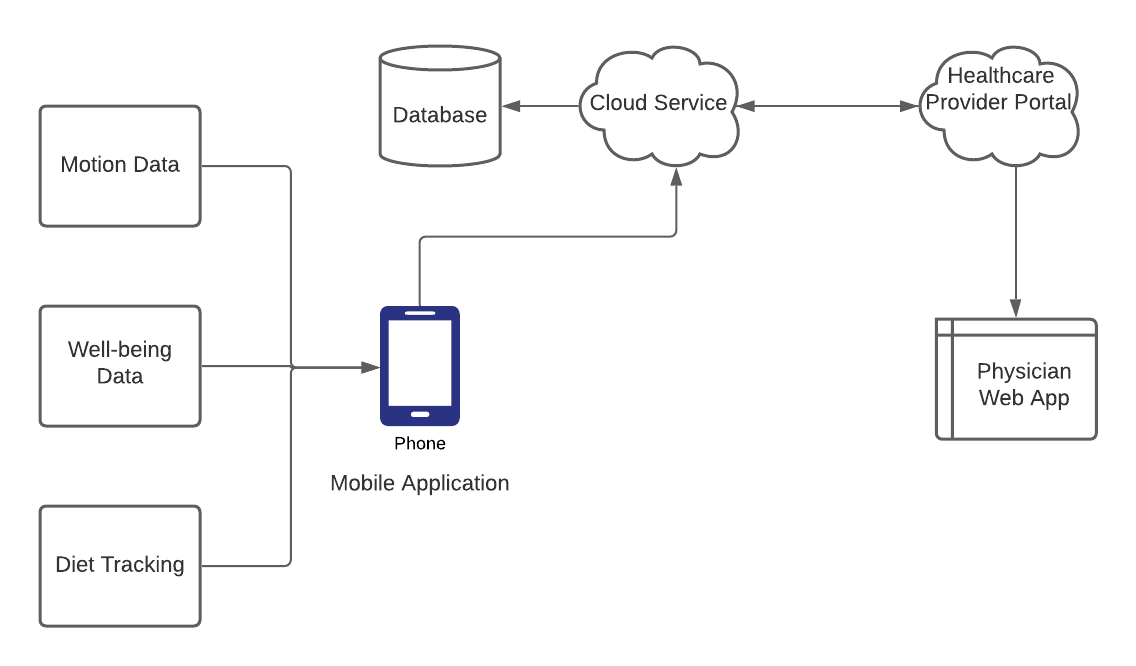

- 55: Review of the Use of Wearables in Personalized Medicine

- 56: Project: Identifying Agricultural Weeds with CNN

- 57: Detect and classify pathologies in chest X-rays using PyTorch library

1 - Investigating the Classification of Breast Cancer Subtypes using KMeans

Kehinde Ezekiel, su21-reu-362

Abstract

Breast cancer is a heterogeneous disease that is characterized by abnormal growth of the cells in the breast region[^1]. There are four major molecular subtypes of breast cancer. This classification is based on a 50-gene signature profiling test called PAM50. Each molecular subtype has a specific morphology and treatment plan. Early diagnosis and detection of possibly cancerous cells usually increase survival chances and provide a better approach for treatment and management. Different tools such as ultrasound, thermography and mammography utilize approaches like image processing and artificial intelligence to screen for and detect breast cancer. Artificial Intelligence (AI) involves the simulation of human intelligence in machines and can be used for learning or to solve problems. A major subset of AI is Machine Learning, which involves training a piece of software (called a model) to make useful predictions using a dataset.

In this project, a machine learning algorithm, KMeans, was implemented to group a proteomic dataset into clusters using its protein identifiers. These protein identifiers were associated with the PAM50 genes that were originally used to classify breast cancer into four molecular subtypes. The project revealed that further studies can be done to investigate the relationship between the data points in each cluster and the biological properties of the molecular subtypes, which could lead to new discoveries and the development of new therapies, effective treatment plans and better management of the disease. It also suggests that several machine learning algorithms can be leveraged to address healthcare issues like breast cancer and other diseases which are characterized by subtypes.

Keywords: AI, cancer, breast, algorithms, machine learning, healthcare, subtypes, classification.

1. Introduction

Breast cancer is the most common cancer, and also the primary cause of mortality due to cancer in females around the world. It is a heterogeneous disease that is characterized by the abnormal growth of cells in the breast region[^1]. Early diagnosis and detection of possibly cancerous cells in the breast usually increase survival chances and provide a better approach for treatment and management. Treatment and management often depend on the stage of cancer, the subtype, the tumor size, location and many other factors. During the last 20 years, four major intrinsic molecular subtypes of breast cancer (luminal A, luminal B, HER2-enriched and basal-like) have been identified, classified and intensively studied. Each subtype has its distinct morphologies and clinical treatment. The classification is based on gene expression profiling, specifically defined by the mRNA expression of 50 genes (also known as the PAM50 genes). This test is known as the PAM50 test. The accurate grouping of breast cancer into its relevant subtypes can improve treatment decision-making[^2]. The PAM50 test is now known as the Prosigna Breast Cancer Prognostic Gene Signature Assay 50 (known as Prosigna) and it analyzes the activity of certain genes in early-stage, hormone-receptor-positive breast cancer[^3]. This classification is based on the mRNA expression and the activity of 50 genes and it aims to estimate the risk of distant recurrence of breast cancer. Since the assay is based on mRNA expression, this project suggested that a classification based on the final product of mRNA, that is protein, can be implemented to investigate its role in the classification of molecular breast cancer subtypes. As a result, the project focused on a proteomic dataset which contained published iTRAQ proteome profiling of 77 breast cancer samples and expression values for the proteins of each sample.

Most times, breast cancer is diagnosed and detected through a combination of different approaches such as imaging (e.g. mammogram and ultrasound), physical examination by a radiologist and biopsy. Biopsy is used to confirm the breast cancer symptoms. However, research has shown that radiologists can miss up to 30% of breast cancer tissues during detection[^4]. This gap has brought about the introduction of Computer Aided Diagnosis (CAD) systems, which can help detect abnormalities in an efficient manner. CAD is a technology that utilizes artificial intelligence (AI) and medical image processing to find abnormal signs in the human body[^5]. Machine Learning is a subset of AI and it has several algorithms that can be used to build a model to perform a specific task or to predict a pattern. KMeans is one such algorithm.

Building a model using machine learning involves selecting and preparing the appropriate dataset, identifying the right machine learning algorithm to use, training the algorithm on the data to build a model, validating the resulting model’s performance on testing data and using the model on new data[^6]. In this project, KMeans was the algorithm used, and the datasets were prepared through several procedures such as filtering and merging. The KMeans clustering method was used to investigate the classification of the molecular subtypes. Its efficacy is often tested by a silhouette score. A silhouette score shows how similar an object is to its own cluster compared with other clusters. It ranges from -1 to 1, where a high value indicates that an object is well matched to its own cluster. A homogeneity score measures whether each cluster contains only samples that belong to a single class. It ranges from 0 to 1, with low values indicating low homogeneity.
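To make the silhouette score described above concrete, here is a minimal from-scratch sketch in plain Python. It is not the project's code; the toy points, the labels and the function names are assumptions chosen only for illustration. For each point, `a` is the mean distance to the other members of its own cluster, `b` is the smallest mean distance to the members of any other cluster, and the coefficient is `(b - a) / max(a, b)`.

```python
import math


def euclidean(p, q):
    """Euclidean distance between two equal-length points."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def silhouette_score(points, labels):
    """Mean silhouette coefficient over all points.

    Points that sit alone in a cluster contribute 0, a common convention.
    """
    clusters = {}
    for point, label in zip(points, labels):
        clusters.setdefault(label, []).append(point)
    if len(clusters) < 2:
        raise ValueError("the silhouette score needs at least two clusters")

    total = 0.0
    for point, label in zip(points, labels):
        own = clusters[label]
        if len(own) == 1:
            continue  # singleton cluster: coefficient is 0
        # The point itself is in `own` at distance 0, so divide by len - 1.
        a = sum(euclidean(point, other) for other in own) / (len(own) - 1)
        b = min(
            sum(euclidean(point, other) for other in members) / len(members)
            for other_label, members in clusters.items()
            if other_label != label
        )
        denom = max(a, b)
        if denom > 0:
            total += (b - a) / denom
    return total / len(points)


# Hypothetical toy data: two tight, well-separated groups.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
good = silhouette_score(points, [0, 0, 0, 1, 1, 1])  # labels match the groups
bad = silhouette_score(points, [0, 1, 0, 1, 0, 1])   # labels ignore the groups
print(f"matching labels: {good:.3f}, mismatched labels: {bad:.3f}")
```

A labeling that follows the natural groups scores close to 1, while a labeling that cuts across them scores much lower, which is the behavior the text relies on when comparing different numbers of clusters.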

The project investigated the most suitable number of clusters that could be generated for the proteome dataset, which would consequently provide an optimal classification of the protein expression values for the breast cancer samples. The proteins that were used in the KMeans analysis were the proteins associated with the PAM50 genes. The result of the project could provide insights to medical scientists and researchers to identify any interrelatedness between these clusters and the original classification of breast cancer molecular subtypes.

2. Datasets

Datasets are essential in drawing conclusions. In the diagnosis, detection and classification of breast cancer, datasets have been essential for drawing conclusions by identifying patterns. These datasets range from imaging datasets to clinical datasets, proteomic datasets and others. Large amounts of data have been collected due to new technological and computational advances, such as websites like NCBI and equipment like the electroencephalogram (EEG), which record clinical information. Medical researchers leverage these datasets to make useful health care decisions that affect a region, a gender or the world. The need for accuracy and reproducibility has led to the use of machine learning as an important tool for drawing conclusions.

Machine Learning involves training a piece of software, also known as a model, to identify patterns in a dataset and make useful predictions. There are several factors to be considered when using datasets. One of these is data privacy. Recently, measures have been taken to ensure the privacy of data. Some of these measures include replacing patients' names with codes and using documents and mobile applications that ask patients for permission before using their data. Recently, the World Health Organization (WHO) released its report on AI and provided principles that ensure that AI works for all. One of these is that the designers of AI technologies should satisfy regulatory requirements for safety, accuracy and efficacy for well-defined use cases or indications. Measures of quality control in practice and quality improvement in the use of AI must be available[^7].

3. The KMeans Approach

KMeans clustering is an unsupervised machine learning algorithm that makes inferences from datasets without referring to a known outcome. It aims to identify underlying patterns in a dataset by looking for a fixed number of clusters, known as k. The required number of clusters is chosen by the person building the model. KMeans was used in this project to group the protein IDs (or RefSeq_IDs) into clusters. Each cluster was designed to be associated with related protein IDs.
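The idea of searching for a fixed number of clusters k can be sketched with Lloyd's algorithm, the standard iterative procedure behind KMeans. The code below is a plain-Python illustration under stated assumptions, not the project's implementation: the function name, the optional `init` parameter and the toy points are hypothetical.

```python
import math
import random


def kmeans(points, k, n_iter=100, seed=0, init=None):
    """Cluster `points` (tuples of numbers) into k groups.

    Alternates between assigning each point to its nearest centroid and
    moving each centroid to the mean of its assigned points, until the
    centroids stop changing or n_iter is reached.
    """
    rng = random.Random(seed)
    centroids = list(init) if init is not None else rng.sample(points, k)
    labels = []
    for _ in range(n_iter):
        # Assignment step: index of the nearest centroid for every point.
        labels = [
            min(range(k), key=lambda j: math.dist(p, centroids[j]))
            for p in points
        ]
        # Update step: mean of the members of each cluster.
        new_centroids = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centroids.append(
                    tuple(sum(axis) / len(members) for axis in zip(*members))
                )
            else:
                new_centroids.append(centroids[j])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # converged
        centroids = new_centroids
    return labels, centroids


# Hypothetical toy data with two obvious groups; k is chosen by the modeler.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
labels, centroids = kmeans(points, k=2, init=[points[0], points[3]])
print(labels)
print(centroids)
```

Because k is an input rather than something the algorithm discovers, the choice of k has to be justified separately, for example with the scores discussed in this report.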

Three datasets were used for the algorithm. The first and main dataset was a proteomic dataset. It contained published iTRAQ proteome profiling of 77 breast cancer samples generated by the Clinical Proteomic Tumor Analysis Consortium (NCI/NIH). Each sample contained expression values for ~12000 proteins, with missing values present when a given protein could not be quantified in a given sample. The variables in the dataset included “RefSeq_accession_number” (also known as the RefSeq protein ID), “gene_symbol” (which was unique to each gene) and “gene_name” (which was the full name of the gene). The remaining columns were the log2 iTRAQ ratios for each of the 77 samples, while the last three columns were from healthy individuals.

The second dataset was a PAM50 dataset. It contained the list of genes and proteins used in the PAM50 classification system. The variables include the RefSeqProteinID, which matched the protein IDs (or RefSeq_IDs) in the main proteome dataset.

The third dataset was a clinical dataset of about 105 clinical breast cancer samples. 77 of these samples were the samples in the first dataset. The remaining samples were excluded as a result of protein degradation[^8]. The variables in the dataset are: ‘Complete TCGA ID’, ‘Gender’, ‘Age at Initial Pathologic Diagnosis’, ‘ER Status’, ‘PR Status’, ‘HER2 Final Status’, ‘Tumor’, ‘Tumor–T1 Coded’, ‘Node’, ‘Node-Coded’, ‘Metastasis’, ‘Metastasis-Coded’, ‘AJCC Stage’, ‘Converted Stage’, ‘Survival Data Form’, ‘Vital Status’, ‘Days to Date of Last Contact’, ‘Days to date of Death’, ‘OS event’, ‘OS Time’, ‘PAM50 mRNA’, ‘SigClust Unsupervised mRNA’, ‘SigClust Intrinsic mRNA’, ‘miRNA Clusters’, ‘methylation Clusters’, ‘RPPA Clusters’, ‘CN Clusters’, ‘Integrated Clusters (with PAM50)’, ‘Integrated Clusters (no exp)’, ‘Integrated Clusters (unsup exp)’.

During the preparation of the datasets for KMeans analysis, unused columns like “gene_name” and “gene_symbol” were removed from the first dataset. The first and third datasets were then merged. Prior to merging, the variable ‘Complete TCGA ID’ in the third dataset was found to correspond to the TCGA IDs in the first dataset. The Complete TCGA ID referred to a breast cancer patient, and some patients were found in both datasets. The TCGA IDs in the first dataset were renamed to match the syntax of the TCGA IDs in the third dataset. The first dataset was also transposed so that each sample formed a row and its expression values formed the columns. These steps were done in order to merge both datasets efficiently.
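The renaming, transposing and merging steps described above can be sketched roughly as follows. This is a plain-Python illustration rather than the project's code, which lives in the linked notebook. The ID formats (`AO-A12D.01TCGA` in the proteome table, `TCGA-AO-A12D` in the clinical table), the protein IDs, the toy values and the helper names are all assumptions made for illustration.

```python
def to_clinical_id(sample_column):
    """Convert an assumed proteome column name such as 'AO-A12D.01TCGA'
    into the assumed clinical form 'TCGA-AO-A12D'."""
    patient = sample_column.split(".")[0]
    return "TCGA-" + patient


def transpose(protein_rows):
    """Turn {protein_id: {sample: value}} into {sample: {protein_id: value}}."""
    by_sample = {}
    for protein_id, samples in protein_rows.items():
        for sample, value in samples.items():
            by_sample.setdefault(sample, {})[protein_id] = value
    return by_sample


def merge_on_id(proteome_by_sample, clinical_rows, id_key="Complete TCGA ID"):
    """Inner join: keep only the patients that appear in both tables."""
    clinical_by_id = {row[id_key]: row for row in clinical_rows}
    merged = []
    for sample, proteins in proteome_by_sample.items():
        patient_id = to_clinical_id(sample)
        if patient_id in clinical_by_id:
            merged.append({**clinical_by_id[patient_id], **proteins})
    return merged


# Hypothetical toy tables (values invented for the example).
proteome = {
    "NP_000001": {"AO-A12D.01TCGA": 0.5, "C8-A131.01TCGA": -1.2},
    "NP_000002": {"AO-A12D.01TCGA": 1.1, "C8-A131.01TCGA": 0.3},
}
clinical = [
    {"Complete TCGA ID": "TCGA-AO-A12D", "PAM50 mRNA": "HER2-enriched"},
    {"Complete TCGA ID": "TCGA-ZZ-0000", "PAM50 mRNA": "Luminal A"},
]
merged = merge_on_id(transpose(proteome), clinical)
print(merged)
```

An inner join is used here so that only patients present in both tables survive the merge; whether the project used an inner join or another join type is not stated in the text.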

After merging, the “PAM50 mRNA” variable from the second dataset was selected to join the merged dataset. This single dataset was named “pam50data”. It contained all the variables that were needed for the KMeans analysis, which included the genes that were used for the PAM50 classification (only 43 were available in the dataset), the complete TCGA ID of each of the 80 patients, and their molecular tumor type. Missing values in the dataset were imputed using SimpleImputer. Then, KMeans clustering was performed. The metrics were tested with cluster numbers of 3, 4, 5, 8, 20 and 79. The bigger numbers (20 and 79) were tested just for comparison. Further details on the code can be found in the project notebook[^9]. Two kernels[^10][^11] also provided insights for the written code.
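The imputation step can be illustrated with a small plain-Python sketch. The text does not say which strategy was passed to SimpleImputer, so this example assumes column-mean imputation, which is that class's default strategy. The function names and the toy matrix are hypothetical.

```python
import math


def is_missing(value):
    """Treat None and NaN as missing."""
    return value is None or (isinstance(value, float) and math.isnan(value))


def impute_column_means(rows):
    """Return a copy of `rows` where each missing entry is replaced by the
    mean of the observed values in the same column."""
    n_cols = len(rows[0])
    means = []
    for j in range(n_cols):
        observed = [row[j] for row in rows if not is_missing(row[j])]
        # Assumption for this sketch: a column with no observed values
        # falls back to 0.0.
        means.append(sum(observed) / len(observed) if observed else 0.0)
    return [
        [means[j] if is_missing(value) else value for j, value in enumerate(row)]
        for row in rows
    ]


# Hypothetical expression matrix: rows are samples, columns are proteins.
expression = [
    [1.0, None],
    [3.0, 4.0],
    [float("nan"), 8.0],
]
filled = impute_column_means(expression)
print(filled)
```

Filling gaps before clustering matters because distance-based methods such as KMeans cannot compute a distance when a coordinate is missing.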

5. Results and Images

Several scripts were written to determine the best number of clusters for the model. The quality of a clustering is often measured by scores such as the silhouette score, the homogeneity score and the adjusted Rand score.

For 3, 4, 5, 8, 20 and 79 clusters, the silhouette scores were 0.143, 0.1393, 0.1193, 0.50968, 0.0872 and 0.012, while the homogeneity scores were 0.4635, 0.4749, 0.1193, 0.5617, 0.6519 and 1.0, respectively. The homogeneity score for 79 clusters is 1.0 because the algorithm can assign every point to its own separate cluster. However, such a clustering is not useful for the dataset we used. A cluster of 3 works best since the silhouette score is high and the homogeneity score jumps ~2-fold.
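The reason a very large number of clusters trivially reaches a homogeneity of 1.0 can be seen from the definition, homogeneity = 1 - H(C|K) / H(C), where C are the true classes and K the cluster assignments. The following plain-Python sketch is an illustration only; the function name and the toy labels are assumptions, not the project's data.

```python
import math
from collections import Counter


def homogeneity_score(classes, clusters):
    """Homogeneity = 1 - H(classes | clusters) / H(classes).

    Equals 1.0 when every cluster contains members of a single class.
    """
    n = len(classes)
    class_counts = Counter(classes)
    cluster_counts = Counter(clusters)
    joint_counts = Counter(zip(classes, clusters))

    # Entropy of the class labels.
    h_classes = -sum((c / n) * math.log(c / n) for c in class_counts.values())
    if h_classes == 0:
        return 1.0  # only one class present: trivially homogeneous

    # Conditional entropy of the classes given the cluster assignment.
    h_cond = -sum(
        (n_ck / n) * math.log(n_ck / cluster_counts[k])
        for (_, k), n_ck in joint_counts.items()
    )
    return 1.0 - h_cond / h_classes


# Hypothetical labels for four samples from two classes.
classes = ["A", "A", "B", "B"]
one_per_point = homogeneity_score(classes, [0, 1, 2, 3])  # every point alone
single_cluster = homogeneity_score(classes, [0, 0, 0, 0])  # everything together
print(one_per_point, single_cluster)
```

When each point forms its own cluster, every cluster is pure by construction, so the score is perfect without the clustering being informative. This is why homogeneity is read together with the silhouette score rather than on its own.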

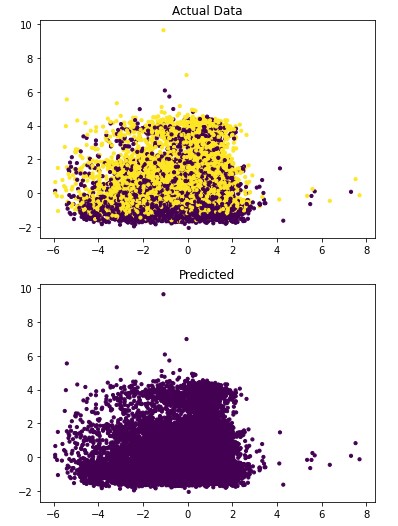

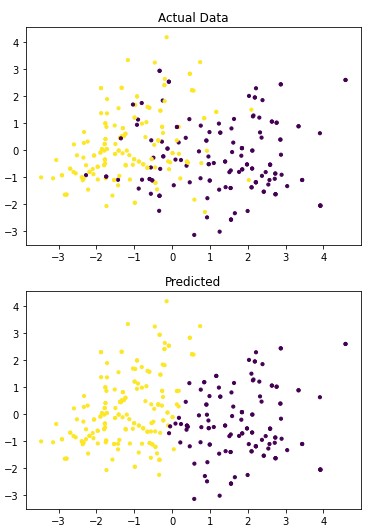

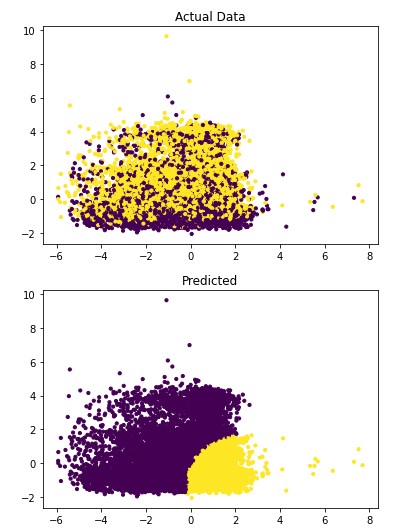

Figures 1 and 2 show the visualization of the results for 3 and 4 clusters.

Figure 1: The classification of Breast Cancer Molecular Subtypes using KMeans Clustering (k=3). Each data point represents the expression value for the genes that were used for clustering.

Figure 2: The classification of Breast Cancer Molecular Subtypes using KMeans Clustering (k=4). Each data point represents the expression value for the genes that were used for clustering.

6. Benchmark

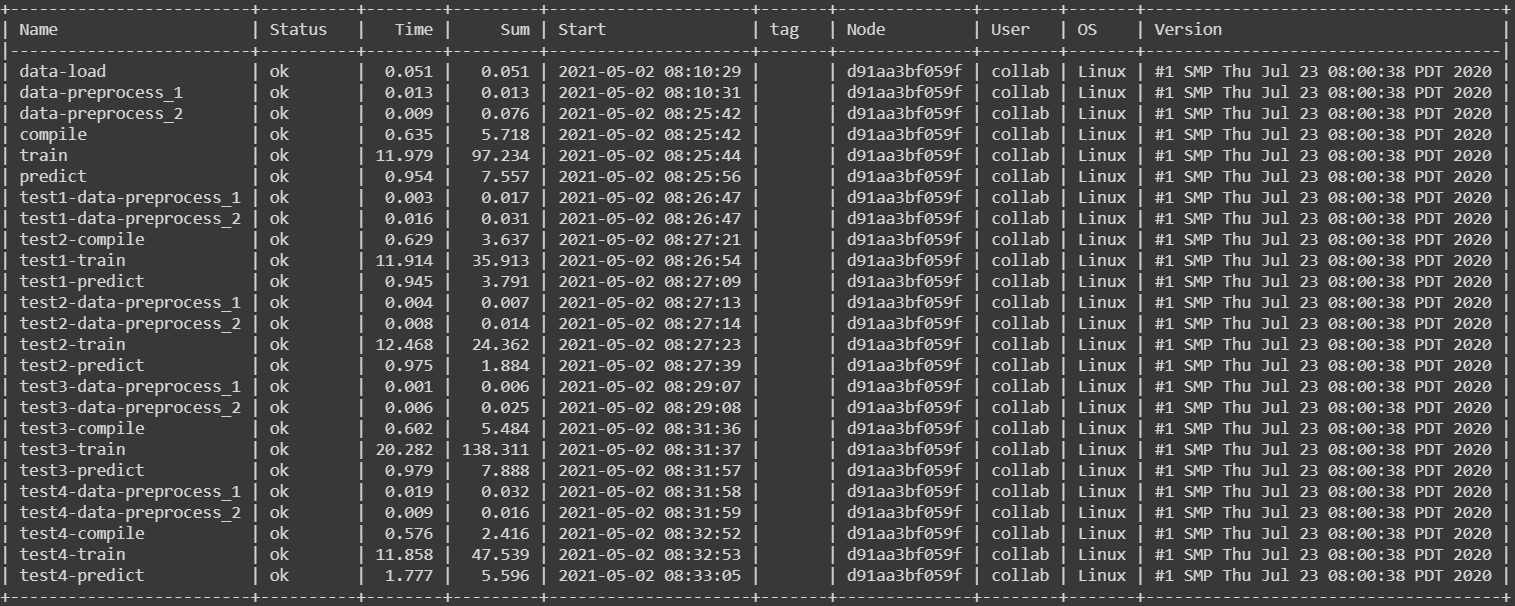

This program was executed on a Google Colab server and the entire runtime took 1.012 seconds. Table 1 lists the amount of time taken to loop over n_components. The n_components value comes from the code and refers to the features of the dataset.

| Name | Status | Time (s) |
|---|---|---|
| parallel 1 | ok | 0.647 |
| parallel 3 | ok | 0.936 |
| parallel 5 | ok | 0.952 |
| parallel 7 | ok | 0.943 |
| parallel 9 | ok | 1.002 |
| parallel 11 | ok | 0.991 |
| parallel 13 | ok | 0.958 |
| parallel 15 | ok | 1.012 |

Table 1: Benchmark. The table shows the time taken by the parallel process to run the for loop over n_components.

7. Conclusion

The results of the KMeans analysis showed that three clusters provided an optimal result for the classification using a proteomic dataset. A cluster of 3 provided a balanced silhouette and homogeneity score. This suggests that some interrelatedness could exist with the original PAM50 subtype classification, since classifying a protein dataset with a machine learning algorithm identified three clusters as the optimal result. Future research could use other machine learning algorithms, possibly a supervised learning algorithm, to identify the correlation between the clusters and the four molecular subtypes. This model can be improved on, and if it is shown that a relationship truly exists between the four molecular subtypes, more research could be done to identify the factors that contribute to the interrelatedness. This would lead medical scientists and researchers to work on better, innovative methods that will aid the treatment and management of breast cancer.

8. Acknowledgments

This project was immensely supported by Dr. Gregor von Laszewski. Also, a big appreciation to the REU Instructors (Carlos Theran, Yohn Jairo and Victor Adankai) for their contribution, support, teachings and advice. Also, gratitude to my colleagues who helped me out: Jacques Fleischer, David Umanzor and Sheimy Paz Serpa. Lastly, appreciation to Dr. Byron Greene, Florida A&M University, Indiana University and Bethune-Cookman University for providing a platform to be able to learn new things and embark on new projects.

9. References

[^1]: Akram, Muhammad et al. “Awareness and current knowledge of breast cancer.” Biological Research vol. 50,1 33. 2 Oct. 2017, doi:10.1186/s40659-017-0140-9

[^2]: Wallden, Brett et al. “Development and verification of the PAM50-based Prosigna breast cancer gene signature assay.” BMC Medical Genomics vol. 8 54. 22 Aug. 2015, doi:10.1186/s12920-015-0129-6

[^3]: Breastcancer.org, Prosigna Breast Cancer Prognostic Gene Signature Assay. https://www.breastcancer.org/symptoms/testing/types/prosigna

[^4]: L. Hussain, W. Aziz, S. Saeed, S. Rathore and M. Rafique, “Automated Breast Cancer Detection Using Machine Learning Techniques by Extracting Different Feature Extracting Strategies,” 2018 17th IEEE International Conference On Trust, Security And Privacy In Computing And Communications/ 12th IEEE International Conference On Big Data Science And Engineering (TrustCom/BigDataSE), 2018, pp. 327-331, doi: 10.1109/TrustCom/BigDataSE.2018.00057.

[^5]: Halalli, Bhagirathi et al. “Computer Aided Diagnosis - Medical Image Analysis Techniques.” 20 Dec. 2017, doi: 10.5772/intechopen.69792

[^6]: Salod, Zakia, and Yashik Singh. “Comparison of the performance of machine learning algorithms in breast cancer screening and detection: A protocol.” Journal of Public Health Research vol. 8,3 1677. 4 Dec. 2019, doi:10.4081/jphr.2019.1677

[^7]: WHO, WHO issues first global report on Artificial Intelligence (AI) in health and six guiding principles for its design and use. https://www.who.int/news/item/28-06-2021-who-issues-first-global-report-on-ai-in-health-and-six-guiding-principles-for-its-design-and-use

[^8]: Mertins, Philipp et al. “Proteogenomics connects somatic mutations to signalling in breast cancer.” Nature vol. 534,7605 (2016): 55-62. doi:10.1038/nature18003

[^9]: Kehinde Ezekiel, Project Code, https://github.com/cybertraining-dsc/su21-reu-362/blob/main/project/code/final_breastcancerproject.ipynb

[^10]: Kaggle_breast_cancer_proteomes, <https://pastebin.com/A0Wj41DP>

[^11]: Proteomes_clustering_analysis, https://www.kaggle.com/shashwatwork/proteomes-clustering-analysis

2 - Project: Detection of Autism Spectrum Disorder with a Facial Image using Artificial Intelligence

Myra Saunders, su21-reu-378

- Utilized CNN Code: autism_classification.ipynb

Abstract

This project uses artificial intelligence to explore the possibility of using a facial image analysis to detect Autism in children. Early detection and diagnosis of Autism, along with treatment, is needed to minimize some of the difficulties that people with Autism encounter. Autism is usually diagnosed by a specialist through various Autism screening methods. This can be an expensive and complex process. Many children that display signs of Autism go undiagnosed because their families cannot afford to pay for Autism screening and diagnosis. The development of a potentially inexpensive, but accurate way to detect Autism in children is necessary for low-income families. In this project, a Convolutional Neural Network (CNN) is utilized, along with a dataset obtained from Kaggle. This dataset consists of collected images of male and female, autistic and non-autistic children between the ages of two and fourteen years old. These images are used to train and test the CNN model. When one of the images is received by the model and importance is assigned to various features in the image, an output variable (autistic or non-autistic) is produced.

Keywords: Autism Spectrum Disorder, Detection, Artificial Intelligence, Deep Learning, Convolutional Neural Network.

1. Introduction

Autism Spectrum Disorder (ASD) is a broad range of lifelong developmental and neurological disorders that usually appear during early childhood. Autism affects the brain and can cause challenges with speech and nonverbal communication, repetitive behaviors, and social skills. Autism Spectrum Disorder can occur in all socioeconomic, ethnic, and racial groups, and can usually be detected and diagnosed from the age of three years old and up. As of June 2021, the World Health Organization has estimated that one in 160 children worldwide has an Autism Spectrum Disorder[^1]. Early detection of Autism, along with treatment, is crucial to minimize some of the difficulties and symptoms that people with Autism face[^2]. Symptoms of Autism Spectrum Disorder are normally identified based on psychological criteria[^3]. Specialists use techniques such as behavioral observation reports, questionnaires, and a review of the child’s cognitive ability to detect and diagnose Autism in children.

Many researchers believe that there is a correlation between facial morphology and Autism Spectrum Disorder, and that people with Autism have distinct facial features that can be used to detect their Autism Spectrum Disorder[^4]. Human faces encode important markers that can be used to detect Autism Spectrum Disorder by analyzing facial features, eye contact, facial movements, and more[^5]. Scientists found that children diagnosed with Autism share common facial feature distinctions from children who are not diagnosed with Autism[^6]. Some of these facial features are wide-set eyes, a short middle region of the face, and a broad upper face. Figure 1 provides an example of the facial feature differences between a child with Autism and a child without.

Figure 1: Image of Child with Autism (left) and Child with no Autism (right)[^7].

Due to the distinct features of Autistic individuals, we believe that it is necessary to explore the possibilities of using a facial analysis to detect Autism in children, using Artificial Intelligence (AI). Many researchers have attempted to explore the possibility of using various novel algorithms to detect and diagnose children, adolescents, and adults with Autism[^2]. Previous research has been done to determine if Autism Spectrum Disorder can be detected in children by analyzing a facial image[^7]. The author of this research collected approximately 1500 facial images of children with Autism from websites and Facebook pages associated with Autism. The facial images of non-autistic children were randomly downloaded from online sources and cropped. The author aimed to provide a first-level screening for autism diagnosis, whereby parents could submit an image of their child and in return receive a probability of the potential of Autism, without cost.

To contribute to this previous research[^7], this project proposes a model that can be used to detect the presence of Autism in children based on a facial image analysis. A deep learning algorithm is used to develop an inexpensive, accurate, and effective method to detect Autism in children. This project implements and utilizes a Convolutional Neural Network (CNN) classifier to explore the possibility of using a facial image analysis to detect Autism in children, with a target accuracy of 95% or higher. Most of the code used for this CNN model was obtained from the Kaggle dataset and was written by Fran Valuch[^8]. We made changes to some parts of this code, which will be discussed further in this project. The goal of this project is not to diagnose Autism, but to explore the possibility of detecting Autism at its early stage, using a facial image analysis.

2. Related Work

Previous work exists on the use of artificial intelligence to detect Autism in children using a facial image. Most of this previous work used the Autism Kaggle dataset[^7], which was also used for this project. One study utilized MobileNet followed by two dense layers in order to perform deep learning on the dataset[^6]. MobileNet was used because of its ability to compute outputs much faster, as it can reduce both computation and model size. The first dense layer was dedicated to distribution, and allowed customization of the weights fed into the second dense layer. The second dense layer allowed for classification. The architecture of this algorithm is shown below in Figure 2.

Figure 2: Algorithm Architecture using MobileNet[^6].

Training of this model completed after fifteen epochs, which resulted in a test accuracy of 94.64%. In this project we utilize a classic Convolutional Neural Network model using TensorFlow. This is done in hopes of obtaining a test accuracy of 95% or higher.

3. Dataset

The dataset used for this project was obtained from Kaggle[^7]. This dataset contained approximately 1500 facial images of children with Autism that were obtained from websites and Facebook pages associated with Autism. The facial images of non-autistic children were randomly downloaded from online sources. The pictures obtained were not of the best quality or consistency with respect to facial alignment. Therefore, the author developed a Python program to automatically crop the images so that, to the extent possible, they include only the face. These images consist of male and female children that are of different races and range from around ages two to fourteen.

This project uses version 12 of this dataset, which is the latest version. The dataset consists of three directories labeled test, train, and valid, along with a CSV file. The training set is labeled as train, and consists of ‘Autistic’ and ‘Non-Autistic’ subdirectories. These subdirectories contain 1269 images of autistic and 1269 images of non-autistic children respectively. The validation set located in the valid directory is separated into ‘Autistic’ and ‘Non-Autistic’ subdirectories. These subdirectories contain 100 images of autistic and 100 images of non-autistic children respectively. The testing set located in the test directory is divided into 100 images of autistic children and 100 images of non-autistic children. All of the images provided in this dataset are in 224 x 224 x 3 JPG format. Table 1 provides a summary of the content in the dataset.

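Table 1: Summary Table of Dataset.

| Directory | Autistic | Non-Autistic |
|---|---|---|
| train | 1269 | 1269 |
| valid | 100 | 100 |
| test | 100 | 100 |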

4. Proposed Methodology

Convolutional Neural Network (CNN)

This project utilizes a Convolutional Neural Network (CNN) to develop a program that can be used to detect the presence of Autism in children from a facial image analysis. If successful, this program can be used as an inexpensive method to detect Autism in children at its early stages. We believed that a CNN model would be the best way to create this program because of its low dependence on data preprocessing. A Convolutional Neural Network was also used because of its ability to take in an image, assign importance to different objects within the image, and identify them. CNNs also tend to achieve high accuracy in image recognition tasks. The dataset used contains 1269 training images per class that were used to train and test this Convolutional Neural Network model. The architecture of this model can be seen in Figure 3.

Figure 3: Architecture of utilized Convolutional Neural Network Model.

5. Results

The results of this project are evaluated in terms of sensitivity and accuracy using the confusion matrix of the CNN. The results also depend on how well the model was trained. This model was created to explore the possibility of detecting Autism in children at an early stage, using a facial image analysis. A Convolutional Neural Network classifier was used to create this model. For this CNN model we utilized max pooling and the Rectified Linear Unit (ReLU) activation, and trained for two epochs. This resulted in an accuracy of 71%. These results can be seen below in Figure 4. Figure 5 displays some of the images that were classified and labeled correctly (right) and others that were labeled incorrectly (left).
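To make the two building blocks named above concrete, here is a minimal pure-Python sketch of ReLU and 2 x 2 max pooling applied to a small feature map. It illustrates the operations only; it is not the model used in this project, and the 4 x 4 input values are made up.

```python
def relu(feature_map):
    """Apply ReLU element-wise: negative values become 0."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool_2x2(feature_map):
    """2x2 max pooling with stride 2 (a trailing odd row or column is dropped)."""
    pooled = []
    for r in range(0, len(feature_map) - 1, 2):
        row = []
        for c in range(0, len(feature_map[0]) - 1, 2):
            row.append(max(
                feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1],
            ))
        pooled.append(row)
    return pooled


# Hypothetical 4x4 feature map produced by a convolution layer.
example = [
    [-1.0, 2.0, 0.5, -3.0],
    [4.0, -2.0, 1.0, 0.0],
    [0.0, -1.0, -5.0, 2.5],
    [3.0, 1.5, -0.5, -2.0],
]
activated = relu(example)
pooled = max_pool_2x2(activated)
print(pooled)  # [[4.0, 1.0], [3.0, 2.5]]
```

Pooling halves each spatial dimension, which is why a 224 x 224 input shrinks quickly as it passes through successive convolution and pooling stages.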


Figure 4: Results after Execution.

validation loss: 57% - validation accuracy: 68% - training loss: 55% - training accuracy: 71%

Figure 5: Correct Labels and Incorrect Labels.

6. Benchmark

Figure 6 shows the Confusion Matrix of the Convolutional Neural Network model used in this project. The Confusion Matrix displays a summary of the model’s predicted results after its attempt to classify each image as either autistic or non-autistic. Out of the 200 images, 159 were labeled correctly and 41 were labeled incorrectly.

Figure 6: Confusion Matrix of the Convolutional Neural Network model.
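The counts reported for the confusion matrix translate directly into an accuracy figure. The sketch below only performs that arithmetic; the function name `accuracy` is illustrative, and the per-class breakdown of the 159 correct and 41 incorrect labels is not given in the text, so it is not modeled here.

```python
def accuracy(correct, incorrect):
    """Fraction of correctly labeled images."""
    total = correct + incorrect
    if total == 0:
        raise ValueError("no predictions to evaluate")
    return correct / total


# Counts stated for the 200 images summarized in Figure 6.
test_accuracy = accuracy(correct=159, incorrect=41)
print(f"{test_accuracy:.1%}")  # 79.5%
```

Note that this 79.5% applies to the 200 images summarized in the confusion matrix, while the 71% reported in the Results section is the training accuracy.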

The StopWatch module from Cloudmesh-common9 was used to measure and study the training and testing time of the model. Table 2 shows the cloudmesh benchmark output.

Table 2: Cloudmesh Benchmark

| Name | Status | Time (s) | Sum (s) | Start | tag | msg | Node | User | OS | Version |
|---|---|---|---|---|---|---|---|---|---|---|
| Train | ok | 3745.28 | 3745.28 | 2021-08-10 16:08:57 | | | dab8db0489cd | collab | Linux | #1 SMP Sat Jun 5 09:50:34 PDT 2021 |
| Test | ok | 2.088 | 2.088 | 2021-08-10 17:43:09 | | | dab8db0489cd | collab | Linux | #1 SMP Sat Jun 5 09:50:34 PDT 2021 |
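The idea behind named timers such as Train and Test can be sketched with the Python standard library alone. This is a stand-in for illustration, not the Cloudmesh StopWatch API; the `timer` helper and the placeholder workloads are assumptions.

```python
import time
from contextlib import contextmanager

timings = {}  # timer name -> elapsed seconds


@contextmanager
def timer(name):
    """Record the wall-clock time spent inside the with-block under `name`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[name] = time.perf_counter() - start


with timer("Train"):
    sum(i * i for i in range(100_000))  # placeholder for model training

with timer("Test"):
    sum(range(1_000))  # placeholder for model evaluation

for name, seconds in timings.items():
    print(f"{name}: {seconds:.3f} s")
```

A dedicated benchmark library additionally records the node, user, and operating system, which is what makes the output in Table 2 comparable across machines.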

7. Conclusions and Future Work

Autism Spectrum Disorder is a broad range of lifelong developmental and neurological disorders and is considered one of the fastest-growing disorders in children. The World Health Organization has estimated that one in 160 children worldwide has an Autism Spectrum Disorder1. Techniques that are used by specialists to detect autism can be time consuming and inconvenient for some families. Considering these factors, finding effective ways to detect Autism in children is a necessity. The aim of this project was to create a model that would analyze facial images of children and determine whether the child is Autistic or not. This was done in hopes of reaching 95% accuracy or higher. After executing the model we obtained an accuracy of 71%.

As shown in the results section above, some of the pictures that were initially labeled as Autistic were labeled incorrectly after running the model. This low accuracy could be improved if the CNN model is combined with other techniques such as transfer learning with a pretrained network like VGG-19. It could also be improved by using a dataset that includes a wider variety and larger number of images. We could also ensure that the images in the dataset include children of a wider age range. These improvements could increase our chances of obtaining an accuracy of 95% or higher. When this model is improved and an accuracy of at least 95% is achieved, future work can be done to create a model that can be used for Autistic individuals outside of the dataset age range (2 - 14 years old).

8. Acknowledgments

The author of this project would like to express a vote of thanks to Yohn Jairo, Carlos Theran, and Dr. Gregor von Laszewski for their encouragement and guidance throughout this project. A special vote of thanks also goes to Florida A&M University for funding this wonderful research program. The completion of this project could not have been possible without their support.

9. References

1. World Health Organization. 2021. Autism spectrum disorders, [Online resource] https://www.who.int/news-room/fact-sheets/detail/autism-spectrum-disorders

2. Raj, S., and Masood, S., 2020. Analysis and Detection of Autism Spectrum Disorder Using Machine Learning Techniques, [Online resource] https://reader.elsevier.com/reader/sd/pii/S1877050920308656?token=D9747D2397E831563D1F58D80697D9016C30AAC6074638AA926D06E86426CE4CBF7932313AD5C3504440AFE0112F3868&originRegion=us-east-1&originCreation=20210704171932

3. Khodatars, M., Shoeibi, A., Ghassemi, N., Jafari, M., Khadem, A., Sadeghi, D., Moridian, P., Hussain, S., Alizadehsani, R., Zare, A., Khosravi, A., Nahavandi, S., Acharya, U. R., and Berk, M., 2020. Deep Learning for Neuroimaging-based Diagnosis and Rehabilitation of Autism Spectrum Disorder: A Review. [Online resource] https://arxiv.org/pdf/2007.01285.pdf

4. Musser, M., 2020. Detecting Autism Spectrum Disorder in Children using Computer Vision, Adapting facial recognition models to detect Autism Spectrum Disorder. [Online resource] https://towardsdatascience.com/detecting-autism-spectrum-disorder-in-children-with-computer-vision-8abd7fc9b40a

5. Akter, T., Ali, M. H., Khan, I., Satu, S., Uddin, Jamal., Alyami, S. A., Ali, S., Azad, A., and Moni, M. A., 2021. Improved Transfer-Learning-Based Facial Recognition Framework to Detect Autistic Children at an Early Stage. [Online resource] https://www.mdpi.com/2076-3425/11/6/734

6. Beary, M., Hadsell, A., Messersmith, R., Hosseini, M., 2020. Diagnosis of Autism in Children using Facial Analysis and Deep Learning. [Online resource] https://arxiv.org/ftp/arxiv/papers/2008/2008.02890.pdf

7. Piosenka, G., 2020. Detect Autism from a facial image. [Online resource] https://www.kaggle.com/gpiosenka/autistic-children-data-set-traintestvalidate?select=autism.csv

8. Valuch, F., 2021. Easy Autism Detection with TF. [Online resource] https://www.kaggle.com/franvaluch/easy-autism-detection-with-tf/comments

9. Gregor von Laszewski, Cloudmesh StopWatch and Benchmark - Cloudmesh-Common, [GitHub] https://github.com/cloudmesh/cloudmesh-common

3 - Project: Analyzing the Advantages and Disadvantages of Artificial Intelligence for Breast Cancer Detection in Women

RonDaisja Dunn, su21-reu-377

Abstract

AI systems are improving diagnostic accuracy and significantly decreasing unnecessary biopsies. AI algorithms for workflow improvement and outcome analysis are also advancing. Although artificial intelligence can be beneficial for detecting and diagnosing breast cancer, its techniques have some limitations. The available data may be insufficient in quality, quantity, or appropriateness. When compared to other imaging modalities, breast ultrasound screening offers numerous benefits, including a lower cost, the absence of ionizing radiation, and the ability to examine images in real time. Despite these benefits, reading breast ultrasound is a difficult process. Radiologists assess ultrasound images using different characteristics, such as lesion size, shape, margin, echogenicity, posterior acoustic signals, and orientation, which vary substantially across individuals. The development of AI systems for the automated detection of breast cancer in ultrasound screening images has been aided by recent breakthroughs in deep learning.


Keywords: project, reu, breast cancer, Artificial Intelligence, diagnosis detection, women, early detection, advantages, disadvantages

1. Introduction

Breast cancer is the leading cause of cancer death in women worldwide. This deadly form of cancer has impacted many women across the globe. African American women have been the most negatively impacted: their death rates due to breast cancer have surpassed those of all other ethnicities. Serial screening is an essential part of detecting breast cancer, and it is the most effective way to detect the early stages of this disease and decrease mortality rates. Some women suspect that they could have breast cancer after discovering a painless lump in their breast. Other women learn that there may be a problem through annual and bi-annual breast screenings. Screening is less common in younger women, because breast cancer is most likely to be detected in older women. Women from age 55 to 69 are likely to be diagnosed with breast cancer. Women who regularly receive mammograms reduce their chance of breast cancer mortality.

Artificial Intelligence is the branch of computer science dedicated to the development of computer algorithms that accomplish tasks traditionally associated with human intelligence, such as the ability to learn and solve problems. This branch of computer science is relevant to diagnosing breast cancer because of the use of radiology. Radiological images can be quantified and used to inform and train algorithms. Many terms relate to Artificial Intelligence, such as artificial neural networks (ANNs), machine learning (ML), and deep learning (DL). These techniques perform tasks in healthcare, including radiology. Machine learning interprets pixel data and patterns from mammograms. Input features such as microcalcifications are used to characterize findings as benign or malignant. Deep learning is effective in breast imaging, where it can identify simple features such as edges, textures, and lines. More intricate features such as organs, shapes, and lesions can also be detected. Neural network algorithms are used to extract image features that are beyond human recognition.

Artificial intelligence (AI) refers to computer systems that can perform complicated data analysis and image recognition tasks. Massive processing power and the application of deep learning techniques made this possible, and AI is increasingly being used in the medical field. Mammograms are the x-rays used to detect breast cancer in women. Early detection is important to reduce deaths, because that is when the cancer is most treatable. Screening has produced false reports in 15%-35% of screened women. Human error and the limited ability of the human eye to see the cancer are the reasons for the false reports. Artificial Intelligence offers many advantages when detecting breast cancer. These advantages include fewer false reports, fewer missed cases because the AI program does not get tired, and a reduction in the effort of reading thousands of mammograms.

2. Methods From Literature Review

The goal was to highlight the available evidence on test accuracy and clinical utility, as well as any gaps in that evidence. Women are screened by having images taken of each breast from different views. Two readers are assigned to interpret the images in sequence. Each reader decides whether the image is normal or whether the woman should be recalled for further examination. Arbitration is used when there is a disagreement. If a woman is recalled, she will be offered extra testing to see if she has cancer.

Another goal is to detect cancer at an earlier stage during screening so that therapy can be more successful. Some malignancies found during screening, on the other hand, might never have given the woman symptoms. Overdiagnosis is the term used to describe the detection of a cancer that would never have caused the person harm during their lifetime. As a result, overtreatment (unnecessary treatment) occurs. In addition, since some malignancies are overlooked during screening, some women are falsely reassured.

Diagnostic procedures differ between radiologists and Artificial Intelligence networks. In a breast ultrasound exam, radiologists look for abnormal findings in each image, while the AI network processes each image in the exam separately using a ResNet-18 model and generates a saliency map that identifies the most important regions. Radiologists focus on images with abnormal lesions, whereas the AI network assigns each image an attention score based on its relative importance. To make a final diagnosis, radiologists consider signals in all images, and the AI computes final predictions for benign and malignant findings by combining information from all images using an attention mechanism.
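The attention step described above can be illustrated with a small sketch: per-image scores are turned into weights with a softmax, and the exam-level prediction is the weighted sum of per-image predictions. This is a simplified illustration under assumed inputs, not the published system; the function names and the example numbers are hypothetical.

```python
import math


def softmax(scores):
    """Convert raw attention scores into weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def aggregate_exam(image_probs, attention_scores):
    """Combine per-image malignancy probabilities into one exam-level value."""
    if not image_probs or len(image_probs) != len(attention_scores):
        raise ValueError("need one attention score per image")
    weights = softmax(attention_scores)
    return sum(w * p for w, p in zip(weights, image_probs))


# Hypothetical exam with three images; the second image gets the most attention.
exam_probability = aggregate_exam([0.10, 0.85, 0.20], [0.5, 3.0, 0.2])
print(round(exam_probability, 3))
```

Images with higher attention scores dominate the result, which mirrors how a reader gives most weight to the images that show a suspicious lesion.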

3. Results From Literature Review

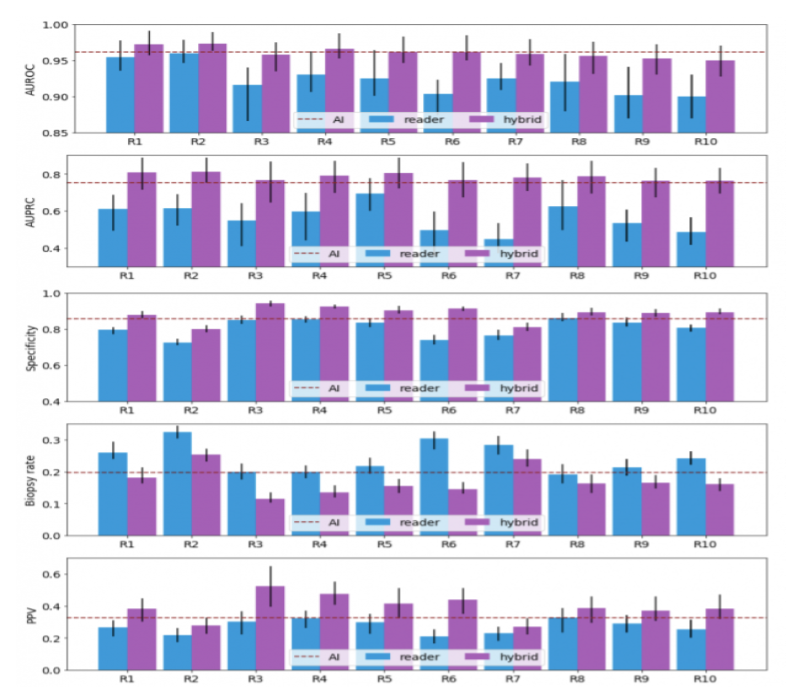

Using pathology data, each breast in an exam was given a label indicating the presence of cancer. Image-guided biopsy or surgical excision was used to collect tissue for pathological tests. The AI system was shown to perform comparably to board-certified breast radiologists in the reader study subgroup. In this reader study, the AI system detected tumors with the same sensitivity as radiologists, but with greater specificity, a higher positive predictive value (PPV), and a lower biopsy rate. Furthermore, the AI system outperformed all ten radiologists in terms of the area under the receiver operating characteristic curve (AUROC) and the area under the precision-recall curve (AUPRC). This pattern was replicated in the subgroup study, which revealed that the algorithm could correctly interpret ultrasound screening examinations that radiologists considered challenging.

Figure 1: Qualitative analysis of saliency maps. This figure displays the sagittal and transverse views of the lesion (left) and the AI’s saliency maps indicating the predicted locations of benign (center) and malignant (right) findings in each of the six cases (a-f) from the reader study.

4. Datasets

Figure 2: The probabilistic predictions of each hybrid model were dichotomized to match the reader’s sensitivity. The dichotomization of the AI’s predictions matches the sensitivity of the average radiologist. Readers' AUROC, AUPRC, specificity, and PPV improve as a result of the collaboration between AI and readers, whereas biopsy rates decrease.
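Dichotomizing predictions to match a given sensitivity means choosing the cutoff at which the model flags the same share of true cancers as the reader does. The following is a minimal sketch of that idea with made-up probabilities and labels; the function names are illustrative and this is not the procedure's original code.

```python
import math


def threshold_for_sensitivity(probs, labels, target_sensitivity):
    """Return the highest cutoff whose sensitivity is at least the target."""
    positives = sorted((p for p, y in zip(probs, labels) if y == 1), reverse=True)
    if not positives:
        raise ValueError("need at least one positive case")
    # Number of positive cases that must be flagged to reach the target.
    needed = max(1, math.ceil(target_sensitivity * len(positives) - 1e-9))
    return positives[needed - 1]


def dichotomize(probs, threshold):
    """Turn probabilities into 0/1 decisions using the cutoff."""
    return [1 if p >= threshold else 0 for p in probs]


# Hypothetical predictions: four malignant cases (1) and four benign cases (0).
probs = [0.95, 0.80, 0.60, 0.40, 0.70, 0.30, 0.20, 0.10]
labels = [1, 1, 1, 1, 0, 0, 0, 0]

cutoff = threshold_for_sensitivity(probs, labels, target_sensitivity=0.75)
decisions = dichotomize(probs, cutoff)
print(cutoff, decisions)  # 0.6 [1, 1, 1, 0, 1, 0, 0, 0]
```

Once the sensitivities are matched this way, the remaining metrics such as specificity and PPV can be compared between the model and the reader on equal terms.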

5. Conclusion

AI assistance offers several benefits for mammogram screening. The reduction in treatment expenses is one of the advantages of screening. Treatment for people who are diagnosed sooner is less invasive and less expensive, which may lessen patient anxiety and improve their prognosis. One or all human readers could be replaced by AI. AI may be used to pre-screen images, with only the most suspicious ones being reviewed by humans. AI could also be employed as a reader aid, with the human reader relying on the AI system for guidance during the reading process.

However, there is also concern that AI could discover changes that would never harm women. Because the adoption of AI systems will alter the current screening program, it is crucial to determine how accurate AI is in breast screening clinical practice before making any changes. It is uncertain how effective AI is at detecting breast cancer in different groups of women (for example, different ethnic groups). AI could significantly reduce staff workload, as well as the proportion of cancers overlooked during screening and the number of women who are asked to return for more tests even though they do not have cancer. According to the findings of the reader study, teamwork between AI systems and radiologists increases diagnostic accuracy and decreases false positive biopsies for all 10 radiologists. This research indicated that integrating the AI system’s predictions enhanced the performance of all readers.

6. Acknowledgments

Thank you to the extremely intellectual, informative, patient and courteous instructors of the Research Experience for Undergraduates Program.

- Carlos Theran, REU Instructor

- Yohn Jairo Parra, REU Instructor

- Gregor von Laszewski, REU Instructor

- Victor Adankai, Graduate Student

- REU Peers

- Florida Agricultural and Mechanical University

7. References

- Coleman C. Early Detection and Screening for Breast Cancer. Semin Oncol Nurs. 2017 May;33(2):141-155. doi: 10.1016/j.soncn.2017.02.009. Epub 2017 Mar 29. PMID: 28365057

- Freeman, K., Geppert, J., Stinton, C., Todkill, D., Johnson, S., Clarke, A., & Taylor-Phillips, S. (2021, May 10). Use of Artificial Intelligence for Image Analysis in Breast Cancer Screening. https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/987021/AI_in_BSP_Rapid_review_consultation_2021.pdf

- Li, J., Zhou, Z., Dong, J., Fu, Y., Li, Y., Luan, Z., & Peng, X. (2021). Predicting breast cancer 5-year survival using machine learning: A systematic review. PloS one, 16(4), e0250370.

- Mendelson, Ellen B., Artificial Intelligence in Breast Imaging: Potentials and Limitations. American Journal of Roentgenology 2019 212:2, 293-299

- Seely, J. M., & Alhassan, T. (2018). Screening for breast cancer in 2018-what should we be doing today?. Current oncology (Toronto, Ont.), 25(Suppl 1), S115–S124.

- Shamout, F. E., Shen, A., Witowski, J., Oliver, J., & Geras, K. (2021, June 24). Improving Breast Cancer Detection in Ultrasound Imaging Using AI. NVIDIA Developer Blog. https://developer.nvidia.com/blog/improving-breast-cancer-detection-in-ultrasound-imaging-using-ai/

4 - Increasing Cervical Cancer Risk Analysis

Theresa Jean-Baptistee, su21-reu-369

Abstract

Cervical cancer is a growing problem that affects many women across the nation. In this project we analyze the risk factors that produce a higher chance of developing this cancer. To analyze these risk factors, a machine learning technique is implemented to help us understand the leading factors of cervical cancer.


Keywords: Cervical, Cancer, Diseases, Data, conditions

1. Introduction

Cervical cancer is a disease that is increasing among women nationwide. It occurs within the cells of the cervix (as can be seen in stage 1 of the image below). It is the fourth leading cancer, with about 52,800 cases found each year, predominantly in less developed countries. Cervical cancer occurs most commonly in women in their 50s, with symptoms such as watery and bloody discharge, bleeding, and painful intercourse. Two other common risk factors are an early start of sexual activity and multiple partners. The most common way to determine whether one may be affected by this disease is through a pap smear. When the disease is detected early, there is a better chance of successful treatment.

Cervical cancer has important consequences for reproduction, because it can determine whether a birth is successful and can lead to complications such as premature birth. The cervix helps keep the fetus stable within the uterus during pregnancy; towards the end of development, it softens and dilates for the birth of the child. After treatment for this cancer, conceiving a child can be extremely difficult. Most treatments begin with a biopsy that removes the affected areas of cervical tissue. If the cancer continues to spread, radiotherapy might be recommended, which may affect the womb. Lastly, one may need to have a hysterectomy, which is the removal of the womb.

In this paper, we study the causes and risk factors that may place someone in this position. If the cancer is spotted early, it would not affect someone’s chance of conceiving or their reproductive organs. Using various data sets, we study how the possible causes align, with machine learning as the primary technique used to interpret the relation between the variables and the risk of cervical cancer.

Model

2. DataSets

The data sets obtained show the primary risk factors that affect women ages 15 and above. The factors that stood out the most were age, start of sexual activity, tobacco intake, and IUD use. Age and start of sexual activity may be primary factors because a person with multiple partners is more likely to contract an STD, and with it this disease, without really knowing what the other person may be doing outside of the encounter. Tobacco intake weakens the immune system, making a person more susceptible to the disease. IUD use has the highest number in the data set, making it a primary factor that may put a person at risk. This device aids the prevention of pregnancy by thickening the mucus of the cervix, which could later cause infection or make a person more susceptible to it.

IUD Visualization

Visualization of the Effect of Tobacco on the Cervix

Correlation of Age and Start of Sexual Activity
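A correlation such as the one between age and start of sexual activity can be computed with the Pearson coefficient. The sketch below uses only the standard library; the sample values are invented for illustration and are not taken from the data set.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant data")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical values: current age and age at start of sexual activity.
ages = [18, 25, 32, 41, 52]
first_activity = [15, 17, 18, 16, 20]
print(round(pearson(ages, first_activity), 2))
```

A value near +1 or -1 indicates a strong linear relationship, while a value near 0 indicates little linear relationship between the two columns.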

3. Other People's Work

The research of others has made a huge impact on this project, from the data to the knowledge needed to conduct it. Through the various research sites, we were able to see how various day-to-day activities affect women in the long term. The paper Cervical Cancer Diagnosis Using a Chicken Swarm Optimization Based Machine Learning Method was a big aid throughout the project, explaining the stages of cervical cancer, the ways it can be treated, and the effects it may cause. With the data from the UCI Machine Learning Repository, we were able to find useful correlations in the data, which helped the implemented machine learning algorithm with the classification task.

4. Explanation of Confusion Matrix

The confusion matrix generated by the multilayer perceptron summarizes the predicted results against the data obtained. Zero means that no cervical cancer is observed, and one means that cervical cancer is observed. One hundred sixty-two is the highest count on the chart, while the lowest counts are two and eight, which is quite a jump.
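How a binary confusion matrix is assembled from true and predicted labels can be sketched as follows. The label lists are invented for illustration and do not reproduce the counts reported above.

```python
def confusion_matrix(y_true, y_pred):
    """Build a 2x2 matrix: rows are actual classes, columns are predicted classes.

    Class 0 means no cervical cancer, class 1 means cervical cancer.
    """
    matrix = [[0, 0], [0, 0]]
    for actual, predicted in zip(y_true, y_pred):
        matrix[actual][predicted] += 1
    return matrix


# Hypothetical labels for six patients.
y_true = [0, 0, 0, 1, 1, 0]
y_pred = [0, 0, 1, 1, 0, 0]
print(confusion_matrix(y_true, y_pred))  # [[3, 1], [1, 1]]
```

The diagonal entries count correct predictions, and the off-diagonal entries count the two kinds of error: a healthy patient flagged as ill, and an ill patient missed.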

5. Benchmark

6. Conclusion

In conclusion, the results suggest that women are more at risk once they begin and continue sexual activity. The count of 162 is dramatic and is not necessarily affected by age, while 0 is only seen when a person does not partake in sexual activity. In the future, I hope to keep furthering my knowledge of cervical cancer and to come up with a realistic method to cure this disease, so that those affected can continue to live their lives.

7. Acknowledgments

The author would like to thank Yohn, Carlos, Gregor, Victor, and Jacques for all of their help. Thank you!

8. References

5 - Cyber Attacks Detection Using AI Algorithms

Victor Adankai, su21-reu-365

Abstract

Here comes a short abstract of the project that summarizes what it is about


Keywords: AI, ML, DL, Cybersecurity, Cyber Attacks.

1. Introduction

- Find literature about AI and the detection of cyber attacks on IoT devices.

- Analyze the literature and explain how AI is beneficial for detecting cyber attacks on IoT devices.

Types of Cyber Attacks

- Denial of service (DoS) Attack:

- Remote to Local Attack:

- Probing:

- User to Root Attack:

- Adversarial Attacks:

- Poisoning Attack:

- Evasion Attack:

- Integrity Attack:

- Malware Attack:

- Phishing Attack:

- Zero Day Attack:

- Sinkhole Attack:

- Causative Attack:

Examples of AI Algorithms for Cyber Attacks Detection

- Convolutional Neural Network (CNN)

- Autoencoder (AE)

- Deep Belief Network (DBN)

- Recurrent Neural Network (RNN)

- Generative Adversarial Network (GAN)

- Deep Reinforcement Learning (DRL)

2. Datasets

- Finding data sets in IoT Devices Cyber Attacks.

- Can any of the data sets be used in AI?

- What are the challenges with IoT device cyber attack data sets? Privacy, HIPAA, size, availability

- Datasets can be huge and GitHub has limited space. Only very small datasets should be stored in GitHub.

However, if the data is publicly available, your program must contain a download function that you customize. Write it using Python's requests library. You will get point deductions if you check in data sets that are large and do not use the download function.
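A download function of the kind requested above could look like the following sketch. It uses `urllib.request` from the standard library as a stand-in for the third-party requests package so that it runs without extra installs; the function name `download`, its parameters, and the example URL are assumptions.

```python
import shutil
import urllib.request
from pathlib import Path


def download(url, destination, overwrite=False):
    """Fetch `url` into `destination` unless the file is already present."""
    destination = Path(destination)
    if destination.exists() and not overwrite:
        return destination  # reuse the cached copy
    destination.parent.mkdir(parents=True, exist_ok=True)
    with urllib.request.urlopen(url) as response, open(destination, "wb") as out:
        shutil.copyfileobj(response, out)  # stream to disk without loading it all
    return destination

# Usage (hypothetical URL):
# download("https://example.org/iot-attacks.csv", "data/iot-attacks.csv")
```

Keeping the data out of the repository and fetching it on demand keeps the GitHub project small while still making the experiment reproducible.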

3. Using Images

- Place a cool image into projects images in my directory

- Correct the following link, replace the fa number with my su number and the chart of png.

- If the image has been copied, you must use a reference such as shown in the Figure 1 caption.

Figure 1: Images can be included in the report, but if they are copied you must cite them 1.

4. Benchmark

Your project must include a benchmark. The easiest is to use cloudmesh-common [^2]

5. Conclusion

A convincing but not fake conclusion should summarize what the conclusion of the project is.

6. Acknowledgments

- Gregor von Laszewski

- Yohn Jairo Bautista

- Carlos Theran

7. References

- Gregor von Laszewski, Cloudmesh StopWatch and Benchmark from the Cloudmesh Common Library, [GitHub] https://github.com/cloudmesh/cloudmesh-common

6 - Report: Dentronics: Classifying Dental Implant Systems by using Automated Deep Learning

Jamyla Young, su21-reu-376

Abstract

Artificial intelligence is a branch of computer science that focuses on building and programming machines to think like humans and mimic their actions. A proper definition of this term cannot be achieved simply by applying a mathematical, engineering, or logical approach, but requires an approach that is linked to deep cognitive scientific inquiry. Machine learning is constantly changing the dental and medical fields by assisting with the medical decision making process. In addition to the diagnosis of visually confirmed dental caries and impacted teeth, studies applying machine learning based on artificial neural networks to dental treatment through analysis of dental magnetic resonance imaging, computed tomography, and cephalometric radiography are actively underway, and some visible results are emerging at a rapid pace toward commercialization.

Keywords: Dental implants, Deep Learning, Prosthodontics, Implant classification, Artificial Intelligence, Neural Networks.

1. Introduction

Dental implants are ribbed oral prostheses, typically made of biocompatible titanium, that replace the missing root(s) of an absent tooth. These dental prostheses support the jaw bone to prevent deterioration due to an absent root1. This deterioration is referred to as bone resorption, which can result in facial malformation as well as reduced oral function such as biting and chewing. These devices are composed of three elements that imitate the function and structure of a natural tooth. The implant is typically ribbed and threaded to promote stability while integrating within the bone tissue. The osseointegration process usually takes 6-8 months to rebuild the bone to support the implant. An implant abutment is fixed on top of the implant to act as a base for prosthetic devices 2. Prefabricated abutments are manufactured in many shapes, sizes, and angles depending on the location of the implant and the type of prosthesis that will be attached. Dental abutments support a range of prosthetic devices such as dental crowns, bridges, and dentures 3.

Osseointegrated dental implants depend on various factors that affect the anchorage of the implant to the bone tissue. Successful surgical anchoring techniques can contribute to the long-term success of implant stability. Primary stability plays a role in the first 2 weeks postoperatively by achieving mechanical retention of the implant. It helps establish a mechanical microenvironment for gradual bone healing, or osseointegration, which is secondary implant stability. Bone type, implant length, and implant diameter influence primary and secondary implant stability. Implant length can range from 6mm to 20mm; however, the most common lengths are between 8mm and 15mm. Many studies suggest that implant length contributes to decreasing bone stress and increasing implant stability. Bone stress can occur in both the cortical and cancellous parts of the bone. Increasing implant length will decrease stress in the cancellous part of the bone, while increasing the implant diameter can decrease stress in the cortical part of the bone4. Bone type can promote positive bone stimulation around an implant, improving the overall function. There are four different types: Type I, Type II, Type III, and Type IV. Type I is the densest, which provides more cortical anchorage but has limited vascularity. Type II is the best for osseointegration because it provides good cortical anchorage and has better vascularity than Type I. Types III and IV have a thin layer of cortical bone, which decreases the success rate of primary stability5.

Implant stability can be measured using the Implant Stability Quotient (ISQ) as an indirect indicator to determine the time frame for implant loading and prognostic indicator for implant failure 4. This can be measured by resonance frequency analysis (RFA) immediately after the implant has been placed. Resonance frequency analysis is the measurement in which a device vibrates in response to frequencies in the range of 5-15 kHz. The peak amplitude of the response is then encoded into the implant stability quotient (ISQ). The clinical range of ISQ is from 55-80. High stability is >70 ISQ while medium stability is between 60-69 ISQ. Low stability is <60 ISQ6.
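The ISQ ranges given above can be written as a small lookup. The sketch below is illustrative only: the function name is hypothetical, and because the text gives high stability as >70 and medium as 60-69 without assigning the value 70 itself, the code assumes that a reading of exactly 70 counts as high.

```python
def classify_isq(isq):
    """Map an Implant Stability Quotient reading to a stability category.

    Assumption: a reading of exactly 70 is treated as high stability,
    since the stated ranges (>70 high, 60-69 medium) leave it unassigned.
    """
    if isq >= 70:
        return "high"
    if isq >= 60:
        return "medium"
    return "low"


for reading in (75, 65, 55):
    print(reading, classify_isq(reading))
```

Such a mapping could support the decision on when an implant is ready for loading, although the clinical judgment involves more than a single threshold.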

There are over 2000 types of dental implant systems (DIS) that differ in diameter, length, shape, coating, and surface material properties. These devices have a long-term survival rate of more than 90% over more than 10 years. Inevitably, biological and mechanical complications such as fractures, low implant stability, and screw loosening can occur. Therefore, identifying the correct dental implant system is essential to repair or replace the existing system. Methods and techniques that enable clear identification are insufficient 7.

Artificial intelligence is a branch of computer science that focuses on building and programming machines to think like humans and mimic their actions. A deep convolutional neural network (DCNN) is a branch of artificial intelligence that applies multiple layers of nonlinear processing units for feature extraction, transformation, and classification of high dimensional datasets. Deep convolutional neural networks are commonly used to identify patterns in images and videos. The structure typically consists of four types of layers: convolution, pooling, activation, and fully connected. These neural networks use images as input to train a classifier, which employs a mathematical operation called a convolution. Deep neural networks have been successfully applied in the dental field and have demonstrated advantages in terms of diagnosis and prognosis. Automated deep convolutional neural networks are highly efficient at classifying different dental implant systems compared to most dental professionals7.

2. Data sets

Researchers at Daejeon Dental Hospital used an automated deep convolutional neural network to evaluate its ability to classify dental implant systems and to compare its performance with that of dental professionals using radiographic images.

A total of 11,980 raw panoramic and periapical radiographic images of dental implant systems were collected. These images were then randomly divided into 2 groups: 9584 (80%) images were selected for the training dataset and the remaining 2396 (20%) images were used as the testing dataset.
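A random 80/20 split like the one described can be sketched with the standard library. The function name, the fixed seed, and the use of integer indices in place of image files are assumptions made for illustration; the researchers' actual splitting procedure is not described in more detail.

```python
import random


def split_dataset(items, train_fraction=0.8, seed=0):
    """Shuffle the items and split them into training and testing lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded for reproducibility
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Integer indices stand in for the 11,980 radiographic images.
train, test = split_dataset(range(11980))
print(len(train), len(test))  # 9584 2396
```

Shuffling before cutting ensures that both groups contain a mix of panoramic and periapical images rather than reflecting the order in which the images were collected.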

2.1 Dental implant classification

Dental implant systems were classified into six different types with a diameter of 3.3-5.0mm and a length of 7-13mm.

- Astra OsseoSpeed TX (Dentsply IH AB, Molndal, Sweden), with a diameter of 4.5–5.0 mm and a length of 9–13 mm;

- Implantium (Dentium, Seoul, Korea), with a diameter of 3.6–5.0 mm and a length of 8–12 mm;

- Superline (Dentium, Seoul, Korea), with a diameter of 3.6–5.0 mm and a length of 8–12 mm;

- TSIII (Osstem, Seoul, Korea), with a diameter of 3.5–5.0 mm and a length of 7–13 mm;

- SLActive BL (Institut Straumann AG, Basel, Switzerland), with a diameter of 3.3–4.8 mm and a length of 8–12 mm;

- SLActive BLT (Institut Straumann AG, Basel, Switzerland), with a diameter of 3.3–4.8 mm and a length of 8–12 mm.

2.2 Deep Convolutional Neural Network

Neuro-T was used to automatically select the model and optimize the hyperparameters. During training and inference, the automated DCNN automatically creates effective deep learning models and searches for the optimal hyperparameters. An Adam optimizer with L2 regularization was used for transfer learning. The batch size was set to 432, and the automated DCNN architecture consisted of 18 layers with no dropout.

Figure 1: Overview of an automated deep convolutional neural network 7.

3. Results

For the evaluation, the following statistical parameters were taken into account: receiver operating characteristic (ROC) curve, area under the ROC curve (AUC), 95% confidence intervals (CIs), standard error (SE), Youden index (sensitivity + specificity − 1), sensitivity, and specificity, which were calculated using Neuro-T and R statistical software. DeLong’s method was used to compare the AUCs generated from the test dataset, and the significance level was set at p < 0.05.
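The following sketch shows how sensitivity, specificity, and AUC can be computed for a binary classifier. The labels and scores are invented toy data, not values from the study, and the study's six-class setting would need these metrics computed per class. The AUC is computed here as the fraction of positive/negative pairs in which the positive example receives the higher score, which is one standard way to define it.

```python
def sensitivity_specificity(labels, predictions):
    """Return (sensitivity, specificity) for binary labels and predictions."""
    pairs = list(zip(labels, predictions))
    tp = sum(1 for y, p in pairs if y == 1 and p == 1)
    fn = sum(1 for y, p in pairs if y == 1 and p == 0)
    tn = sum(1 for y, p in pairs if y == 0 and p == 0)
    fp = sum(1 for y, p in pairs if y == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)


def auc(labels, scores):
    """AUC as the share of (positive, negative) pairs ranked correctly; ties count 0.5."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))


# Toy data (assumption): 1 = target implant type, 0 = any other type.
labels = [1, 1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.6, 0.4, 0.7, 0.3, 0.2, 0.1]
predictions = [1 if s >= 0.5 else 0 for s in scores]  # threshold is an assumption

sens, spec = sensitivity_specificity(labels, predictions)
print(sens, spec, auc(labels, scores))  # 0.75 0.75 0.875
```

Sensitivity and specificity depend on the chosen threshold, while the AUC summarizes performance across all thresholds.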

The AUC, Youden index, sensitivity, and specificity of the automated DCNN for the 2,396 panoramic and periapical radiographic images were 0.954 (95% CI = 0.933–0.970, SE = 0.011), 0.808, 0.955, and 0.853, respectively. Using only panoramic radiographic images (n = 1429), the automated DCNN achieved an AUC of 0.929 (95% CI = 0.904–0.949, SE = 0.018, Youden index = 0.804, sensitivity = 0.922, and specificity = 0.882), while using only periapical radiographic images (n = 967) it achieved an AUC of 0.961 (95% CI = 0.941–0.976, SE = 0.009, Youden index = 0.802, sensitivity = 0.955, and specificity = 0.846). There were no significant differences in accuracy among the three ROC curves.
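As a quick consistency check, the Youden index can be recomputed from the reported sensitivity and specificity using the definition given earlier (sensitivity + specificity − 1). The function and dictionary names below are ours; the numbers are the ones reported above.

```python
def youden_index(sensitivity, specificity):
    """Youden index J = sensitivity + specificity - 1."""
    return sensitivity + specificity - 1


# (sensitivity, specificity, reported Youden index) as given in the text.
reported = {
    "all images": (0.955, 0.853, 0.808),
    "panoramic": (0.922, 0.882, 0.804),
    "periapical": (0.955, 0.846, 0.802),
}

for name, (sens, spec, published) in reported.items():
    computed = youden_index(sens, spec)
    print(f"{name}: computed {computed:.3f}, reported {published:.3f}")
```

The first two values reproduce exactly (0.808 and 0.804). For the periapical images the computed value is 0.801 against a reported 0.802, a difference that is presumably due to the published sensitivity and specificity being rounded.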

Figure 2: The accuracy of the automated DCNN for the test dataset did not show a significant difference among the three ROC curves based on DeLong’s method 7.

The Straumann SLActive BLT implant system has a relatively large tapered shape compared to other types of dental implant systems (DISs). Thus, the automated DCNN classified it correctly with an AUC of 0.981 (95% CI = 0.949–0.996). However, for the Dentium Superline and Osstem TSIII implant systems, which do not have conspicuous characteristic elements with a tapered shape, the automated DCNN classified correctly with an AUC of 0.903 (95% CI = 0.850–0.967) and 0.937 (95% CI = 0.890–0.967), respectively.

Figure 3 (a-f): Performance of the automated DCNN and comparison with dental professionals for classification of six types of DIS 7

4. Conclusion

Nonetheless, this study has certain limitations. Although six types of DISs were selected from three different dental hospitals and categorized as a dataset, the training dataset was still insufficient for clinical practice. Therefore, it is necessary to build a high-quality and large-scale dataset containing different types of DISs. If time and cost are not limited, the automated DCNN can be continuously trained and optimized for improved accuracy. Additionally, the automated DCNN regulates the entire process, including appropriate model selection and optimized hyper-parameter adjustment. The automated DCNN can help clinical dental practitioners to classify various types of DISs based on dental radiographic images. Nevertheless, further studies are necessary to determine the efficacy and feasibility of applying the automated DCNN in clinical practice.

5. Acknowledgments

- Carlos Theran, REU Instructor

- Yohn Jairo Parra, REU Instructor

- Gregor von Laszewski, REU Instructor

- Victor Adankai, Graduate Student

- Jacques Fleischer, REU peer

- Florida Agricultural and Mechanical University

6. References

[^3] Gregor von Laszewski, Cloudmesh StopWatch and Benchmark from the Cloudmesh Common Library, [GitHub] https://github.com/cloudmesh/cloudmesh-common

1. Karras, Spiro. A look at the structure of dental implants. (2020, September 2). https://www.drkarras.com/a-look-at-the-structure-of-dental-implants/

2. Ghidrai, G. (n.d.). Dental implant abutment. Stomatologia pe intelesul tuturor. https://www.infodentis.com/dental-implants/abutment.php

3. Bataineh, A. B., & Al-Dakes, A. M. (2017, January 1). The influence of length of implant on primary stability: An in vitro study using resonance frequency analysis. Journal of Clinical and Experimental Dentistry. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5268121/

4. Huang, H., Wu, G., & Hunziker, E. (2020). The clinical significance of implant stability quotient (ISQ) measurements: A literature review. Journal of Oral Biology and Craniofacial Research. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC7494467/

5. Li, J., Yin, X., Huang, L., Mouraret, S., Brunski, J. B., Cordova, L., Salmon, B., & Helms, J. A. (2017, July). Relationships among bone quality, implant osseointegration, and Wnt signaling. Journal of Dental Research. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5480808/

6. Möhlhenrich, S. C., Heussen, N., Modabber, A., Bock, A., Hölzle, F., Wilmes, B., Danesh, G., & Szalma, J. (2020, July 24). Influence of bone density, screw size and surgical procedure on orthodontic mini-implant placement – part b: Implant stability. International Journal of Oral and Maxillofacial Surgery. https://www.sciencedirect.com/science/article/abs/pii/S0901502720302496

7. Lee JH, Kim YT, Lee JB, Jeong SN. A Performance Comparison between Automated Deep Learning and Dental Professionals in Classification of Dental Implant Systems from Dental Imaging: A Multi-Center Study. Diagnostics (Basel). 2020 Nov 7;10(11):910. doi: 10.3390/diagnostics10110910. PMID: 33171758; PMCID: PMC7694989.

7 -

Fix the links: and then remove this line

Gregor von Laszewski, hid-example

Abstract

Here comes a short abstract of the project that summarizes what it is about

Keywords: tensorflow, example.

1. Introduction

Do not include this tip in your document:

Tip: Please note that an up to date version of these instructions is available at

Here comes a convincing introduction to the problem

2. Report Format

The report is written in (hugo) markdown and not commonmark. As such some features are not visible in GitHub. You can set up hugo on your local computer if you want to see how it renders or commit and wait 10 minutes once your report is bound into cybertraining.

To set up the report, you must first replace the word hid-example in this example report with your hid. The hid will look something like `sp21-599-111`.

It is to be noted that markdown works best if you include an empty line before and after each context change. Thus the following is wrong:

# This is My Headline
This author does ignore proper markdown while not using empty lines between context changes
1. This is because this author ignores all best practices

Instead, this should be

# This is My Headline

We do not ignore proper markdown while using empty lines between context changes

1. This is because we encourage best practices to avoid issues.

2.1. GitHub Actions

When going to GitHub Actions you will see that a report is automatically generated with some help on improving your markdown. We will not review any document that does not pass this check.

2.2. Copy and Paste from Word or other Editors is a Disaster!

We recommend that you use a proper editor that is integrated with GitHub, or that you use the command line tools. We may include comments in your document that you will have to fix. If you just copy and paste, you will

- Not learn how to use GitHub properly, and we deduct points

- Overwrite our comments, which you may then miss; this may result in point deductions as you have not addressed them.

2.3. Report or Project

You have two choices for the final project.

- Project, that is a final report that includes code.

- Report, that is a final project without code.

You will be including the type of the project as a prefix to your title, as well as in the Type tag at the beginning of your project.

3. Using Images

Figure 1: Images can be included in the report, but if they are copied you must cite them 1.

4. Using itemized lists only where needed

Remember this is not a powerpoint presentation, but a report so we recommend

- Use itemized or enumeration lists sparingly

- When using bulleted lists use * and not -

5. Datasets

Datasets can be huge and GitHub has limited space. Only very small datasets should be stored in GitHub.

However, if the data is publicly available, your program must instead contain a download function that you customize. Write it using Python's requests library. You will get point deductions if you check in data sets that are large and do not use the download function.
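A download function along these lines could look like the sketch below. It is only a starting point: the function name, the chunk size, the timeout, and the example URL in the comment are assumptions, and you need to adapt it to your own dataset. It relies on the third-party requests package, which has to be installed separately.

```python
from pathlib import Path

import requests


def download(url, destination, chunk_size=8192):
    """Download url to destination unless the file already exists; return the path."""
    destination = Path(destination)
    if destination.exists():
        return destination  # reuse the local copy instead of downloading again
    destination.parent.mkdir(parents=True, exist_ok=True)
    # Stream the response so large files are not held in memory at once.
    with requests.get(url, stream=True, timeout=30) as response:
        response.raise_for_status()  # fail loudly on HTTP errors
        with open(destination, "wb") as f:
            for chunk in response.iter_content(chunk_size=chunk_size):
                f.write(chunk)
    return destination


# Hypothetical usage (replace the URL and path with your own):
# download("https://example.org/data/fish.zip", "data/fish.zip")
```

Because the function skips files that already exist, it can be called at the top of a notebook or script on every run, and the data directory can stay out of the GitHub repository.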

6. Benchmark

Your project must include a benchmark. The easiest way is to use cloudmesh-common 2.

7. Conclusion

A convincing but not fake conclusion should summarize what the conclusion of the project is.

8. Acknowledgments

Please add acknowledgments to all that contributed or helped on this project.

9. References

Your report must include at least 6 references. Please use customary academic citation and not just URLs. As we will at one point automatically change the references from superscript to square brackets it is best to introduce a space before the first square bracket.

1. Use of energy explained - Energy use in homes, [Online resource] https://www.eia.gov/energyexplained/use-of-energy/electricity-use-in-homes.php

2. Gregor von Laszewski, Cloudmesh StopWatch and Benchmark from the Cloudmesh Common Library, [GitHub] https://github.com/cloudmesh/cloudmesh-common

8 - Report: Aquatic Animals Classification Using AI

Timia Williams, su21-reu-370

Abstract

Marine animals play an important role in the ecosystem. “Aquatic animals play an important role in nutrient cycles because they store a large proportion of ecosystem nutrients in their tissues, transport nutrients farther than other aquatic animals and excrete nutrients in dissolved forms that are readily available to primary producers” (Vanni MJ 1). Fish images are captured by scuba divers, tourists, or underwater submarines. Images of fish from different angles can be very difficult to get because the fish move constantly. In addition, images of marine animals are usually of low quality because of the water, and the underwater cameras required for good-quality images can be expensive. Using AI for classification could potentially help increase the marine population. We test the use of machine learning on images obtained from the aquarium. We collected 164 fish images from the Georgia Aquarium to look at the different movements.

Keywords: tensorflow, example.

1. Introduction

It can be challenging to obtain a large number of different complex species in a single aquatic environment. Traditionally, it would take marine biologists years to collect the data and successfully classify the type of species obtained [1]. Scientists say that more than 90 percent of the ocean’s species are still undiscovered, with some estimating that there are anywhere between a few hundred thousand and a few million more to be discovered (National Geographic Society). Currently, scientists know of around 226,000 ocean species. Nowadays, artificial intelligence and machine learning are used for detection and classification in images. In this project, we propose to use machine learning techniques to analyze the images obtained from the Georgia Aquarium to identify legal and illegal fishing.

2. Machine learning in fish species.

Aquatic ecologists often count animals to track population sizes, which provides information critical for conservation and management. Since the creation of underwater cameras and other recording equipment, underwater devices have allowed scientists to safely and efficiently classify fish images without the disadvantages of manually entering data, ultimately saving time, labor, and money. The use of machine learning to automate image processing has its benefits but has rarely been adopted in aquatic studies. With deep learning methods, the classification of specific species could potentially improve. In fact, a study done in Australia’s ocean waters found that classification of fish through deep learning was more efficient than manual human classification. In the study to test the abundance of different species, “The computer’s performance in determining abundance was 7.1% better than human marine experts and 13.4% better than citizen scientists in single image test datasets, and 1.5 and 7.8% higher in video datasets, respectively” (Campbell, M. D.). This suggests that using machine learning on marine animals is a better method than manually classifying aquatic animals. Beyond classification, it can be used to answer broader questions such as population count, the location of species, their abundance, and how well they appear to be thriving. Since machine learning and deep learning are often treated as one, both learning methods will be used to analyze the images and find patterns in my data.

3. Datasets

We used two datasets in this project. The first dataset includes the pictures that I took at the Georgia Aquarium. That dataset was used for testing. The second dataset is a fish dataset from Kaggle which contains 9 different seafood types (Black Sea Sprat, Gilt-Head Bream, Hourse Mackerel, Red Mullet, Red Sea Bream, Sea Bass, Shrimp, Striped Red Mullet, Trout). For each type, there are 1000 augmented images and their pair-wise augmented ground truths.

The link to access the Kaggle dataset is https://www.kaggle.com/crowww/a-large-scale-fish-dataset

3.1. Sample of Images of Personal Dataset

Left to right: Banded Archerfish, Lionfish, and Red Piranha

Figure 1: These images are samples of my personal data, which is made up of images of fish taken at the Georgia Aquarium.

3.2. Sample of Images from Large Scale Fish Dataset

4. Conclusion

Deep learning methods provide a faster, cheaper, and more accurate alternative to the manual data analysis methods currently used to monitor and assess animal abundance, and have much to offer the field of aquatic ecology. We were able to create a model to show that we can use AI to efficiently detect and classify marine animals.

5. Acknowledgments

Special thanks to these people that helped me with this paper: Gregor von Laszewski, Yohn Jairo, Carlos Theran, Jacques Fleischer, and Victor Adankai.

6. References

9 - Project: Hand Tracking with AI

David Umanzor, su21-reu-364

Abstract

In this project, we study the ability of an AI to recognize letters from the American Sign Language (ASL) alphabet. We use a Convolutional Neural Network (CNN) and apply it to a dataset of hands in different positions showing the letters ‘a’, ‘b’, and ‘c’ in ASL. The proposed CNN model receives an ASL image, recognizes the features of the image, and generates the predicted letter.

Contents