This the multi-page printable view of this section. Click here to print.

Content

- 1: Courses

- 1.1: 2021 REU Course

- 1.2: AI-First Engineering Cybertraining

- 1.2.1: Project Guidelines

- 1.3: Big Data 2020

- 1.4: REU 2020

- 1.5: Big Data 2019

- 1.6: Cloud Computing

- 1.7: Data Science to Help Society

- 1.8: Intelligent Systems

- 1.9: Linux

- 1.10: Markdown

- 1.11: OpenStack

- 1.12: Python

- 1.13: MNIST Classification on Google Colab

- 2: Books

- 2.1: Python

- 2.1.1: Introduction to Python

- 2.1.2: Python Installation

- 2.1.3: Interactive Python

- 2.1.4: Editors

- 2.1.5: Google Colab

- 2.1.6: Language

- 2.1.7: Cloudmesh

- 2.1.7.1: Introduction

- 2.1.7.2: Installation

- 2.1.7.3: Output

- 2.1.7.4: Dictionaries

- 2.1.7.5: Shell

- 2.1.7.6: StopWatch

- 2.1.7.7: Cloudmesh Command Shell

- 2.1.7.8: Exercises

- 2.1.8: Data

- 2.1.8.1: Data Formats

- 2.1.9: Mongo

- 2.1.9.1: MongoDB in Python

- 2.1.9.2: Mongoengine

- 2.1.10: Other

- 2.1.10.1: Word Count with Parallel Python

- 2.1.10.2: NumPy

- 2.1.10.3: Scipy

- 2.1.10.4: Scikit-learn

- 2.1.10.5: Dask - Random Forest Feature Detection

- 2.1.10.6: Parallel Computing in Python

- 2.1.10.7: Dask

- 2.1.11: Applications

- 2.1.11.1: Fingerprint Matching

- 2.1.11.2: NIST Pedestrian and Face Detection :o2:

- 2.1.12: Libraries

- 2.1.12.1: Python Modules

- 2.1.12.2: Data Management

- 2.1.12.3: Plotting with matplotlib

- 2.1.12.4: DocOpts

- 2.1.12.5: OpenCV

- 2.1.12.6: Secchi Disk

- 3: Modules

- 3.1: Contributors

- 3.2: List

- 3.3: Autogenerating Analytics Rest Services

- 3.4: DevOps

- 3.4.1: DevOps - Continuous Improvement

- 3.4.2: Infrastructure as Code (IaC)

- 3.4.3: Ansible

- 3.4.4: Puppet

- 3.4.5: Travis

- 3.4.6: DevOps with AWS

- 3.4.7: DevOps with Azure Monitor

- 3.5: Google Colab

- 3.6: Modules from SDSC

- 3.7: AI-First Engeneering Cybertraining Spring 2021 - Module

- 3.7.1: 2021

- 3.7.1.1: Introduction to AI-Driven Digital Transformation

- 3.7.1.2: AI-First Engineering Cybertraining Spring 2021

- 3.7.1.3: Introduction to AI in Health and Medicine

- 3.7.1.4: Mobility (Industry)

- 3.7.1.5: Space and Energy

- 3.7.1.6: AI In Banking

- 3.7.1.7: Cloud Computing

- 3.7.1.8: Transportation Systems

- 3.7.1.9: Commerce

- 3.7.1.10: Python Warm Up

- 3.7.1.11: Distributed Training for MNIST

- 3.7.1.12: MLP + LSTM with MNIST on Google Colab

- 3.7.1.13: MNIST Classification on Google Colab

- 3.7.1.14: MNIST With PyTorch

- 3.7.1.15: MNIST-AutoEncoder Classification on Google Colab

- 3.7.1.16: MNIST-CNN Classification on Google Colab

- 3.7.1.17: MNIST-LSTM Classification on Google Colab

- 3.7.1.18: MNIST-MLP Classification on Google Colab

- 3.7.1.19: MNIST-RMM Classification on Google Colab

- 3.8: Big Data Applications

- 3.8.1: 2020

- 3.8.1.1: Introduction to AI-Driven Digital Transformation

- 3.8.1.2: BDAA Fall 2020 Course Lectures and Organization

- 3.8.1.3: Big Data Use Cases Survey

- 3.8.1.4: Physics

- 3.8.1.5: Introduction to AI in Health and Medicine

- 3.8.1.6: Mobility (Industry)

- 3.8.1.7: Sports

- 3.8.1.8: Space and Energy

- 3.8.1.9: AI In Banking

- 3.8.1.10: Cloud Computing

- 3.8.1.11: Transportation Systems

- 3.8.1.12: Commerce

- 3.8.1.13: Python Warm Up

- 3.8.1.14: MNIST Classification on Google Colab

- 3.8.2: 2019

- 3.8.2.1: Introduction

- 3.8.2.2: Introduction (Fall 2018)

- 3.8.2.3: Motivation

- 3.8.2.4: Motivation (cont.)

- 3.8.2.5: Cloud

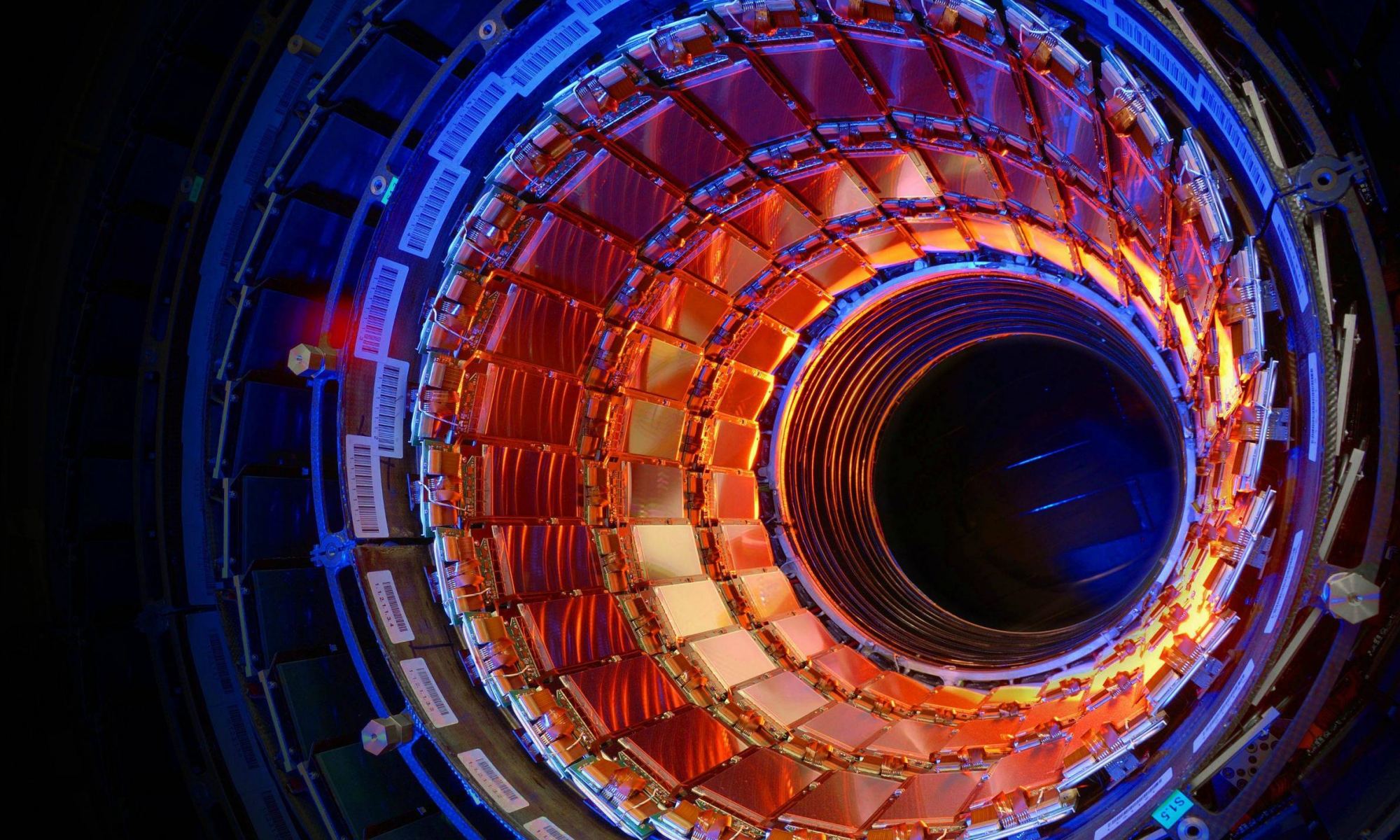

- 3.8.2.6: Physics

- 3.8.2.7: Deep Learning

- 3.8.2.8: Sports

- 3.8.2.9: Deep Learning (Cont. I)

- 3.8.2.10: Deep Learning (Cont. II)

- 3.8.2.11: Introduction to Deep Learning (III)

- 3.8.2.12: Cloud Computing

- 3.8.2.13: Introduction to Cloud Computing

- 3.8.2.14: Assignments

- 3.8.2.14.1: Assignment 1

- 3.8.2.14.2: Assignment 2

- 3.8.2.14.3: Assignment 3

- 3.8.2.14.4: Assignment 4

- 3.8.2.14.5: Assignment 5

- 3.8.2.14.6: Assignment 6

- 3.8.2.14.7: Assignment 7

- 3.8.2.14.8: Assignment 8

- 3.8.2.15: Applications

- 3.8.2.15.1: Big Data Use Cases Survey

- 3.8.2.15.2: Cloud Computing

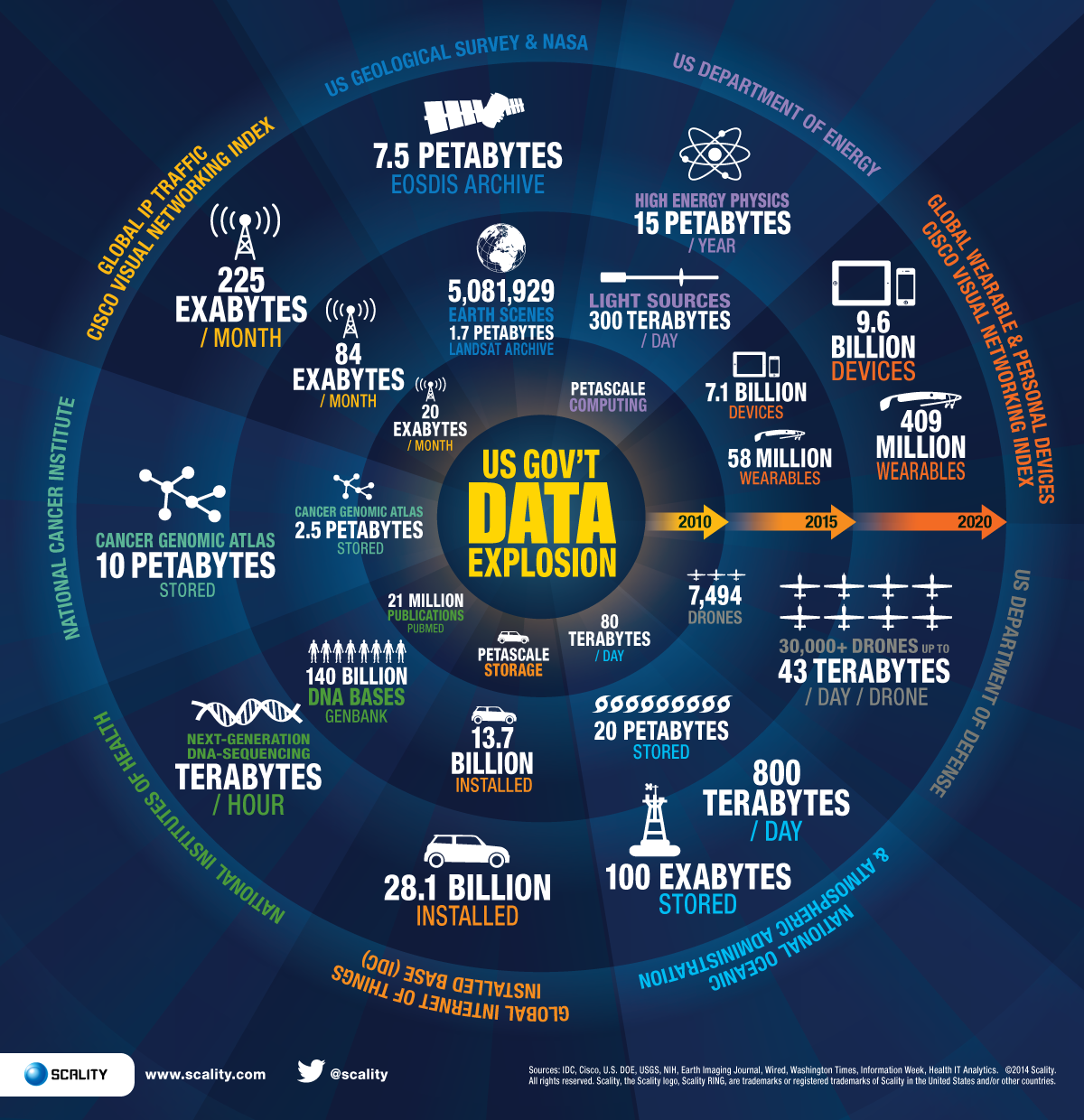

- 3.8.2.15.3: e-Commerce and LifeStyle

- 3.8.2.15.4: Health Informatics

- 3.8.2.15.5: Overview of Data Science

- 3.8.2.15.6: Physics

- 3.8.2.15.7: Plotviz

- 3.8.2.15.8: Practical K-Means, Map Reduce, and Page Rank for Big Data Applications and Analytics

- 3.8.2.15.9: Radar

- 3.8.2.15.10: Sensors

- 3.8.2.15.11: Sports

- 3.8.2.15.12: Statistics

- 3.8.2.15.13: Web Search and Text Mining

- 3.8.2.15.14: WebPlotViz

- 3.8.2.16: Technologies

- 3.9: MNIST Example

- 3.10: Sample

- 3.10.1: Module Sample

- 3.10.2: Alert Sample

- 3.10.3: Element Sample

- 3.10.4: Figure Sample

- 3.10.5: Mermaid Sample

- 4: Reports

- 4.1: Reports

- 4.2: Reports 2021

- 4.3: 2021 REU Reports

- 4.4: Project FAQ

- 5: Tutorials

- 5.1: cms

- 5.2: Git

- 5.2.1: Git pull requst

- 5.2.2: Adding a SSH Key for GitHub Repository

- 5.2.3: GitHub gh Command Line Interface

- 5.2.4: GitHub hid Repository

- 5.3: REU Tutorials

- 5.3.1: Installing PyCharm Professional for Free

- 5.3.2: Installing Git Bash on Windows 10

- 5.3.3: Using Raw Images in GitHub and Hugo in Compatible Fashion

- 5.3.4: Using Raw Images in GitHub and Hugo in Compatible Fashion

- 5.3.5: Uploading Files to Google Colab

- 5.3.6: Adding SSH Keys for a GitHub Repository

- 5.3.7: Installing Python

- 5.3.8: Installing Visual Studio Code

- 5.4: Tutorial on Using venv in PyCharm

- 5.5: 10 minus 4 Monitoring Tools for your Nvidia GPUs on Ubuntu 20.04 LTS

- 5.6: Example test

- 5.7:

- 6: Contributing

- 6.1: Overview

- 6.2: Getting Started

- 6.2.1: Example Markdown

- 6.3: Contribution Guidelines

- 6.4: Contributors

- 7: Publications

1 - Courses

With the help of modules, one can assemble their own courses. Courses can be designed individually or for a class with multiple students.

One of the main tools to export such courses is bookmanager which you can find out about at

https://pypi.org/project/cyberaide-bookmanager/

https://github.com/cyberaide/bookmanager

1.1 - 2021 REU Course

This course introduces the REU students to various topics in Intelligent Systems Engineering. The course was taught in Summer 2021.

Rstudio with Git and GitHub Slides

|

Rstudio with Git and GitHub Slides |

Programming with Python

Python is a great languge for doing data science and AI, a comprehensive list of features is available in book form. Please note that when installing Python, you always want to use a venv as this is best practice.

|

Introduction to Python (ePub) (PDF) |

Installation of Python

|

Installation of Python — June 7th, 2021 (AM) |

Update to the Video:

Best practices in Python recommend to use a Python venv. This is pretty easy to do and creates a separate Python environment for you so you do not interfere with your system Python installation. Some IDEs may do this automatically, but it is still best practice to install one and bind the IDE against it. To do this:

-

Download Python version 3.9.5 just as shown in the first lecture.

-

After the download you do an additional step as follows:

-

on Mac:

python3.9 -m venv ~/ENV3 source ~/ENV/bin/activateyou need to do the source every time you start a new window or on mac ass it to .zprofile

-

-

on Windows you first install gitbash and do all yuour terminal work from gitbash as this is more Linux-like. In gitbash, run

python -m venv ~/ENV3 ~/ENV/Script/activateIn case you like to add it to gitbash, you can add the source line to .bashrc and/or .bash_profile

-

In case you use VSCode, you can also do it individually in a directory where you have your code.

- On Mac:

cd TO YOUR DIR; python3.9 -m venv . - On Windows

cd TO YOUR DIR; python -m venv .

Then start VSCode in the directory and it will ask you to use this venv. However, the global ENV3 venv may be better and you cen set your interpreter to it.

- On Mac:

-

On Pycharm we recommend you use the ENV3 and set the clobal interpreter

Jupyter Notebooks

|

Jupyter Notebooks — June 7th, 2021 (PM): This lecture provides an introduction to Jupyter Notebooks using Visual Studio as IDE. |

Github

|

Video: Github |

|

Video-Github 2 — June 8th, 2021 (PM): In this lecture the student can learn how to create a project on RStudio and link it with a repository on GitHub to commit, pull and push the code from RStudio. |

Introduction to Python

|

Slides: This introduction to Python cover the different data type, how to convert type of variable, understand and create flow control usign conditional statements. |

|

Slides — June 10th, 2021 (PM): String, Numbers, Booleans Flow of control Using If statements |

|

Slides: String, Numbers, Booleans Flow of control Using If statements (2) |

|

Python Exercises - Lab 2 |

The first exercise will require a simple for loop, while the second is more complicated, requiring nested for loops and a break statement.

General Instructions: Create two different files with extension .ipnyb, one for each problem. The first file will be named factorial.ipnyb which is for the factorial problem, and the second prime_number.ipnyb for the prime number problem.

-

Write a program that can find the factorial of any given number. For example, find the factorial of the number 5 (often written as 5!) which is 12345 and equals 120. Your program should take as input an integer from the user.

Note: The factorial is not defined for negative numbers and the factorial of Zero is 1; that is 0! = 1.

You should

- If the number is less than Zero return with an error message.

- Check to see if the number is Zero—if it is then the answer is 1—print this out.

- Otherwise use a loop to generate the result and print it out.

-

A Prime Number is a positive whole number, greater than 1, that has no other divisors except the number 1 and the number itself. That is, it can only be divided by itself and the number 1, for example the numbers 2, 3, 5 and 7 are prime numbers as they cannot be divided by any other whole number. However, the numbers 4 and 6 are not because they can both be divided by the number 2 in addition the number 6 can also be divided by the number 3.

You should write a program to calculate prime number starting from 1 up to the value input by the user.

You should

- If the user inputs a number below 2, print an error message.

- For any number greater than 2 loop for each integer from 2 to that number and determine if it can be divided by another number (you will probably need two for loops for this; one nested inside the other).

- For each number that cannot be divided by any other number (that is its a prime number) print it out.

Motivation for the REU

|

Video — June 11th, 2021 (AM): Motivation for the REU: Data is Driven Everything |

|

Slides: Motivation for the REU: Data is Driven Everything |

|

Slides: Descriptive Statistic |

|

Slides: Probability |

|

Video — June 28th, 2021 (AM): Working on GitHUb Template and Mendeley references management |

Data Science Tools

|

Slides: Data Science Tools |

|

Video — June 14th, 2021 (AM): Numpy |

|

Video — June 14th, 2021 (PM): Pandas data frame |

|

Video — June 15th, 2021 (AM): Web data mining |

|

Video — June 15th, 2021 (PM): Pandas IO |

|

Video — June 16th, 2021 (AM): Pandas |

|

Video: Pycharm Installation and Virtual Environment setup — June 18th, 2021 (AM) |

|

Video: This lecture the student can learn the different applications of Matrix Operation using images on Python. — June 21st, 2021 (AM) |

|

Video: Data wrangling and Descriptive Statistic Using Python — June 21st, 2021 (AM) |

|

Video: Data wrangling and Descriptive Statistic Using Python — June 22nd, 2021 (PM) |

|

Video: FURY Visualization and Microsoft Lecture — June 25th, 2021 (PM) |

|

Video: Instroduction to Probability — June 25th, 2021 (PM) |

|

Video: Digital Twins and Virtual Tissue ussing CompuCell3D Simulating Cancer Somatic Evolution in nanoHUB — July 2nd, 2021 (AM) |

AI First Engineering

|

Video: AI First Engineering: Learning material — June 25th, 2021 (AM) |

|

Video: Adding content to your su21-reu repositories — June 17th, 2021 (PM) |

|

Slides: AI First Engineering |

Datasets for Projects

|

Video: Datasets for Projects: Data world and Kaggle — June 29th, 2021 (AM) |

|

Video: Datasets for Projects: Data world and Kaggle part 2 — June 29th, 2021 (PM) |

Machine Learning Models

|

Video: K-Means: Unsupervised model — June 30th, 2021 (AM) |

|

Video: Support Vector Machine: Supervised model — July 2nd, 2021 (PM) |

|

Slides: Support Vector Machine Supervised model. |

|

Video: Neural Networks: Deep Learning Supervised model — July 6th, 2021 (AM) |

|

Video: Neural Networks: Deep learning Model — July 6th, 2021 (AM) |

|

Video: Data Visualization: Visualizaton for Data Science — July 7th, 2021 (AM) |

|

Video: Convulotional Neural Networks: Deep learning Model — July 8th, 2021 (AM) |

Students Report Help

|

Video: Student Report Help with Introduction and Datasets — July 7th, 2021 (AM) |

|

Video: Student Report Help with Introduction and Datasets — July 13th, 2021 (AM) |

COVID-19

|

Video: Chemo-Preventive Effect of Vegetables and Fruits Consumption on the COVID-19 Pandemic — July 1st, 2021 (AM) |

- Yedjou CG, Alo RA, Liu J, et al. Chemo-Preventive Effect of Vegetables and Fruits Consumption on the COVID-19 Pandemic. J Nutr Food Sci. 2021;4(2):029

- Geoffrey C. Fox, Gregor von Laszewski, Fugang Wang, Saumyadipta Pyne, AICov: An Integrative Deep Learning Framework for COVID-19 Forecasting with Population Covariates, J. data sci. 19(2021), no. 2, 293-313, DOI 10.6339/21-JDS1007

1.2 - AI-First Engineering Cybertraining

This course introduces the students to AI-First Engineering Cybertraining we provide the following sections

Class Material

As part of this class, we will be using a variety of sources. To simplify the presentation we provide them in a variety of smaller packaged material including books, lecture notes, slides, presentations and code.

Note: We will regularly update the course material, so please always download the newest version. Some browsers try to be fancy and cache previous page visits. So please make sure to refresh the page.

We will use the following material:

Course Lectures and Management

|

Course Lectures. These meeting notes are updated weekly (Web) |

Overview

This course is built around the revolution driven by AI and in particular deep learning that is transforming all activities: industry, research, and lifestyle. It will a similar structure to The Big Data Class and the details of the course will be adapted to the interests of participating students. It can include significant deep learning programming.

All activities – Industry, Research, and Lifestyle – are being transformed by Artificial Intelligence AI and Big Data. AI is currently dominated by deep learning implemented on a global pervasive computing environment - the global AI supercomputer. This course studies the technologies and applications of this transformation.

We review Core Technologies driving these transformations: Digital transformation moving to AI Transformation, Big Data, Cloud Computing, software and data engineering, Edge Computing and Internet of Things, The Network and Telecommunications, Apache Big Data Stack, Logistics and company infrastructure, Augmented and Virtual reality, Deep Learning.

There are new “Industries” over the last 25 years: The Internet, Remote collaboration and Social Media, Search, Cybersecurity, Smart homes and cities, Robotics. However, our focus is Traditional “Industries” Transformed: Computing, Transportation: ride-hailing, drones, electric self-driving autos/trucks, road management, travel, construction Industry, Space, Retail stores and e-commerce, Manufacturing: smart machines, digital twins, Agriculture and Food, Hospitality and Living spaces: buying homes, hotels, “room hailing”, Banking and Financial Technology: Insurance, mortgage, payments, stock market, bitcoin, Health: from DL for pathology to personalized genomics to remote surgery, Surveillance and Monitoring: – Civilian Disaster response; Miltary Command and Control, Energy: Solar wind oil, Science; more data better analyzed; DL as the new applied mathematics, Sports: including Sabermetrics, Entertainment, Gaming including eSports, News, advertising, information creation and dissemination, education, fake news and Politics, Jobs.

We select material from above to match student interests.

Students can take the course in either software-based or report-based mode. The lectures with be offered in video form with a weekly discussion class. Python and Tensorflow will be main software used.

Lectures on Particular Topics

Introduction to AI-Driven Digital Transformation

|

Introduction to AI-Driven Digital Transformation (Web) |

Introduction to Google Colab

|

A Gentle Introduction to Google Colab (Web) |

|

A Gentle Introduction to Python on Google Colab (Web) |

|

MNIST Classification on Google Colab (Web) |

|

MNIST-MLP Classification on Google Colab (Web) |

|

MNIST-RNN Classification on Google Colab (Web) |

|

MNIST-LSTM Classification on Google Colab (Web) |

|

MNIST-Autoencoder Classification on Google Colab (Web) |

|

MNIST with MLP+LSTM Classification on Google Colab (Web) |

|

Distributed Training with MNIST Classification on Google Colab (Web) |

|

PyTorch with MNIST Classification on Google Colab (Web) |

Material

Health and Medicine

AI in Banking

Space and Energy

Mobility (Industry)

Cloud Computing

Commerce

Complementary Material

- When working with books, ePubs typically display better than PDF. For ePub, we recommend using iBooks on macOS and calibre on all other systems.

Piazza

|

Piazza. The link for all those that participate in the IU class to its class Piazza. |

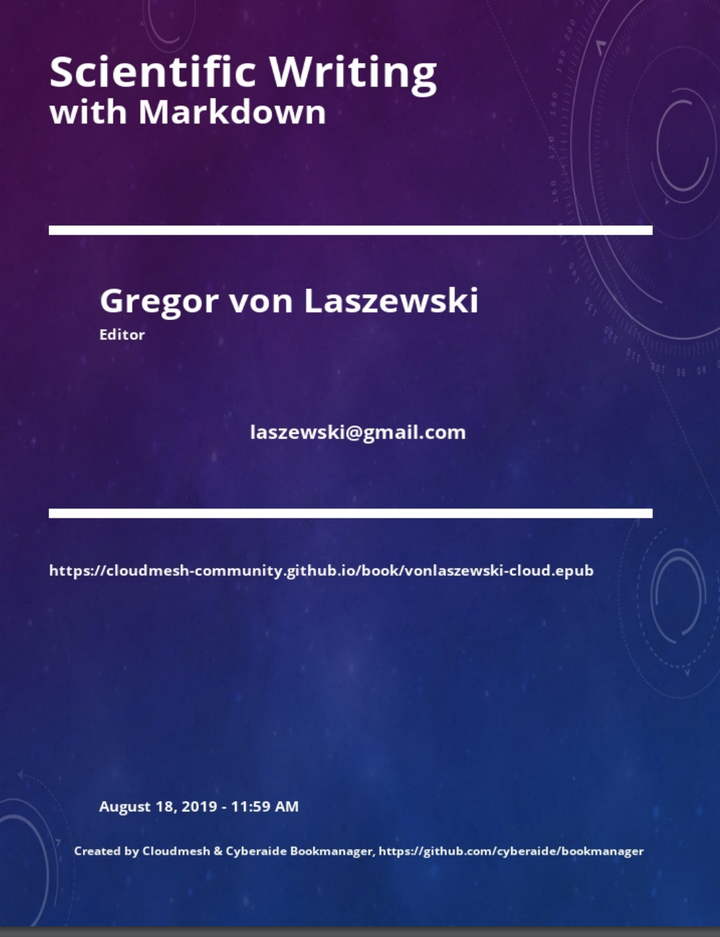

Scientific Writing with Markdown

|

Scientific Writing with Markdown (ePub) (PDF) |

Git Pull Request

|

Git Pull Request. Here you will learn how to do a simple git pull request either via the GitHub GUI or the git command line tools |

Introduction to Linux

This course does not require you to do much Linux. However, if you do need it, we recommend the following as starting point listed

The most elementary Linux features can be learned in 12 hours. This includes bash, editor, directory structure, managing files. Under Windows, we recommend using gitbash, a terminal with all the commands built-in that you would need for elementary work.

|

Introduction to Linux (ePub) (PDF) |

Older Course Material

Older versions of the material are available at

|

Lecture Notes 2020 (ePub) (PDF) |

|

Big Data Applications (Nov. 2019) (ePub) (PDF) |

|

Big Data Applications (2018) (ePub) (PDF) |

Contributions

You can contribute to the material with useful links and sections that you find. Just make sure that you do not plagiarize when making contributions. Please review our guide on plagiarism.

Computer Needs

This course does not require a sophisticated computer. Most of the things can be done remotely. Even a Raspberry Pi with 4 or 8GB could be used as a terminal to log into remote computers. This will cost you between $50 - $100 dependent on which version and equipment. However, we will not teach you how to use or set up a Pi or another computer in this class. This is for you to do and find out.

In case you need to buy a new computer for school, make sure the computer is upgradable to 16GB of main memory. We do no longer recommend using HDD’s but use SSDs. Buy the fast ones, as not every SSD is the same. Samsung is offering some under the EVO Pro branding. Get as much memory as you can effort. Also, make sure you back up your work regularly. Either in online storage such as Google, or an external drive.

1.2.1 - Project Guidelines

We present here the project guidelines

All students of this class are doing a software project. (Some of our classes allow non software projects)

-

The final project is 50% of grade

-

All projects must have a well-written report as well as the software component

-

We must be able to run software from class GitHub repository. To do so you must include an appendix to your project report describing how to run your project.

- If you use containers you must decsribe how to create them from Docker files.

- If you usue ipy notebooks you must include a button or links so it can be run in Google collab

-

There are a useful set of example projects submitted in previous classes

- Last year’s Fall 2020 Big Data class

- Earlier Classes [https://github.com/cybertraining-dsc/pub/blob/master/docs/vonLaszewski-cloud-vol-9.pdf] (26 projects)

- Earlier Classes [https://github.com/cybertraining-dsc/pub/blob/master/docs//vonLaszewski-i523-v3.pdf] (45 projects)

- Earlier Classes [https://github.com/cybertraining-dsc/pub/blob/master/docs//vonLaszewski-i524-spring-2017.pdf] (28 projects)

-

In this class you do not have the option to work on a joint report, however you can collaborate.

-

Note: all reports and projects are open for everyone as they are open source.

Details

The major deliverable of the course is a software project with a report. The project must include a programming part to get a full grade. It is expected that you identify a suitable analysis task and data set for the project and that you learn how to apply this analysis as well as to motivate it. It is part of the learning outcome that you determine this instead of us giving you a topic. This topic will be presented by student in class April 1.

It is desired that the project has a novel feature in it. A project that you simply reproduce may not recieve the best grade, but this depends on what the analysis is and how you report it.

However “major advances” and solving of a full-size problem are not required. You can simplify both network and dataset to be able to complete project. The project write-up should describe the “full-size” realistic problem with software exemplifying an instructive example.

One goal of the class is to use open source technology wherever possible. As a beneficial side product of this, we are able to distribute all previous reports that use such technologies. This means you can cite your own work, for example, in your resume. For big data, we have more than 1000 data sets we point to.

Comments on Example Projects from previous classes

Warning: Please note that we do not make any quality assumptions to the published papers that we list here. It is up to you to identify outstanding papers.

Warning: Also note that these activities took place in previous classes, and the content of this class has since been updated or the focus has shifted. Especially chapters on Google Colab, AI, DL have been added to the course after the date of most projects. Also, some of the documents include an additional assignment called Technology review. These are not the same as the Project report or review we refer to here. These are just assignments done in 2-3 weeks. So please do not use them to identify a comparison with your own work. The activities we ask from you are substantially more involved than the technology reviews.

Format of Project

Plagiarism is of course not permitted. It is your responsibility to know what plagiarism is. We provide a detailed description book about it here, you can also do the IU plagiarism test to learn more.

All project reports must be provided in github.com as a markdown file.

All images must be in an images directory. You must use proper

citations. Images copied from the Internet must have a citation in the

Image caption. Please use the IEEE citation format and do not use

APA or harvard style. Simply use fotnotes in markdown but treat them as

regular citations and not text footnotes (e.g. adhere to the IEEE rules).

All projects and reports must

be checked into the Github repository. Please take a look at the example we created for you.

The report will be stored in the github.com.

./project/index.md

./project/images/mysampleimage.png

Length of Project Report

Software Project Reports: 2500 - 3000 Words.

Possible sources of datasets

Given next are links to collections of datasets that may be of use for homework assignments or projects.

-

[https://www.data.gov/] [https://github.com/caesar0301/awesome-public-datasets]

-

[https://www.quora.com/Where-can-I-find-large-datasets-open-to-the-public]

FAQ

-

Why you should not just paste and copy into the GitHub GUI?

We may make comments directly in your markdown or program files. If you just paste and copy you may overlook such comments. HEns only paste and copy small paragraphs. If you need to. The best way of using github is from commandline and using editors such as pycharm and emacs.

-

I like to do a project that relates to my company?

- Please go ahead and do so but make sure you use open-source data, and all results can be shared with everyone. If that is not the case, please pick a different project.

-

Can I use Word or Google doc, or LaTeX to hand in the final document?

-

No. you must use github.com and markdown.

-

Please note that exporting documents from word or google docs can result in a markdown file that needs substantial cleanup.

-

-

Where do I find more information about markdown and plagiarism

-

https://laszewski.github.io/publication/las-20-book-markdown/

-

[https://cloudmesh-community.github.io/pub/vonLaszewski-writing.pdf]{.ul}

-

Can I use an online markdown editor?

-

There are many online markdown editors available. One of them is [https://dillinger.io/]{.ul}.

Use them to write your document or check the one you have developed in another editor such as word or google docs. -

Remember, online editors can be dangerous in case you lose network connection. So we recommend to develop small portions and copy them into a locally managed document that you then check into github.com.

-

Github GUI (recommended): this works very well, but the markdown is slightly limited. We use hugo’s markdown.

-

pyCharm (recommended): works very well.

-

emacs (recommended): works very well

-

-

What level of expertise and effort do I need to write markdown?

- We taught 10-year-old students to use markdown in less than 5 minutes.

-

What level of expertise is needed to learn BibTeX

- We have taught BibTeX to inexperienced students while using jabref in less than an hour (but it is not required for this course). You can use footnotes while making sure that the footnotes follow the IEEE format.

-

How can I get IEEE formatted footnotes?

- Simply use jabref and paste and copy the text it produces.

-

Will there be more FAQ’s?

-

Please see our book on markdown.

-

Discuss your issue in piazza; if it is an issue that is not yet covered, we will add it to the book.

-

-

How do I write URLs?

-

Answered in book

-

Note: All URL’s must be either in [TEXT](URLHERE) or <URLHERE> format.

-

1.3 - Big Data 2020

This course introduces the students to Cloud Big Data Applications we provide the following sections

Class Material

As part of this class, we will be using a variety of sources. To simplify the presentation we provide them in a variety of smaller packaged material including books, lecture notes, slides, presentations and code.

Note: We will regularly update the course material, so please always download the newest version. Some browsers try to be fancy and cache previous page visits. So please make sure to refresh the page.

We will use the following material:

Course Lectures and Management

|

Course Lectures. These meeting notes are updated weekly (Web) |

Lectures on Particular Topics

Introduction to AI-Driven Digital Transformation

|

Introduction to AI-Driven Digital Transformation (Web) |

Big Data Usecases Survey

Introduction to Google Colab

|

A Gentle Introduction to Google Colab (Web) |

|

A Gentle Introduction to Python on Google Colab (Web) |

|

MNIST Classification on Google Colab (Web) |

Material

Physics

Sports

Health and Medicine

AI in Banking

Transportation Systems

Space and Energy

Mobility (Industry)

Cloud Computing

Commerce

Complementary Material

- When working with books, ePubs typically display better than PDF. For ePub, we recommend using iBooks on macOS and calibre on all other systems.

Piazza

|

Piazza. The link for all those that participate in the IU class to its class Piazza. |

Scientific Writing with Markdown

|

Scientific Writing with Markdown (ePub) (PDF) |

Git Pull Request

|

Git Pull Request. Here you will learn how to do a simple git pull request either via the GitHub GUI or the git command line tools |

Introduction to Linux

This course does not require you to do much Linux. However, if you do need it, we recommend the following as starting point listed

The most elementary Linux features can be learned in 12 hours. This includes bash, editor, directory structure, managing files. Under Windows, we recommend using gitbash, a terminal with all the commands built-in that you would need for elementary work.

|

Introduction to Linux (ePub) (PDF) |

Older Course Material

Older versions of the material are available at

|

Lecture Notes 2020 (ePub) (PDF) |

|

Big Data Applications (Nov. 2019) (ePub) (PDF) |

|

Big Data Applications (2018) (ePub) (PDF) |

Contributions

You can contribute to the material with useful links and sections that you find. Just make sure that you do not plagiarize when making contributions. Please review our guide on plagiarism.

Computer Needs

This course does not require a sophisticated computer. Most of the things can be done remotely. Even a Raspberry Pi with 4 or 8GB could be used as a terminal to log into remote computers. This will cost you between $50 - $100 dependent on which version and equipment. However, we will not teach you how to use or set up a Pi or another computer in this class. This is for you to do and find out.

In case you need to buy a new computer for school, make sure the computer is upgradable to 16GB of main memory. We do no longer recommend using HDD’s but use SSDs. Buy the fast ones, as not every SSD is the same. Samsung is offering some under the EVO Pro branding. Get as much memory as you can effort. Also, make sure you back up your work regularly. Either in online storage such as Google, or an external drive.

1.4 - REU 2020

This course introduces the REU students to various topics in Intelligent Systems Engineering. The course was taught in Summer 2020.

Computational Foundations

- Brief Overview of the Praxis AI Platform and Overview of the Learning Paths

- Accessing Praxis Cloud

- Introduction To Linux and the Command Line

- Jupyter Notebooks

- A Brief Intro to Machine Learning in Google Colaboratory

Programming with Python

Selected chapters from out python Book

- Analyzing Patient Data

- Loops Lists Analyzing Data

- Functions Errors Exceptions

- Defensive Programming Debugging

|

Introduction to Python (ePub) (PDF) |

Coronavirus Overview

Basic Virology and Immunology

Clinical Presentation

|

|

Clinical Presentation |

Management of COVID-19

|

|

Management of COVID-19 |

Investigational Therapeutics and Vaccine Development

|

|

Investigational Therapeutics and Vaccine Development |

Coronavirus Genomics Superlab

Pull from Computational Biology Journey

SARS by the numbers

|

SARS by the numbers |

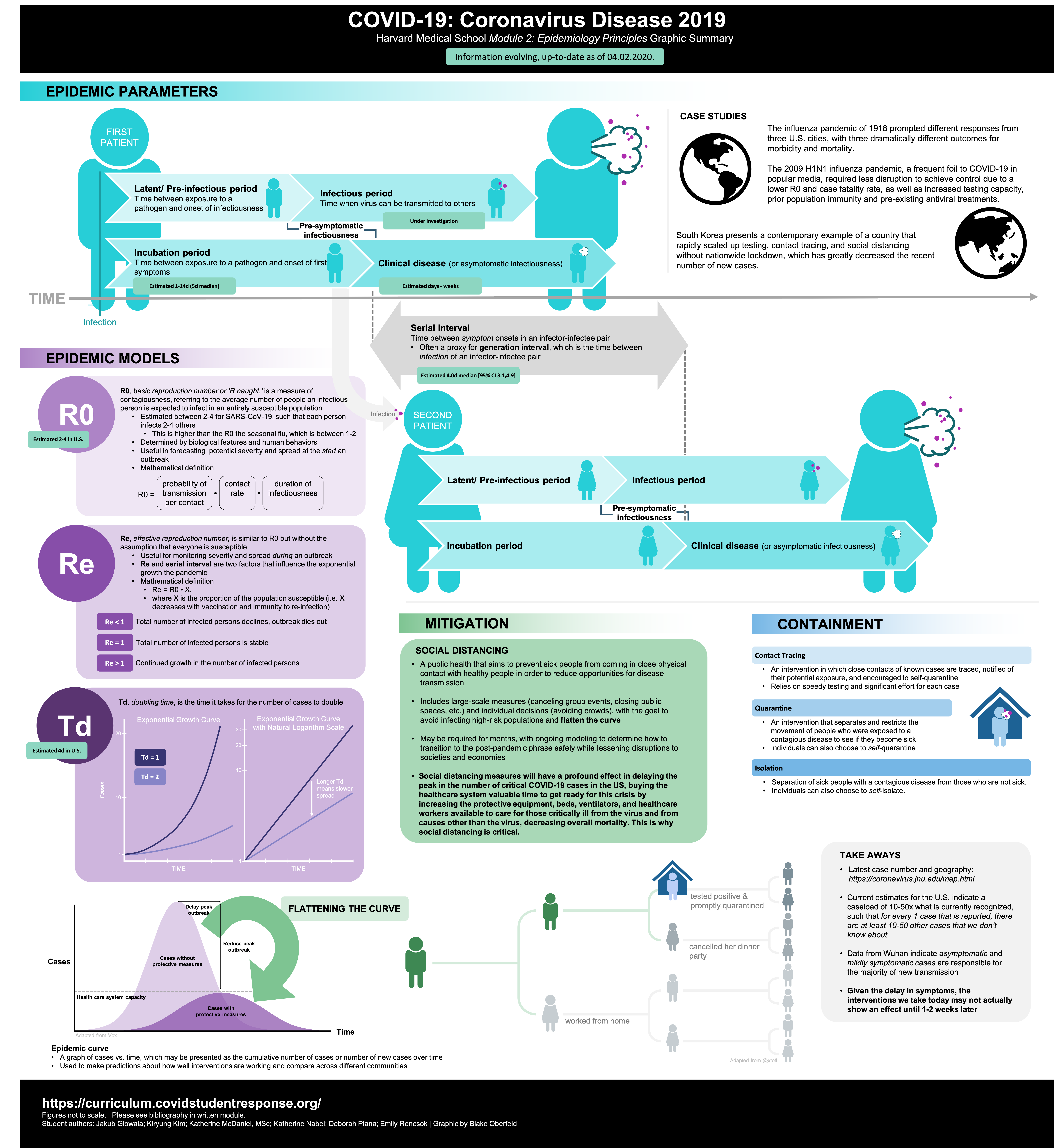

Epidemiology

Introduction to Epidemiological Terms

|

|

Principals |

|

Summary |

|

|

Introduction |

Where Are We Now?

|

|

Where Are We Now? |

Where Will We Be Next?

|

|

Where Will We Be Next? |

Approaches to Long-Term Planning

|

|

Approaches to Long-Term Planning |

Case Studies

|

|

1918-influenza-pandemic |

|

|

2009 H1N1 pandemic |

|

Soutch Korea 2020 |

Introduction to AI/Deep Learning

Deep Learning in Health and Medicine A: Overall Trends

Deep Learning in Health and Medicine B: Diagnostics

Deep Learning in Health and Medicine C: Examples

Deep Learning in Health and Medicine D: Impact of Corona Virus Covid-19

Deep Learning in Health and Medicine E: Corona Virus Covid-19 and Recession

Deep Learning in Health and Medicine F: Tackling Corona Virus Covid-19

Deep Learning in Health and Medicine G: Data and Computational Science and The Corona Virus Covid-19

Deep Learning in Health and Medicine H: Screening Covid-19 Drug Candidates

Deep Learning in Health and Medicine I: Areas for Covid19 Study and Pandemics as Complex Systems

REU Projects

- REU Individual Project Overview and Expectations

- Accessing the Coronavirus Datasets

- Primer on How to Analyze the Data

Effect of AI on Industry and its Transformation Introduction to AI First Engineering

Examples of Applications of Deep Learning

Optimization – a key goal of Statistics, AI and Deep Learning

Learn the Deep Learning important words/components

Deep Learning and Imaging: It’s first greast success

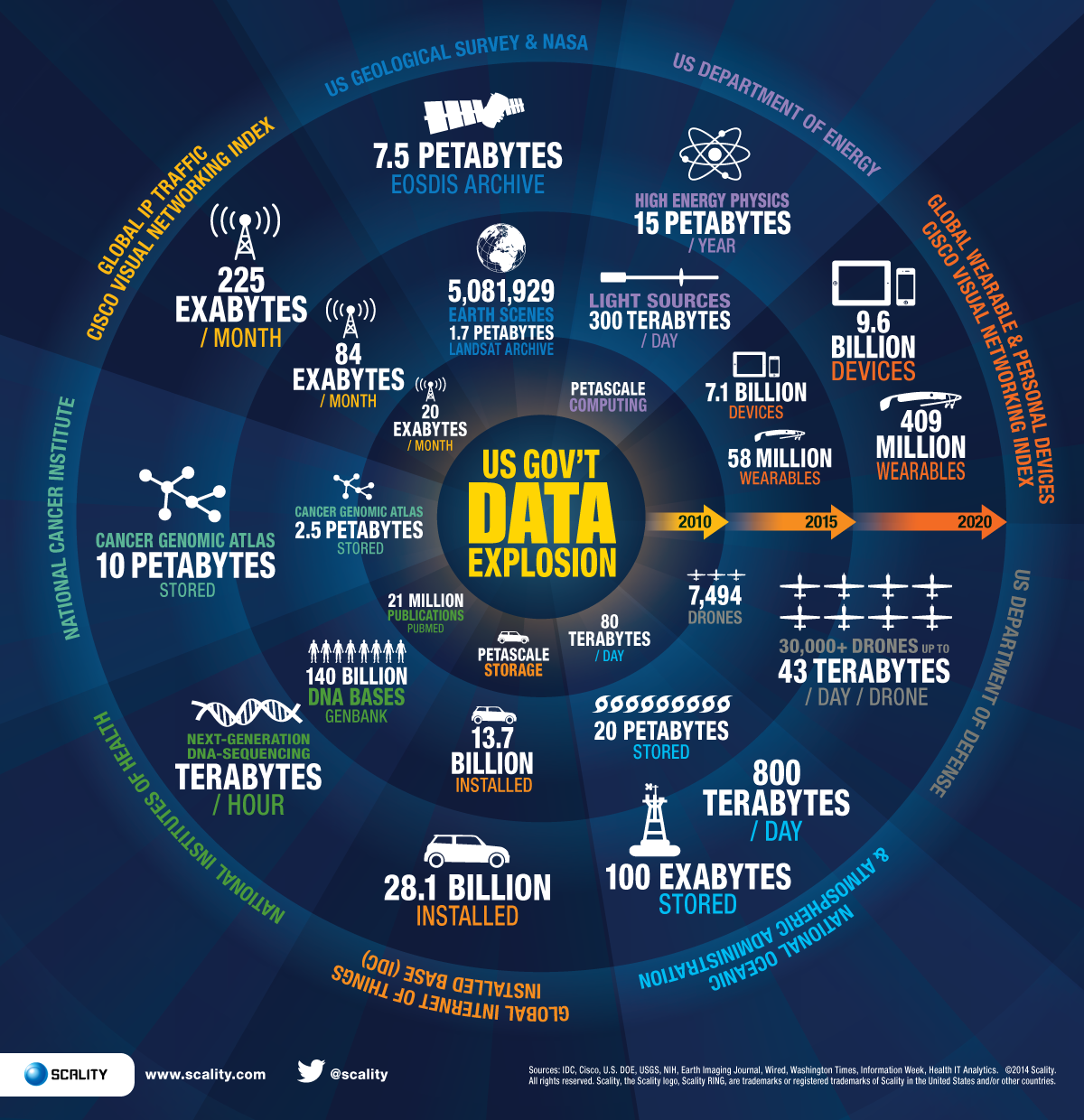

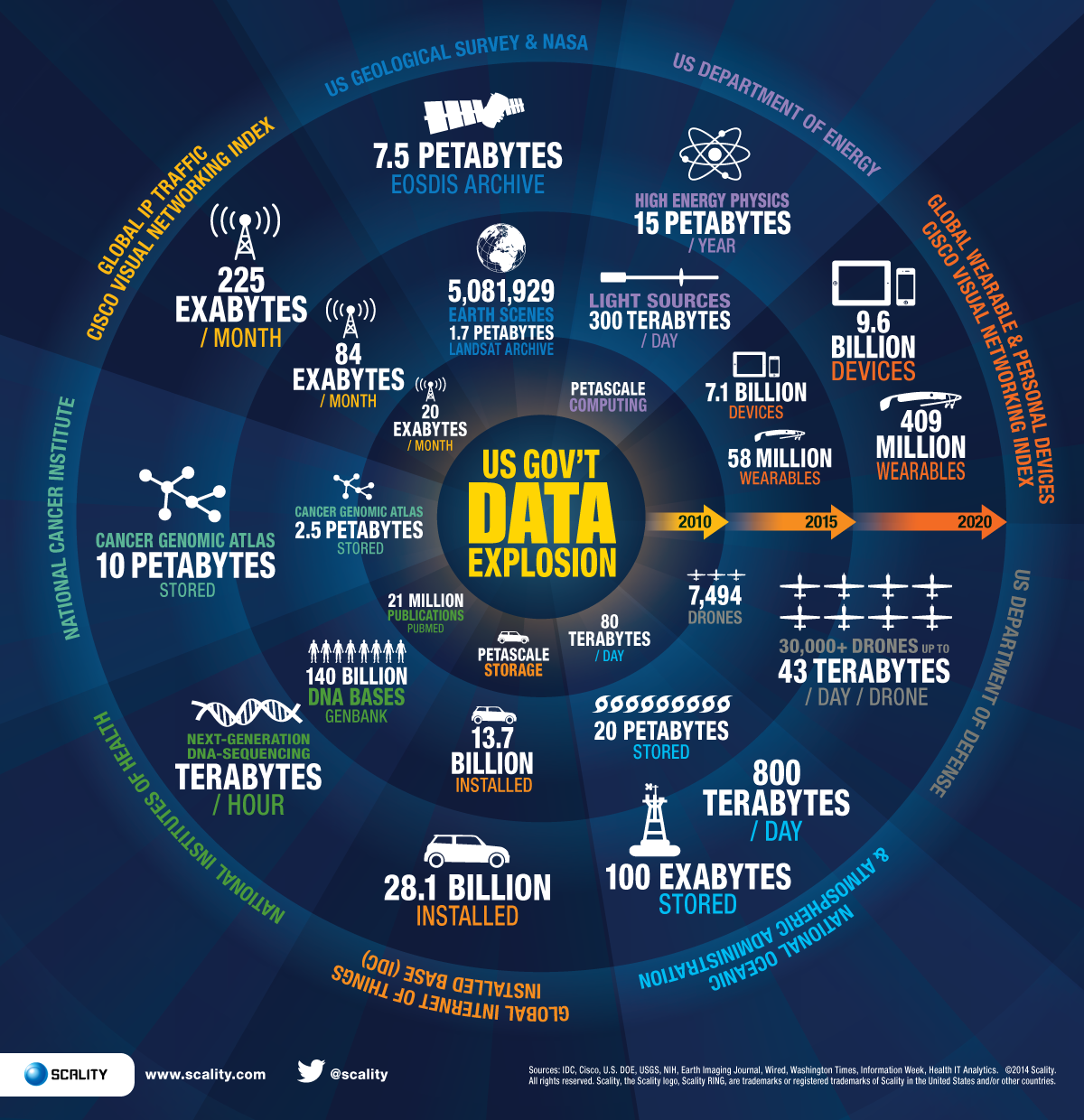

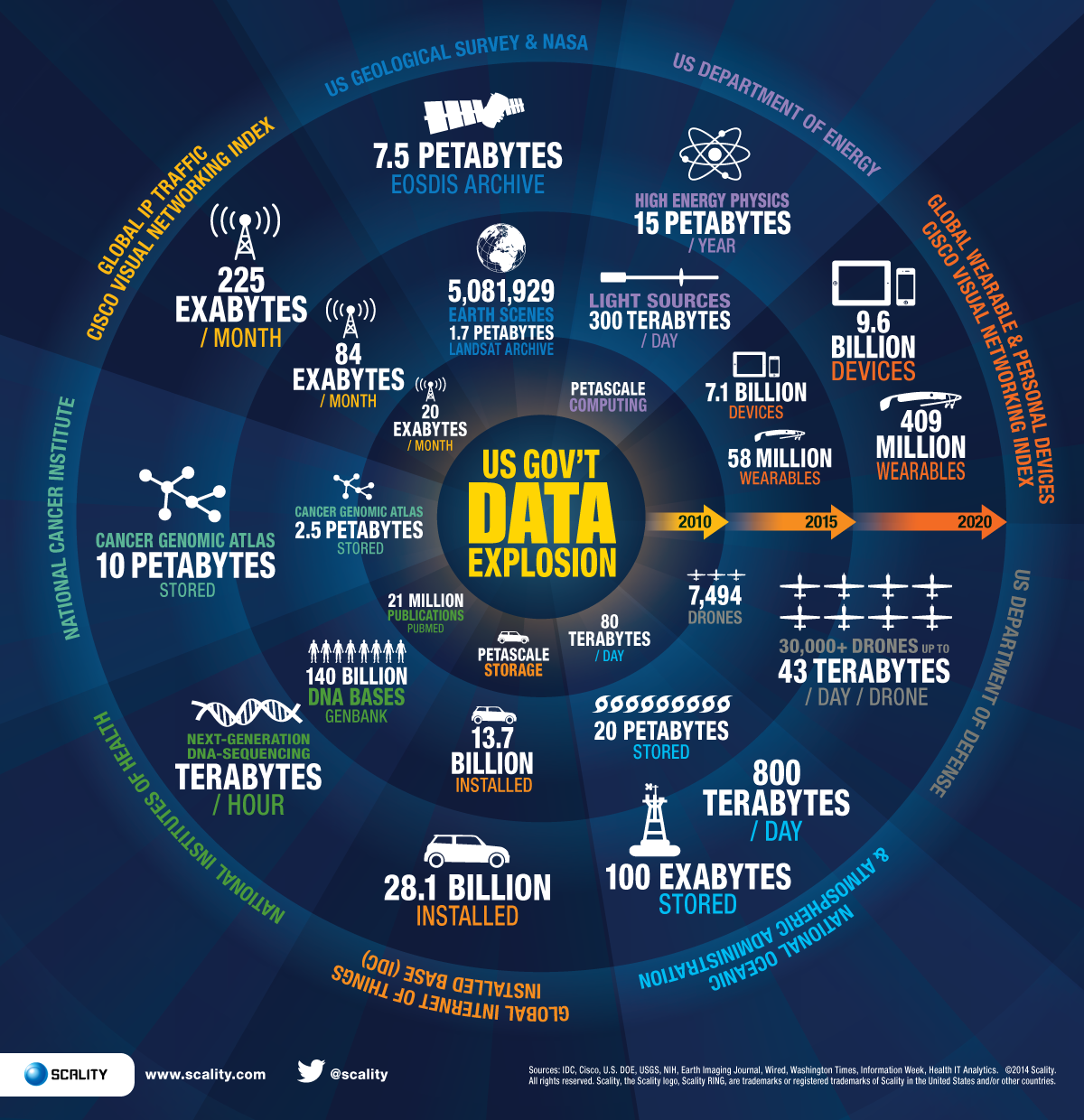

For the BIg Data Class we revised the following material Big Data Overview Fall 2019

Big Data, technology, clouds and selected applications

|

20 Videos covering Big Data, technology, clouds and selected applications |

Cloud Computing

|

18 Videos covering cloud computing |

1.5 - Big Data 2019

The document is available as an online book in ePub and PDF

For ePub, we recommend using iBooks on macOS and calibre on all other systems.

1.6 - Cloud Computing

The document is available as an online book in ePub and PDF from the following Web Page:

For ePub, we recommend using iBooks on Macos and calibre on all other systems.

THe book has over 590 pages. Topics coverd include:

- DEFINITION OF CLOUD COMPUTING

- CLOUD DATACENTER

- CLOUD ARCHITECTURE

- CLOUD REST

- NIST

- GRAPHQL

- HYPERVISOR

- Virtualization

- Virtual Machine Management with QEMU

- Virtualization

- IAAS

- Multipass

- Vagrant

- Amazon Web Services

- Microsoft Azure

- Google IaaS Cloud Services

- OpenStack

- Python Libcloud

- AWS Boto

- Cloudmesh

- MAPREDUCE

- HADOOP

- SPARK

- HADOOP ECOSYSTEM

- TWISTER

- HADOOP RDMA

- CONTAINERS

- DOCKER

- KUBERNETES

- Singularity

- SERVERLESS

- FaaS

- Apache OpenWhisk

- Kubeless

- OpenFaaS ` * OpenLamda

- MESSAGING

- MQTT

- Apache Avro

- GO

1.7 - Data Science to Help Society

COVID 101, Climate Change and their Technologies

General Material

Python Language

Need to add material here

Using Google CoLab and Jupyter notebooks

-

For questions on software, please mail Fugang Wang

- Fugang can also give you help on python including introductory material if you need extra

-

Introduction to Machine Learning Using TensorFlow (pptx)

-

Introduction to using Colab from IU class E534 with videos and note (google docs) This unit includes 3 videos

-

Deep Learning for MNIST The docs are located alongside the video at

-

This teaches how to do deep learning on a handwriting example from NIST which is used in many textbooks

-

In the latter part of the document, a homework description is given. That can be ignored!

-

There are 5 videos

-

Jupyter notebook on Google Colab for COVID-19 data analysis ipynb

Follow-up on Discussion of AI remaking Industry worldwide

-

Class on AI First Engineering with 35 videos describing technologies and particular industries Commerce, Mobility, Banking, Health, Space, Energy in detail (youtube playlist)

-

Introductory Video (one of 35) discussing the Transformation - Industries invented and remade through AI (youtube)

-

Some online videos on deep learning

- Introduction to AI First Engineering (youtube)

- Examples of Applications of Deep Learning (youtube)

Optimization -- a key in Statistics, AI and Deep Learning (youtube)

Learn the Deep Learning important words and parts (youtube)

Deep Learning and Imaging: It's first great success (youtube)

Covid Material

Covid Biology Starting point

Medical Student COVID-19 Curriculum - COVID-19 Curriculum Module 1 and then module 2

Compucell3D Modelling material

- Download

- Manual

- List of NanoHub Tools

- For help please ask Juliano Ferrari Gianlupi

Interactive Two-Part Virtual Miniworkshop on Open-Source CompuCell3D

Multiscale, Virtual-Tissue Spatio-Temporal Simulations of COVID-19 Infection, Viral Spread and Immune Response and Treatment Regimes** VTcovid19Symp

- Part I: Will be presented twice:

- First Presentation June 11th, 2020, 2PM-5PM EST (6 PM- 9PM GMT)

- Second Presentation June 12th, 9AM - 12 noon EST (1 PM - 4 PM GMT)

- Part II: Will be presented twice:

- First Presentation June 18th, 2020, 2PM-5PM EST (6 PM- 9PM GMT)

- Second Presentation June 19th, 9AM - 12 noon EST (1 PM - 4 PM GMT)

Topics in Covid 101

- Biology1 and Harvard medical school material above

- Epidemiology2

- Public Health: Social Distancing and Policies3

- HPC4

- Data Science 5,6,7

- Modeling 8,9

Climate Change Material

Topics in Climate Change (Russell Hofmann)

-

This also needs Colab and deep learning background

-

A cursory and easy to understand review of climate issues in terms of AI: Tackling Climate Change with Machine Learning

-

For application in extreme weather event prediction, an area where traditional modelling methods have always struggled:

-

AI4ESS Summer School: Anyone who is interested in using Machine learning for climate science research I highly recommend you register for the Artificial Intelligence for Earth System Science summer school & interactive workshops which conveniently runs June 22^nd^ to 26^th^. Prior Experience with tensorflow/keras via google co-lab should be all the introductory skill needed to follow along. Register ASAP. https://www2.cisl.ucar.edu/events/summer-school/ai4ess/2020/artificial-intelligence-earth-system-science-ai4ess-summer-school

-

Kaggle Climate Change Climate Change Forecast - SARIMA Model with classic time series methods

-

Plotting satellite data Notebook (ipynb)

-

Accessing UCAR data (docx)

-

Hydrology with RNN and LSTM’s (more than 20 PDF’s)

References

-

Y. M. Bar-On, A. I. Flamholz, R. Phillips, and R. Milo, “SARS-CoV-2 (COVID-19) by the numbers,” arXiv [q-bio.OT], 28-Mar-2020. http://arxiv.org/abs/2003.12886 ↩︎

-

Jiangzhuo Chen, Simon Levin, Stephen Eubank, Henning Mortveit, Srinivasan Venkatramanan, Anil Vullikanti, and Madhav Marathe, “Networked Epidemiology for COVID-19,” Siam News, vol. 53, no. 05, Jun. 2020. https://sinews.siam.org/Details-Page/networked-epidemiology-for-covid-19 ↩︎

-

A. Adiga, L. Wang, A. Sadilek, A. Tendulkar, S. Venkatramanan, A. Vullikanti, G. Aggarwal, A. Talekar, X. Ben, J. Chen, B. Lewis, S. Swarup, M. Tambe, and M. Marathe, “Interplay of global multi-scale human mobility, social distancing, government interventions, and COVID-19 dynamics,” medRxiv - Public and Global Health, 07-Jun-2020. http://dx.doi.org/10.1101/2020.06.05.20123760 ↩︎

-

D. Machi, P. Bhattacharya, S. Hoops, J. Chen, H. Mortveit, S. Venkatramanan, B. Lewis, M. Wilson, A. Fadikar, T. Maiden, C. L. Barrett, and M. V. Marathe, “Scalable Epidemiological Workflows to Support COVID-19 Planning and Response,” May 2020. ↩︎

-

Luca Magri and Nguyen Anh Khoa Doan, “First-principles Machine Learning for COVID-19 Modeling,” Siam News, vol. 53, no. 5, Jun. 2020. https://sinews.siam.org/Details-Page/first-principles-machine-learning-for-covid-19-modeling ↩︎

-

[Robert Marsland and Pankaj Mehta, “Data-driven modeling reveals a universal dynamic underlying the COVID-19 pandemic under social distancing,” arXiv [q-bio.PE], 21-Apr-2020. http://arxiv.org/abs/2004.10666 ↩︎

-

Geoffrey Fox, “Deep Learning Based Time Evolution.”. http://dsc.soic.indiana.edu/publications/Summary-DeepLearningBasedTimeEvolution.pdf. ↩︎

-

T. J. Sego, J. O. Aponte-Serrano, J. F. Gianlupi, S. Heaps, K. Breithaupt, L. Brusch, J. M. Osborne, E. M. Quardokus, and J. A. Glazier, “A Modular Framework for Multiscale Spatial Modeling of Viral Infection and Immune Response in Epithelial Tissue,” BioRxiv, 2020. https://www.biorxiv.org/content/10.1101/2020.04.27.064139v2.abstract ↩︎

-

Yafei Wang, Gary An, Andrew Becker, Chase Cockrell, Nicholson Collier, Morgan Craig, Courtney L. Davis, James Faeder, Ashlee N. Ford Versypt, Juliano F. Gianlupi, James A. Glazier, Randy Heiland, Thomas Hillen, Mohammad Aminul Islam, Adrianne Jenner, Bing Liu, Penelope A Morel, Aarthi Narayanan, Jonathan Ozik, Padmini Rangamani, Jason Edward Shoemaker, Amber M. Smith, Paul Macklin, “Rapid community-driven development of a SARS-CoV-2 tissue simulator,” BioRxiv, 2020. https://www.biorxiv.org/content/10.1101/2020.04.02.019075v2.abstract ↩︎

-

Gagne II, D. J., S. E. Haupt, D. W. Nychka, and G. Thompson, 2019: Interpretable Deep Learning for Spatial Analysis of Severe Hailstorms. Mon. Wea. Rev., 147, 2827–2845, https://doi.org/10.1175/MWR-D-18-0316.1 ↩︎

1.8 - Intelligent Systems

1.9 - Linux

Linux will be used on many computers to develop and interact with cloud services. Especially popular are the command line tools that even exist on Windows. Thus we can have a uniform environment on all platforms using the bash shell.

For ePub, we recommend using iBooks on MacOS and calibre on all other systems.

Topics covered include:

- Linux Shell

- Perl one liners

- Refcards

- SSH

- keygen

- agents

- port forwarding

- Shell on Windows

- ZSH

1.10 - Markdown

An important part of any scientific research is to communicate and document it. Previously we used LaTeX in this class to provide the ability to contribute professional-looking documents. However, here we will describe how you can use markdown to create scientific documents. We use markdown also on the Web page.

Scientific Writing with Markdown

The document is available as an online book in ePub and PDF

For ePub, we recommend using iBooks on macOS and calibre on all other systems.

Topics covered include:

- Plagiarism

- Writing Scientific Articles

- Markdown (Pandoc format)

- Markdown for presentations

- Writing papers and reports with markdown

- Emacs and markdown as an editor

- Graphviz in markdown

1.11 - OpenStack

OpenStack is usable via command line tools and REST APIs. YOu will be able to experiment with it on Chameleon Cloud.

OpenStack with Chameleon Cloud

We have put together from the chameleon cloud manual a subset of information that is useful for using OpenStack. This focusses mostly on Virtual machine provisioning. The reason we put our own documentation here is to promote more secure utilization of Chameleon Cloud.

Additional material on how to uniformly access OpenStack via a multicloud command line tool is available at:

We highly recommend you use the multicloud environment as it will allow you also to access AWS, Azure, Google, and other clouds from the same command line interface.

The Chameleon Cloud document is availanle as online book in ePub and PDF from the following Web Page:

The book is available in ePub and PDF.

For ePub, we recommend using iBooks on MacOS and calibre on all other systems.

Topics covered include:

- Using Chameleoncloud more securely

- Resources

- Hardware

- Charging

- Getting STarted

- Virtual Machines

- Commandline Interface

- Horizon

- Heat

- Bare metal

- FAQ

1.12 - Python

Python is an easy to learn programming language. It has efficient high-level data structures and a simple but effective approach to object-oriented programming. Python’s simple syntax and dynamic typing, together with its interpreted nature, make it an ideal language for scripting and rapid application development in many areas on most platforms.

Introduction to Python

This online book will provide you with enough information to conduct your programming for the cloud in python. Although this the introduction was first developed for Cloud Computing related classes, it is a general introduction suitable for other classes.

The document is available as an online book in ePub and PDF

For ePub, we recommend using iBooks on macOS and calibre on all other systems.

Topics covered include:

- Python Installation

- Using Multiple different Python Versions

- First Steps

- REPL

- Editors

- Google Colab

- Python Language

- Python Modules

- Selected Libraries

- Python Cloudmesh Common Library

- Basic Matplotlib

- Basic Numpy

- Python Data Management

- Python Data Formats

- Python MongoDB

- Parallelism in Python

- Scipy

- Scikitlearn

- Elementary Machine Learning

- Dask

- Applications

- Fingerprint Matching

- Face Detection

1.13 - MNIST Classification on Google Colab

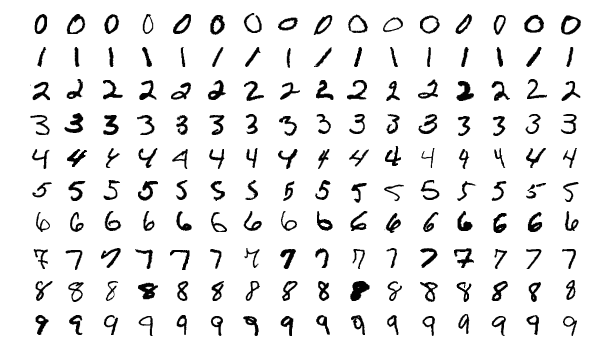

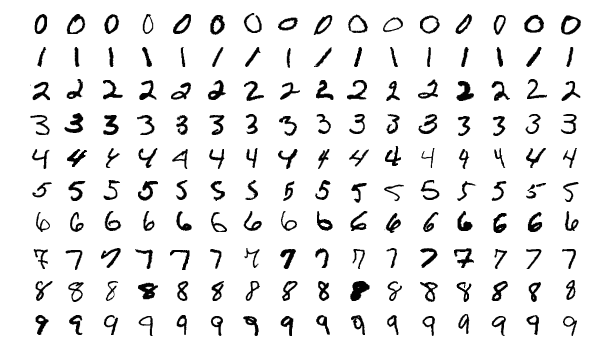

We discuss in this module how to create a simple IPython Notebook to solve an image classification problem. MNIST contains a set of pictures.

Prerequisite

- Knowledge of Python

- Google account

Effort

- 1 hour

Topics covered

- Using Google Colab

- Running an AI application on Google Colab

1. Introduction to Google Colab

This module will introduce you to how to use Google Colab to run deep learning models.

|

2. (Optional) Basic Python in Google Colab

In this module, we will take a look at some fundamental Python Concepts needed for day-to-day coding.

|

3. MNIST On Google colab

In this module, we discuss how to create a simple IPython Notebook to solve an image classification problem. MNIST contains a set of pictures

|

Assignments

- Get an account on Google if you do not have one.

- Do the optional Basic Python Colab lab module

- Do the MNIST Colab module.

References

2 - Books

2.1 - Python

Gregor von Laszewski (laszewski@gmail.com)

2.1.1 - Introduction to Python

Gregor von Laszewski (laszewski@gmail.com)

Learning Objectives

Learning Objectives

- Learn quickly Python under the assumption you know a programming language

- Work with modules

- Understand docopts and cmd

- Conduct some Python examples to refresh your Python knowledge

- Learn about the

mapfunction in Python - Learn how to start subprocesses and redirect their output

- Learn more advanced constructs such as multiprocessing and Queues

- Understand why we do not use

anaconda - Get familiar with

venv

Portions of this lesson have been adapted from the official Python Tutorial copyright Python Software Foundation.

Python is an easy-to-learn programming language. It has efficient high-level data structures and a simple but effective approach to object-oriented programming. Python’s simple syntax and dynamic typing, together with its interpreted nature, make it an ideal language for scripting and rapid application development in many areas on most platforms. The Python interpreter and the extensive standard library are freely available in source or binary form for all major platforms from the Python Web site, https://www.python.org/, and may be freely distributed. The same site also contains distributions of and pointers to many free third-party Python modules, programs and tools, and additional documentation. The Python interpreter can be extended with new functions and data types implemented in C or C++ (or other languages callable from C). Python is also suitable as an extension language for customizable applications.

Python is an interpreted, dynamic, high-level programming language suitable for a wide range of applications.

The philosophy of Python is summarized in The Zen of Python as follows:

- Explicit is better than implicit

- Simple is better than complex

- Complex is better than complicated

- Readability counts

The main features of Python are:

- Use of indentation whitespace to indicate blocks

- Object orient paradigm

- Dynamic typing

- Interpreted runtime

- Garbage collected memory management

- a large standard library

- a large repository of third-party libraries

Python is used by many companies and is applied for web development, scientific computing, embedded applications, artificial intelligence, software development, and information security, to name a few.

The material collected here introduces the reader to the basic concepts and features of the Python language and system. After you have worked through the material you will be able to:

- use Python

- use the interactive Python interface

- understand the basic syntax of Python

- write and run Python programs

- have an overview of the standard library

- install Python libraries using venv for multi-Python interpreter development.

This book does not attempt to be comprehensive and cover every single feature, or even every commonly used feature. Instead, it introduces many of Python’s most noteworthy features and will give you a good idea of the language’s flavor and style. After reading it, you will be able to read and write Python modules and programs, and you will be ready to learn more about the various Python library modules.

In order to conduct this lesson you need

- A computer with Python 3.8.1

- Familiarity with command line usage

- A text editor such as PyCharm, emacs, vi, or others. You should identify which works best for you and set it up.

References

Some important additional information can be found on the following Web pages.

Python module of the week is a Web site that provides a number of short examples on how to use some elementary python modules. Not all modules are equally useful and you should decide if there are better alternatives. However, for beginners, this site provides a number of good examples

- Python 2: https://pymotw.com/2/

- Python 3: https://pymotw.com/3/

2.1.2 - Python Installation

Gregor von Laszewski (laszewski@gmail.com)

Learning Objectives

Learning Objectives

- Learn how to install Python.

- Find additional information about Python.

- Make sure your Computer supports Python.

In this section, we explain how to install python 3.8 on a computer. Likely much of the code will work with earlier versions, but we do the development in Python on the newest version of Python available at https://www.python.org/downloads .

Hardware

Python does not require any special hardware. We have installed Python not only on PC’s and Laptops but also on Raspberry PI’s and Lego Mindstorms.

However, there are some things to consider. If you use many programs on your desktop and run them all at the same time, you will find that in up-to-date operating systems, you will find yourself quickly out of memory. This is especially true if you use editors such as PyCharm, which we highly recommend. Furthermore, as you likely have lots of disk access, make sure to use a fast HDD or better an SSD.

A typical modern developer PC or Laptop has 16GB RAM and an SSD. You can certainly do Python on a $35-$55 Raspberry PI, but you probably will not be able to run PyCharm. There are many alternative editors with less memory footprint available.

Python 3.9

Here we discuss how to install Python 3.9 or newer on your operating system. It is typically advantageous to use a newer version of python so you can leverage the latest features. Please be aware that many operating systems come with older versions that may or may not work for you. YOu always can start with the version that is installed and if you run into issues update later.

Python 3.9 on macOS

You want a number of useful tools on your macOS. This includes git, make, and a c compiler. All this can be installed with Xcode which is available from

Once you have installed it, you need to install macOS XCode command-line tools:

$ xcode-select --install

The easiest installation of Python is to use the installation from

https://www.python.org/downloads. Please, visit the page and follow the

instructions to install the python .pkg file. After this install, you have

python3 available from the command line.

Python 3.9 on macOS via Homebrew

Homebrew may not provide you with the newest version, so we recommend using the install from python.org if you can.

An alternative installation is provided from Homebrew. To use this

install method, you need to install Homebrew first. Start the process by

installing Python 3 using homebrew. Install homebrew using the

instruction in their web page:

$ /usr/bin/ruby -e "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/install)"

Then you should be able to install Python using:

$ brew install python

Python 3.9 on Ubuntu 20.04

The default version of Python on Ubuntu 20.04 is 3.8. However, you can benefit from newer version while either installing them through python.org or adding them as follows:

$ sudo apt-get update

$ sudo apt install software-properties-common

$ sudo add-apt-repository ppa:deadsnakes/ppa -y

$ sudo apt-get install python3.9 python3-dev -y

Now you can verify the version with

$ python3.9 --version

which should be 3.9.5 or newer.

Now we will create a new virtual environment:

$ python3.9 -m venv --without-pip ~/ENV3

Now you must edit the ~/.bashrc file and add the following line at the end:

alias ENV3="source ~/ENV3/bin/activate"

ENV3

Now activate the virtual environment using:

$ source ~/.bashrc

You can install the pip for the virtual environment with the commands:

$ curl "https://bootstrap.pypa.io/get-pip.py" -o "get-pip.py"

$ python get-pip.py

$ rm get-pip.py

$ pip install -U pip

Prerequisite Windows 10

Python 3.9.5 can be installed on Windows 10 using: https://www.python.org/downloads

Let us assume you choose the Web-based installer than you click on the

file in the edge browser (make sure the account you use has

administrative privileges). Follow the instructions that the installer

gives. Important is that you select at one point [x] Add to Path.

There will be an empty checkmark about this that you will click on.

Once it is installed chose a terminal and execute

python --version

However, if you have installed conda for some reason, you need to read up on how to install 3.9.5 Python in conda or identify how to run conda and python.org at the same time. We often see others are giving the wrong installation instructions. Please also be aware that when you uninstall conda it is not sufficient t just delete it. You will have t make sure that you usnet the system variables automatically set at install time. THi includes. modifications on Linux and or Mac in .zprofile, .bashrc and .bash_profile. In windows, PATH and other environment variables may have been modified.

Python in the Linux Subsystem

An alternative is to use Python from within the Linux Subsystem. But that has some limitations, and you will need to explore how to access the file system in the subsystem to have a smooth integration between your Windows host so you can, for example, use PyCharm.

To activate the Linux Subsystem, please follow the instructions at

A suitable distribution would be

However, as it may use an older version of Python, you may want to update it as previously discussed

Using venv

This step is needed if you have not yet already installed a

venv for Python to make sure you are not interfering with your system

python. Not using a venv could have catastrophic consequences and the

destruction of your operating system tools if they really on Python. The

use of venv is simple. For our purposes we assume that you use the

directory:

~/ENV3

Follow these steps first:

First cd to your home directory. Then execute

$ python3 -m venv ~/ENV3

$ source ~/ENV3/bin/activate

You can add at the end of your .bashrc (ubuntu) or .bash_profile or

.zprofile` (macOS) file the line

If you like to activate it when you start a new terminal, please add

this line to your .bashrc or .bash_profile or .zprofile` file.

$ source ~/ENV3/bin/activate

so the environment is always loaded. Now you are ready to install Cloudmesh.

Check if you have the right version of Python installed with

$ python --version

To make sure you have an up to date version of pip issue the command

$ pip install pip -U

Install Python 3.9 via Anaconda

We are not recommending ether to use conda or anaconda. If you do so, it is your responsibility to update the information in this section in regards to it.

:o2: We will check your python installation, and if you use conda and anaconda you need to work on completing this section.

Download conda installer

Miniconda is recommended here. Download an installer for Windows, macOS, and Linux from this page: https://docs.conda.io/en/latest/miniconda.html

Install conda

Follow instructions to install conda for your operating systems:

- Windows. https://conda.io/projects/conda/en/latest/user-guide/install/windows.html

- macOS. https://conda.io/projects/conda/en/latest/user-guide/install/macos.html

- Linux. https://conda.io/projects/conda/en/latest/user-guide/install/linux.html

Install Python via conda

To install Python 3.9.5 in a virtual environment with conda please use

$ cd ~

$ conda create -n ENV3 python=3.9.5

$ conda activate ENV3

$ conda install -c anaconda pip

$ conda deactivate ENV3

It is very important to make sure you have a newer version of pip installed. After you installed and created the ENV3 you need to activate it. This can be done with

$ conda activate ENV3

If you like to activate it when you start a new terminal, please add

this line to your .bashrc or .bash_profile

If you use zsh please add it to .zprofile instead.

Version test

Regardless of which version you install, you must do a version test to make sure you have the correct python and pip versions:

$ python --version

$ pip --version

If you installed everything correctly you should see

Python 3.9.5

pip 21.1.2

or newer.

2.1.3 - Interactive Python

Gregor von Laszewski (laszewski@gmail.com)

Python can be used interactively. You can enter the interactive mode by entering the interactive loop by executing the command:

$ python

You will see something like the following:

$ python

Python 3.9.5 (v3.9.5:0a7dcbdb13, May 3 2021, 13:17:02)

[Clang 6.0 (clang-600.0.57)] on darwin

Type "help", "copyright", "credits" or "license" for more information.

>>>

The >>> is the prompt used by the interpreter. This is similar to bash

where commonly $ is used.

Sometimes it is convenient to show the prompt when illustrating an example. This is to provide some context for what we are doing. If you are following along you will not need to type in the prompt.

This interactive python process does the following:

- read your input commands

- evaluate your command

- print the result of the evaluation

- loop back to the beginning.

This is why you may see the interactive loop referred to as a REPL: Read-Evaluate-Print-Loop.

REPL (Read Eval Print Loop)

There are many different types beyond what we have seen so far, such as dictionariess, lists, sets. One handy way of using the interactive python is to get the type of a value using type():

>>> type(42)

<type 'int'>

>>> type('hello')

<type 'str'>

>>> type(3.14)

<type 'float'>

You can also ask for help about something using help():

>>> help(int)

>>> help(list)

>>> help(str)

Using help() opens up a help message within a pager. To navigate you can use the spacebar to go down a page w to go up a page, the arrow keys to go up/down line-by-line, or q to exit.

Interpreter

Although the interactive mode provides a convenient tool to test

things out you will see quickly that for our class we want to use the

python interpreter from the command line. Let us assume the program is

called prg.py. Once you have written it in that file you simply can call it with

$ python prg.py

It is important to name the program with meaningful names.

2.1.4 - Editors

Gregor von Laszewski (laszewski@gmail.com)

This section is meant to give an overview of the Python editing tools needed for completing this course. There are many other alternatives; however, we do recommend using PyCharm.

PyCharm

PyCharm is an Integrated Development Environment (IDE) used for programming in Python. It provides code analysis, a graphical debugger, an integrated unit tester, and integration with git.

[Video

Python in 45 minutes

Next is an additional community YouTube video about the Python programming language. Naturally, there are many alternatives to this video, but it is probably a good start. It also uses PyCharm which we recommend.

[Video

How much you want to understand Python is a bit up to you. While it is good to know classes and inheritance, you may be able to get away without using it for this class. However, we do recommend that you learn it.

PyCharm Installation:

Method 1: Download and install it from the PyCharm website. This is easy and if no automated install is required we recommend this method. Students and teachers can apply for a free professional version. Please note that Jupyter notebooks can only be viewed in the professional version.

Method 2: PyCharm Installation on ubuntu using umake

$ sudo add-apt-repository ppa:ubuntu-desktop/ubuntu-make

$ sudo apt-get update

$ sudo apt-get install ubuntu-make

Once the umake command is installed, use the next command to install PyCharm community edition:

$ umake ide pycharm

If you want to remove PyCharm installed using umake command, use this:

$ umake -r ide pycharm

Method 2: PyCharm installation on ubuntu using PPA

$ sudo add-apt-repository ppa:mystic-mirage/pycharm

$ sudo apt-get update

$ sudo apt-get install pycharm-community

PyCharm also has a Professional (paid) version that can be installed using the following command:

$ sudo apt-get install pycharm

Once installed, go to your VM dashboard and search for PyCharm.

2.1.5 - Google Colab

Gregor von Laszewski (laszewski@gmail.com)

In this section, we are going to introduce you, how to use Google Colab to run deep learning models.

Introduction to Google Colab

This video contains the introduction to Google Colab. In this section we will be learning how to start a Google Colab project.

[Video

Programming in Google Colab

In this video, we will learn how to create a simple, Colab Notebook.

Required Installations

pip install numpy

[Video

Benchmarking in Google Colab with Cloudmesh

In this video, we learn how to do a basic benchmark with Cloudmesh tools. Cloudmesh StopWatch will be used in this tutorial.

Required Installations

pip install numpy

pip install cloudmesh-installer

pip install cloudmesh-common

[Video

2.1.6 - Language

Gregor von Laszewski (laszewski@gmail.com)

Statements and Strings

Let us explore the syntax of Python while starting with a print statement

print("Hello world from Python!")

This will print on the terminal

Hello world from Python!

The print function was given a string to process. A string is a sequence of characters. A character can be an alphabetic (A through Z, lower and upper case), numeric (any of the digits), white space (spaces, tabs, newlines, etc), syntactic directives (comma, colon, quotation, exclamation, etc), and so forth. A string is just a sequence of the character and typically indicated by surrounding the characters in double-quotes.

Standard output is discussed in the Section Linux.

So, what happened when you pressed Enter? The interactive Python program

read the line print ("Hello world from Python!"), split it into the

print statement and the "Hello world from Python!" string, and then

executed the line, showing you the output.

Comments

Comments in Python are followed by a #:

# This is a comment

Variables

You can store data into a variable to access it later. For instance:

hello = 'Hello world from Python!'

print(hello)

This will print again

Hello world from Python!

Data Types

Booleans

A boolean is a value that can have the values True or False. You

can combine booleans with boolean operators such as and and or

print(True and True) # True

print(True and False) # False

print(False and False) # False

print(True or True) # True

print(True or False) # True

print(False or False) # False

Numbers

The interactive interpreter can also be used as a calculator. For instance, say we wanted to compute a multiple of 21:

print(21 * 2) # 42

We saw here the print statement again. We passed in the result of the operation 21 * 2. An integer (or int) in Python is a numeric value without a fractional component (those are called floating point numbers, or float for short).

The mathematical operators compute the related mathematical operation to the provided numbers. Some operators are:

Operator Function

* multiplication

/ division

+ addition

- subtraction

** exponent

Exponentiation $x^y$ is written as x**y is x to the yth power.

You can combine floats and ints:

print(3.14 * 42 / 11 + 4 - 2) # 13.9890909091

print(2**3) # 8

Note that operator precedence is important. Using parenthesis to indicate affect the order of operations gives a difference results, as expected:

print(3.14 * (42 / 11) + 4 - 2) # 11.42

print(1 + 2 * 3 - 4 / 5.0) # 6.2

print( (1 + 2) * (3 - 4) / 5.0 ) # -0.6

Module Management

A module allows you to logically organize your Python code. Grouping related code into a module makes the code easier to understand and use. A module is a Python object with arbitrarily named attributes that you can bind and reference. A module is a file consisting of Python code. A module can define functions, classes, and variables. A module can also include runnable code.

Import Statement

When the interpreter encounters an import statement, it imports the module if the module is present in the search path. A search path is a list of directories that the interpreter searches before importing a module. The from…import Statement Python’s from statement lets you import specific attributes from a module into the current namespace. It is preferred to use for each import its own line such as:

import numpy

import matplotlib

When the interpreter encounters an import statement, it imports the module if the module is present in the search path. A search path is a list of directories that the interpreter searches before importing a module.

The from … import Statement

Python’s from statement lets you import specific attributes from a module into the current namespace. The from … import has the following syntax:

from datetime import datetime

Date Time in Python

The datetime module supplies classes for manipulating dates and times in

both simple and complex ways. While date and time arithmetic is

supported, the focus of the implementation is on efficient attribute

extraction for output formatting and manipulation. For related

functionality, see also the time and calendar modules.

The import Statement You can use any Python source file as a module by executing an import statement in some other Python source file.

from datetime import datetime

This module offers a generic date/time string parser which is able to parse most known formats to represent a date and/or time.

from dateutil.parser import parse

pandas is an open-source Python library for data analysis that needs to be imported.

import pandas as pd

Create a string variable with the class start time

fall_start = '08-21-2018'

Convert the string to datetime format

datetime.strptime(fall_start, '%m-%d-%Y') \#

datetime.datetime(2017, 8, 21, 0, 0)

Creating a list of strings as dates

class_dates = [

'8/25/2017',

'9/1/2017',

'9/8/2017',

'9/15/2017',

'9/22/2017',

'9/29/2017']

Convert Class_dates strings into datetime format and save the list into

variable a

a = [datetime.strptime(x, '%m/%d/%Y') for x in class_dates]

Use parse() to attempt to auto-convert common string formats. Parser must be a string or character stream, not list.

parse(fall_start) # datetime.datetime(2017, 8, 21, 0, 0)

Use parse() on every element of the Class_dates string.

[parse(x) for x in class_dates]

# [datetime.datetime(2017, 8, 25, 0, 0),

# datetime.datetime(2017, 9, 1, 0, 0),

# datetime.datetime(2017, 9, 8, 0, 0),

# datetime.datetime(2017, 9, 15, 0, 0),

# datetime.datetime(2017, 9, 22, 0, 0),

# datetime.datetime(2017, 9, 29, 0, 0)]

Use parse, but designate that the day is first.

parse (fall_start, dayfirst=True)

# datetime.datetime(2017, 8, 21, 0, 0)

Create a dataframe. A DataFrame is a tabular data structure comprised of

rows and columns, akin to a spreadsheet, database table. DataFrame is a

group of Series objects that share an index (the column names).

import pandas as pd

data = {

'dates': [

'8/25/2017 18:47:05.069722',

'9/1/2017 18:47:05.119994',

'9/8/2017 18:47:05.178768',

'9/15/2017 18:47:05.230071',

'9/22/2017 18:47:05.230071',

'9/29/2017 18:47:05.280592'],

'complete': [1, 0, 1, 1, 0, 1]}

df = pd.DataFrame(

data,

columns = ['dates','complete'])

print(df)

# dates complete

# 0 8/25/2017 18:47:05.069722 1

# 1 9/1/2017 18:47:05.119994 0

# 2 9/8/2017 18:47:05.178768 1

# 3 9/15/2017 18:47:05.230071 1

# 4 9/22/2017 18:47:05.230071 0

# 5 9/29/2017 18:47:05.280592 1

Convert df[`date`] from string to datetime

import pandas as pd

pd.to_datetime(df['dates'])

# 0 2017-08-25 18:47:05.069722

# 1 2017-09-01 18:47:05.119994

# 2 2017-09-08 18:47:05.178768

# 3 2017-09-15 18:47:05.230071

# 4 2017-09-22 18:47:05.230071

# 5 2017-09-29 18:47:05.280592

# Name: dates, dtype: datetime64[ns]

Control Statements

Comparison

Computer programs do not only execute instructions. Occasionally, a choice needs to be made. Such as a choice is based on a condition. Python has several conditional operators:

Operator Function

> greater than

< smaller than

== equals

!= is not

Conditions are always combined with variables. A program can make a choice using the if keyword. For example:

x = int(input("Guess x:"))

if x == 4:

print('Correct!')

In this example, You guessed correctly! will only be printed if the

variable x equals four. Python can also execute

multiple conditions using the elif and else keywords.

x = int(input("Guess x:"))

if x == 4:

print('Correct!')

elif abs(4 - x) == 1:

print('Wrong, but close!')

else:

print('Wrong, way off!')

Iteration

To repeat code, the for keyword can be used. For example, to display the

numbers from 1 to 10, we could write something like this:

for i in range(1, 11):

print('Hello!')

The second argument to the range, 11, is not inclusive, meaning that the

loop will only get to 10 before it finishes. Python itself starts

counting from 0, so this code will also work:

for i in range(0, 10):

print(i + 1)

In fact, the range function defaults to starting value of 0, so it is equivalent to:

for i in range(10):

print(i + 1)

We can also nest loops inside each other:

for i in range(0,10):

for j in range(0,10):

print(i,' ',j)

In this case, we have two nested loops. The code will iterate over the entire coordinate range (0,0) to (9,9)

Datatypes

Lists

see: https://www.tutorialspoint.com/python/python_lists.htm

Lists in Python are ordered sequences of elements, where each element can be accessed using a 0-based index.

To define a list, you simply list its elements between square brackets ‘[ ]':

names = [

'Albert',

'Jane',

'Liz',

'John',

'Abby']

# access the first element of the list

names[0]

# 'Albert'

# access the third element of the list

names[2]

# 'Liz'

You can also use a negative index if you want to start counting elements from the end of the list. Thus, the last element has index -1, the second before the last element has index -2 and so on:

# access the last element of the list

names[-1]

# 'Abby'

# access the second last element of the list

names[-2]

# 'John'

Python also allows you to take whole slices of the list by specifying a beginning and end of the slice separated by a colon

# the middle elements, excluding first and last

names[1:-1]

# ['Jane', 'Liz', 'John']

As you can see from the example, the starting index in the slice is inclusive and the ending one, exclusive.

Python provides a variety of methods for manipulating the members of a list.

You can add elements with append’:

names.append('Liz')

names

# ['Albert', 'Jane', 'Liz',

# 'John', 'Abby', 'Liz']

As you can see, the elements in a list need not be unique.

Merge two lists with ‘extend’:

names.extend(['Lindsay', 'Connor'])

names

# ['Albert', 'Jane', 'Liz', 'John',

# 'Abby', 'Liz', 'Lindsay', 'Connor']

Find the index of the first occurrence of an element with ‘index’:

names.index('Liz') \# 2

Remove elements by value with ‘remove’:

names.remove('Abby')

names

# ['Albert', 'Jane', 'Liz', 'John',

# 'Liz', 'Lindsay', 'Connor']

Remove elements by index with ‘pop’:

names.pop(1)

# 'Jane'

names

# ['Albert', 'Liz', 'John',

# 'Liz', 'Lindsay', 'Connor']

Notice that pop returns the element being removed, while remove does not.

If you are familiar with stacks from other programming languages, you can use insert and ‘pop’:

names.insert(0, 'Lincoln')

names

# ['Lincoln', 'Albert', 'Liz',

# 'John', 'Liz', 'Lindsay', 'Connor']

names.pop()

# 'Connor'

names

# ['Lincoln', 'Albert', 'Liz',

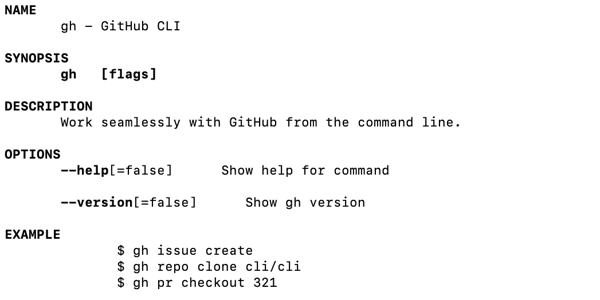

# 'John', 'Liz', 'Lindsay']